Introduction

Imagine your mobile app could understand voice commands, recognize gestures, or detect objects, without needing cloud access.

No lag. No heavy battery drain. Just quick, smart reactions right on the device.

Welcome to the world of TinyML, where artificial intelligence fits in your pocket, runs on a coin-cell battery, and does not require an internet connection.

It’s changing everything about how we build mobile apps.

Here’s the thing:

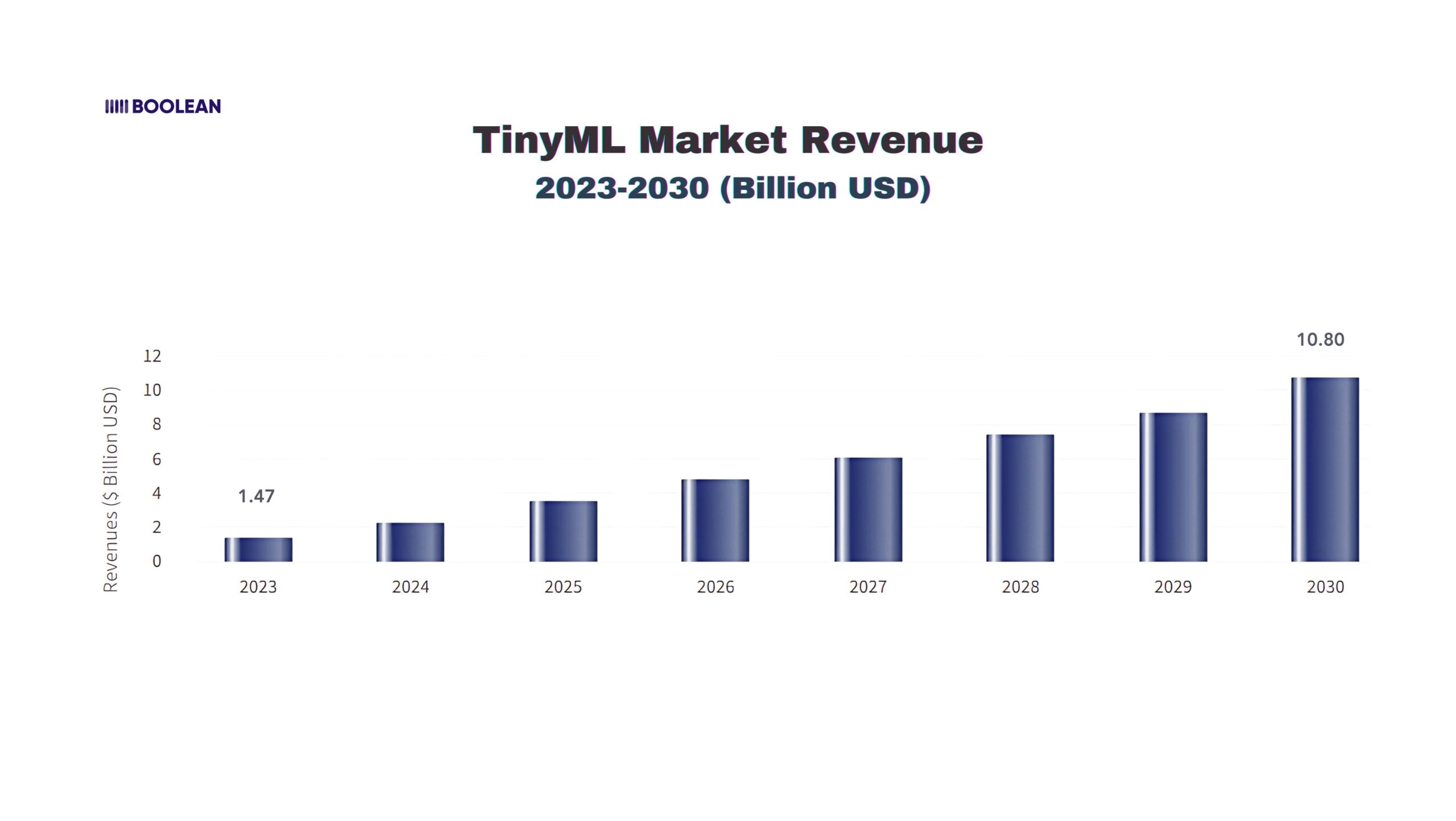

The global TinyML market was valued at USD 1.47 billion in 2023. By 2030? It is predicted to hit USD 10.80 billion. That is a projected annual growth rate of 24.8%.

These are not just numbers; they are a sign that something big is happening.

But what is TinyML, really?

Think of it this way. Traditional AI is like a supercomputer that analyzes your data in the cloud, and TinyML?

It’s like having a tiny genius living right inside your phone. Tiny machine learning (TinyML) is about creating and using machine learning technologies for small, low-power devices.

No cloud needed. No waiting. No privacy concerns about sending your data elsewhere.

Your fitness app can count reps without uploading your workout videos. Your camera app can blur backgrounds instantly. Your voice assistant can understand “Hey” without recording everything you say.

The best part is you don’t need to be a machine learning expert to get started.

Maybe you’re building your first app. Maybe you’re looking to add that “wow” feature users love.

Or maybe you’re just curious about where mobile development is heading.

Whatever brought you here, you are in the right place.

And today, we are going to show you how to make that magic in your own app.

Are you ready to make your apps more clever than ever before? Let’s dive in.

What is TinyML?

Okay, let’s keep it simple.

TinyML means tiny machine learning. But don’t let the “tiny” part fool you.

It means bringing the power of AI to small hardware, such as sensors, microcontrollers, and mobile gadgets that barely sip any battery.

Think of devices that are so small, they don’t even have a proper operating system.

Yet, with TinyML, they can still do “smart” things. Like recognizing a wake word (“Hey Siri”), detecting when you’re moving, or even figuring out environmental sounds.

And they do all this on-device, with no help from the cloud.

In the past, running AI meant that you needed a powerful server or cloud infrastructure. Devices would collect data, send it over the internet, wait for the AI to process it, and then receive a response.

It worked, but it was not exactly fast or battery-friendly. Also, if the internet went down, good luck.

TinyML changes that.

It brings machine learning right to where the data is created: inside the device itself. The AI is built-in, always ready, and doesn’t need to “phone home” to process data.

This means faster reactions, more privacy (because the data does not leave the device), and longer battery life.

Here are some real-world examples:

- Fitness trackers that count your push-ups.

- Earbuds that adjust noise cancellation based on your environment.

- Smartphones that detect gestures without needing cloud AI.

All of these use TinyML.

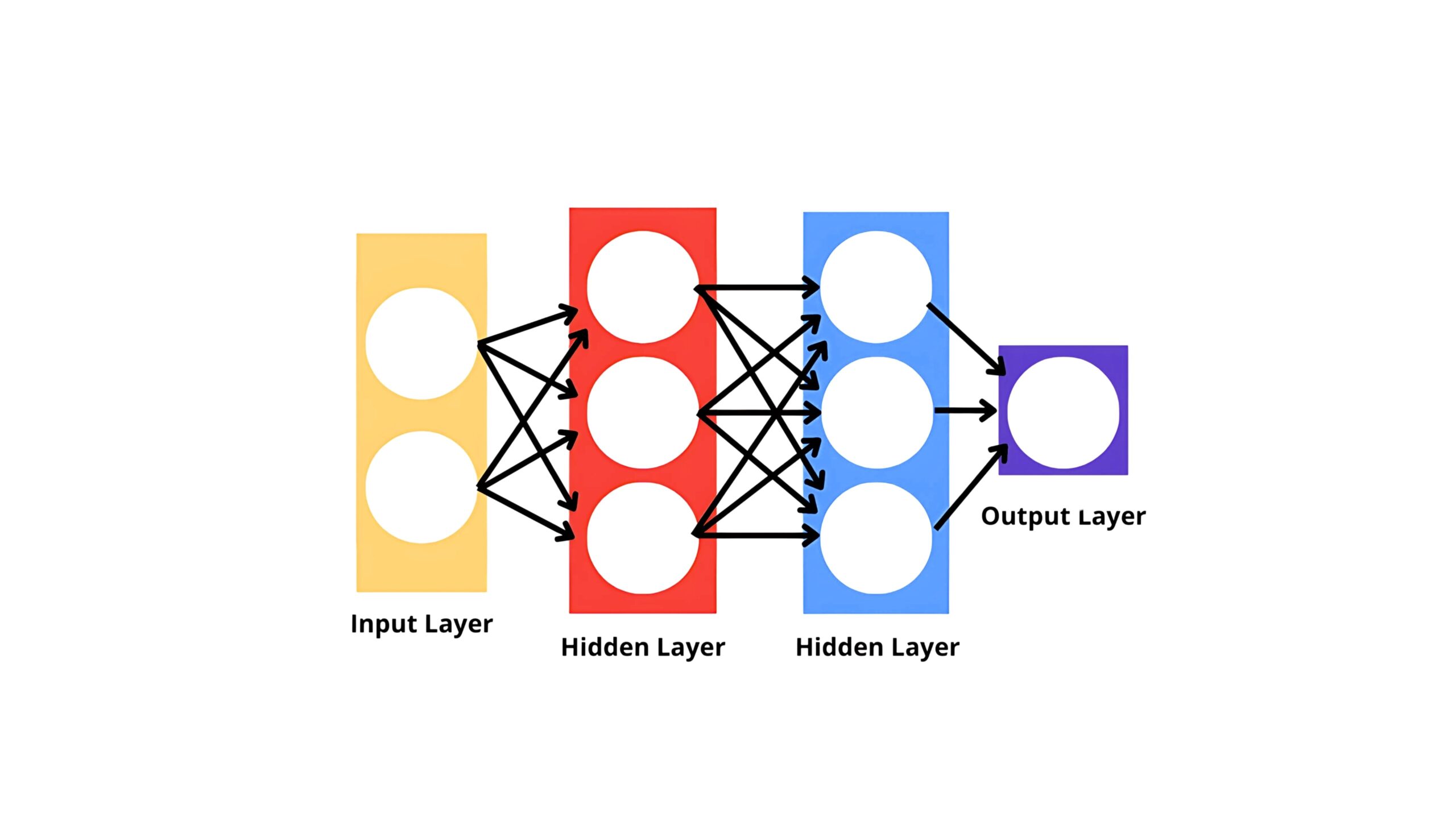

The above image represents a small neural network that can run on small, low-power devices such as a microcontroller.

- Input layer: Receives the sensor data (e.g., from a microphone, accelerometer, or camera).

- Hidden layers: Perform lightweight computation to process the data. These layers are kept small to fit the limited memory and processing power of small devices.

- Output layer: Produces the result, such as a detected keyword, a recognized gesture, or an identified object.

TinyML uses simple neural networks like this one to bring real-time edge AI capabilities to small devices, enabling smart features without the need for a powerful computer or cloud connection.
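To make the three layers concrete, here is a minimal sketch of a forward pass through such a network in plain Python. The layer sizes, the weight values, and the `forward` helper are all made up for illustration; a real model learns its weights during training.

```python
import math

def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hypothetical weights: 3 sensor inputs -> 2 hidden units -> 2 output classes.
HIDDEN_W = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0], [-1.0, 1.0]]
OUTPUT_B = [0.0, 0.0]

def forward(sensor_reading):
    """Input layer -> hidden layer -> output layer."""
    hidden = relu(dense(sensor_reading, HIDDEN_W, HIDDEN_B))
    return softmax(dense(hidden, OUTPUT_W, OUTPUT_B))

probabilities = forward([0.9, 0.1, 0.3])
print(probabilities)  # one probability per output class
```

The whole model here is 14 numbers, which is the point: the fewer weights a network has, the easier it is to fit on a tiny device.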

But there’s a catch: these devices are tiny, so the AI models need to be tiny too. You can’t run a massive model like the one behind ChatGPT on a microcontroller.

TinyML is about squeezing AI into very tight places. It is a delicate balance between size, speed, and accuracy.

Nevertheless, the potential is huge.

Imagine creating a mobile app where AI features work immediately, offline, and without draining users’ batteries. That’s what TinyML enables.

It’s not just a tech buzzword. It’s the future of AI at the edge.

Traditional AI vs TinyML: What’s the Main Difference?

Before we dive into building with TinyML, stop for a second.

You might be thinking, “Isn’t AI already running on my phone and apps?” That is a very good question.

Yes, AI is everywhere. But how and where the AI runs makes a big difference.

Most of the AI features you use today, such as voice assistants, photo filters, or recommendation engines, depend heavily on cloud computing.

Your device collects data, sends it to a cloud server where heavy AI models process it, and then the results return to your app.

It works, but it has shortcomings:

- It’s slower because of network delays.

- It drains more battery.

- And sometimes, it doesn’t feel right to have your data flying around on remote servers.

This is why TinyML matters.

Instead of sending data to the cloud, it brings the AI to the device. It’s like having a mini brain inside your gadget, always ready and always private.

Here is a quick comparison to make it crystal clear:

| Aspect | Traditional AI (Cloud-based) | TinyML (On-device AI) |
|---|---|---|
| Where AI Runs | In big, powerful cloud servers | Right inside your device (microcontrollers, sensors) |
| Internet Dependency | Needs a constant internet connection | No internet required, works offline |
| Response Time (Latency) | Slower, depends on network traffic | Super-fast, real-time responses |
| Battery Usage | Drains battery due to constant data transmission | Low power consumption |
| Privacy & Security | Data gets sent to the cloud (privacy risk) | Data stays on-device, more secure |
| Hardware Requirements | Needs servers, GPUs, high-performance chips | Runs on tiny, low-cost microcontrollers |
| Best for | Heavy AI tasks (e.g., language models, cloud AI services) | Lightweight tasks (e.g., keyword detection, sensor alerts) |

Traditional AI is like having a remote brain in the cloud. TinyML is like giving your device its own mini brain.

You can build AI-powered features that respond immediately and keep working even when users are offline.

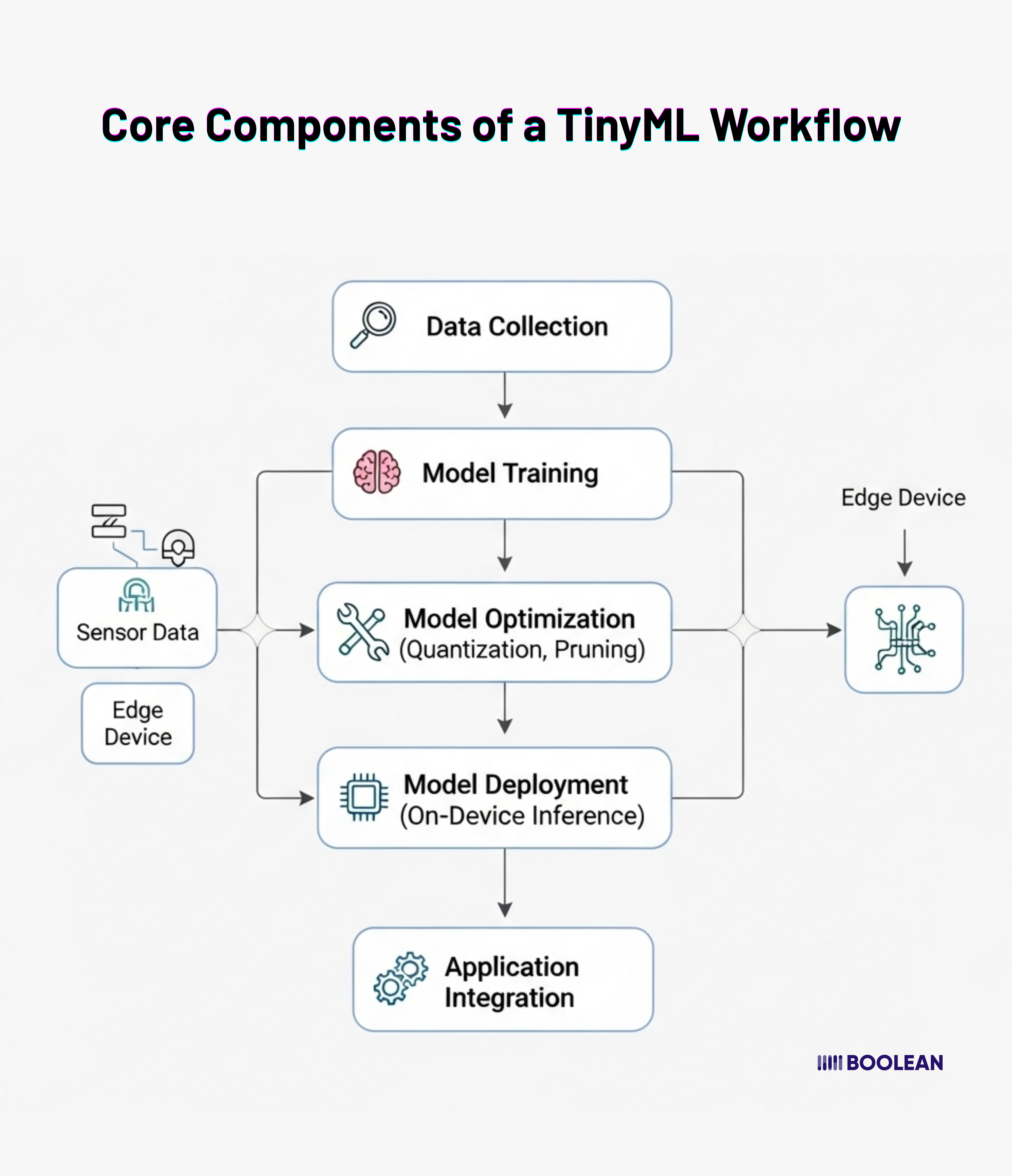

Core Components of a TinyML Workflow

If you are wondering how to actually get from an idea to a working feature in a mobile app, you are in the right place.

TinyML may sound like advanced AI, but once you break it down, the workflow is surprisingly simple.

Think of it as a simple assembly line where the data comes in, the AI gets trained and trimmed down, and is finally shipped to your device.

Let me walk you through each step:

- Data Collection (Feeding Your AI with Real-World Info)

Everything starts with data. Just as humans learn from experience, AI learns from examples.

In TinyML, this data comes from sensors: microphones, cameras, accelerometers, temperature sensors, you name it. For example:

- If you are creating a gesture-detection app, you will collect accelerometer data.

- If it is a wake-word detector, you will record tons of voice samples.

- If it is recognizing specific sounds, like a dog barking, you will need audio clips of barking.

You are essentially teaching the AI by showing it what you want it to identify, over and over again.

Pro Tip: The better your data, the smarter your AI will be. Garbage in, garbage out!

- Model Training (Teaching the AI What Matters)

Once you have your data, it’s time to train the model. This is where AI starts learning patterns.

But here’s the good news:

- You don’t train on the device itself. You’ll use your laptop or a cloud service.

- Think of this like a school for your AI. You’re showing it examples, giving it feedback, and helping it understand “this is a wave gesture” or “this is a clap sound.”

It’s important to know that TinyML models are usually small, specialized models. You’re not building something huge like ChatGPT. You’re creating a model that’s laser-focused on doing one task very well.

- Model Optimization (Trimming Down the AI Brain)

Now here comes the TinyML magic.

The model you trained is probably too “big” to fit on a microcontroller. So, you need to optimize it, a fancy way of saying: “Let’s make this model smaller and faster without losing its smarts.”

Two popular techniques are:

- Quantization: Reducing the precision of the numbers in the model (e.g., from 32-bit floats to 8-bit integers).

- Pruning: Cutting parts of the model that do not contribute much to the results.

It’s like packing a suitcase for a weekend trip; you don’t need your entire wardrobe, just the essentials.

The result? A lightweight AI model that’s small enough to live comfortably on a tiny device.
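Both techniques can be sketched in a few lines of plain Python. This is a simplified illustration, not what any particular toolkit does internally: the weights are made up, the quantization shown is the symmetric variant (one common scheme), and `quantize_int8`, `dequantize`, and `prune_by_magnitude` are hypothetical helper names.

```python
def quantize_int8(weights):
    """Symmetric quantization: store each float as an integer in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # 1.0 guards all-zero input
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    drop_count = int(len(weights) * fraction)
    by_size = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_size[:drop_count])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]

weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2]  # made-up layer weights

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized)  # small integers: 1 byte each instead of 4
print(max(abs(a - b) for a, b in zip(weights, restored)))  # rounding error

print(prune_by_magnitude(weights, 0.5))  # the smallest half becomes zero
```

Quantization cuts storage per weight from 4 bytes to 1 at the cost of a small rounding error. Pruned zeros only save space once the model is stored in a sparse or compressed form, so the actual gain depends on the tooling.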

- Model Deployment (Moving AI into Your Device)

Once your AI model has been slimmed down, it’s ready to be deployed onto an edge device.

This is when your AI actually gets “installed” into hardware, like a smartphone chip, a microcontroller, or a sensor module.

From this point on, the device doesn’t need the cloud to process data. The AI lives inside the gadget, ready to make decisions on its own.

Whether it’s detecting movement, recognizing a sound, or sensing a pattern, it all happens locally and instantly.

- Application Integration (Bringing it All to Life)

Now comes the exciting part: turning it into a feature your users will love.

This step is where you, the developer, take that AI model and integrate it into a real-world application:

- Maybe you’ll build a mobile app that vibrates when a hand wave is detected.

- Or an app that turns off music automatically when it hears you snoring.

- Or maybe a smartwatch feature that alerts you if it senses unusual heart rhythms.

This is the place where TinyML stops being a “cool tech demo” and becomes something practical and valuable for end-users.

Pro Tip: Always design with the user experience in mind. TinyML is most powerful when it makes the app feel fast, smooth, and more personal.
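One practical detail at this stage: a model usually produces a score for every short slice of sensor data, while the app wants a single event per gesture. Here is a minimal sketch of one way to bridge that gap, assuming the model emits a confidence score per frame. The `DetectionDebouncer` class, its threshold, and the frame scores are hypothetical.

```python
class DetectionDebouncer:
    """Fire once when `required` consecutive frames score above `threshold`."""

    def __init__(self, threshold=0.8, required=3):
        self.threshold = threshold
        self.required = required
        self.streak = 0
        self.active = False

    def update(self, score):
        """Feed one per-frame model score; return True only on a new detection."""
        if score >= self.threshold:
            self.streak += 1
        else:
            self.streak = 0
            self.active = False  # re-arm once the gesture has ended
        if self.streak >= self.required and not self.active:
            self.active = True
            return True
        return False

debouncer = DetectionDebouncer()
frame_scores = [0.1, 0.9, 0.95, 0.2, 0.85, 0.9, 0.92, 0.97, 0.3]
events = [i for i, score in enumerate(frame_scores) if debouncer.update(score)]
print(events)  # indices of the frames where the app would react
```

The short burst at frames 1 and 2 is ignored, and the longer run triggers exactly one event, so the phone vibrates once per wave rather than once per frame.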

So, in summary, the TinyML workflow isn’t some mysterious process. It’s a simple, repeatable flow:

- Collect Data →

- Train AI Model →

- Optimize (Shrink) Model →

- Deploy to Device →

- Integrate into an App

Each step has its own tools and best practices (which we’ll talk about next), but once you “get” this flow, you’ll see how practical and accessible TinyML really is.

Tools & Frameworks to Get Started with TinyML

Okay, so you are excited to create TinyML-powered mobile apps, but now you are asking, “What tools do I really need to start?”

You are not alone! Good news? You do not need to reinvent the wheel.

The TinyML ecosystem is rich in frameworks, SDKs, and tools that make it easier than ever to bring AI to edge devices.

Let’s break it down.

- TensorFlow Lite (TFLite)

If you are just starting out, TensorFlow Lite (TFLite) is a lightweight version of Google’s TensorFlow, designed specifically for mobile and embedded devices.

- Supports quantization, pruning, and other model optimizations.

- Works with Android, iOS, and microcontrollers.

- Tons of community support and tutorials to lean on.

Pro Tip: TFLite is perfect for “on-device inference,” so your AI models can run offline and with low latency.

- Core ML (Apple’s Edge AI Framework)

For iOS developers, Core ML is Apple’s official framework to run AI models on iPhones, iPads, and even Apple Watches.

- It’s deeply integrated with iOS hardware (like the Neural Engine).

- Supports converting models from TensorFlow, PyTorch, and ONNX.

- Ideal for privacy-focused apps, since data stays on-device.

- ONNX Runtime Mobile (The Cross-Platform Powerhouse)

ONNX Runtime Mobile is great if you want flexibility. It lets you take models trained in different frameworks (TensorFlow, PyTorch, etc.) and run them on mobile devices.

- Optimized for performance and size.

- A solid pick if you’re aiming for a composable architecture, where modular components can work across platforms with ease.

Composable architecture is key when building apps that may utilize LLMs (Large Language Models) for specific features, while leveraging TinyML for edge tasks such as gesture detection.

- Edge Impulse (Low-code Platform for Beginners)

Not a hardcore AI engineer? No problem.

Edge Impulse is a beginner-friendly platform that simplifies data collection, model training, and deployment, all through an easy-to-use interface.

- Great for prototyping.

- Helps you deploy models to edge devices without writing too much custom code.

- Integrates nicely into CI/CD pipelines for mobile AI apps.

- CI/CD Pipelines (Automating Your AI Deployments)

As your TinyML-powered app grows, you’ll want to automate the boring tasks.

CI/CD (continuous integration/continuous deployment) workflows ensure that every time you update your AI model, it is automatically built, tested, and shipped with your mobile app build.

This is especially important when you combine TinyML with LLMs in mobile apps. You are likely to end up with a hybrid stack where cloud-based LLMs handle the heavy tasks, while TinyML models handle low-latency features offline.

Keeping both up to date through CI/CD is a game-changer for the pace of app development.
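As one example of an automated check, a pipeline step can refuse to ship a model that has grown past its size budget. The sketch below is an assumption about how such a gate might look, not a feature of any specific CI system; `check_model_budget`, the 50,000-byte budget, and the stand-in file are hypothetical.

```python
import os
import tempfile

def check_model_budget(model_path, max_bytes):
    """Return (ok, size) so a CI step can fail when the model outgrows its budget."""
    size = os.path.getsize(model_path)
    return size <= max_bytes, size

# Demo with a stand-in file; in CI this would be the real exported model.
with tempfile.NamedTemporaryFile(suffix=".tflite", delete=False) as handle:
    handle.write(b"\x00" * 20_000)  # pretend the model is 20,000 bytes
    demo_path = handle.name

ok, size = check_model_budget(demo_path, max_bytes=50_000)
print(f"model is {size} bytes, within budget: {ok}")
os.remove(demo_path)
```

In a real pipeline, the script would exit with a non-zero status when `ok` is false so the build stops before the oversized model reaches users.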

- Arduino IDE & Arduino Libraries

The classic Arduino development environment, now with libraries that support TinyML models.

- Familiar if you’ve used Arduino before.

- Easy to upload code and models to your board.

- Libraries like Arduino_TensorFlowLite make integration simple.

How to get started:

Install the Arduino IDE, add the necessary libraries, and explore the Arduino TinyML examples.

- MicroPython & CircuitPython

These are lightweight versions of Python that run on microcontrollers.

- Write code in Python, which is beginner-friendly.

- Some support for running simple ML models (though more limited than TensorFlow Lite for Microcontrollers).

- Great for quick prototyping and learning.

How to get started:

Visit micropython.org or circuitpython.org to find compatible boards and tutorials.

Other Helpful Tools

- Audio and Sensor Data Collection Apps: Use your phone or computer to record data for training.

- Jupyter Notebooks: Great for experimenting with data and models before deploying to your device.

- Model Optimization Tools: Tools like TensorFlow Model Optimization Toolkit help shrink your models to fit on tiny devices.

So, Where Should You Start?

- If you’re a mobile dev who loves code, start with TensorFlow Lite or Core ML.

- If you want drag-and-drop simplicity, explore Edge Impulse.

- If you’re aiming for a scalable, modular app structure, ONNX Runtime Mobile gives you that flexibility.

- And don’t forget that setting up CI/CD for mobile AI apps early will save you tons of deployment headaches later on.

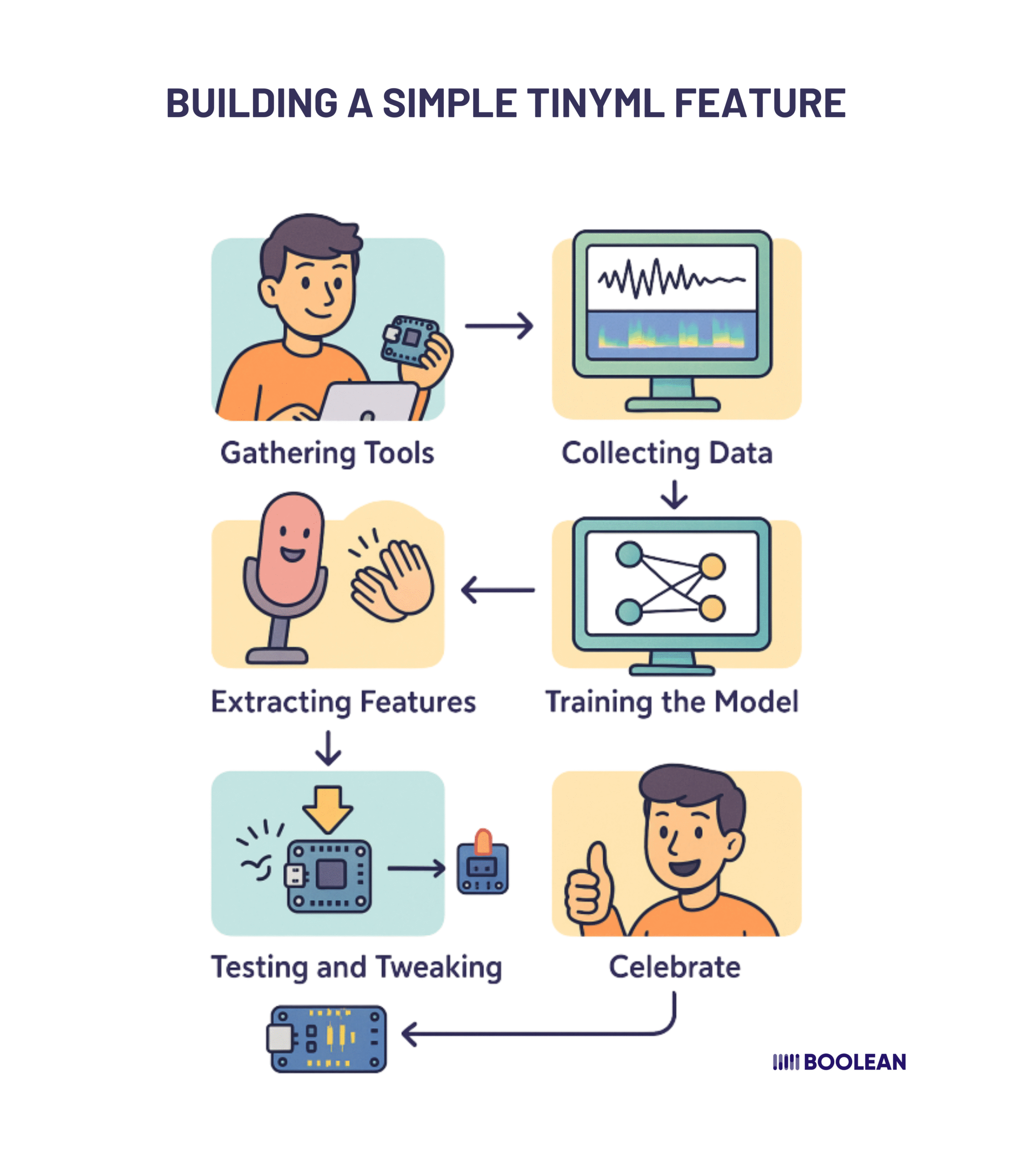

Step-by-Step: Building a Simple TinyML Feature

Let’s build a simple TinyML feature together!

Imagine this:

You want to make a small device that recognizes when you clap your hands.

No cloud. No fancy hardware. Just a small microcontroller (such as an Arduino Nano 33 BLE Sense), a microphone, and a dash of machine learning magic.

Sounds fun? Let’s do this step by step.

Step 1: Gather Your Tools

First of all, make sure you have everything you need:

- A microcontroller with a microphone (e.g., Arduino Nano 33 BLE Sense).

- A USB cable to connect it to your computer.

- The Arduino IDE installed on your machine (it’s free!).

- And most importantly… a little curiosity and patience!

No fancy lab or AI degree needed. You’re already halfway there.

Step 2: Collect Some Data (Teach Your Device What a Clap Sounds Like)

Time to gather some “training data.” Think of this as teaching by example.

Here’s what to do:

- Open the Arduino IDE and load a simple sketch to record audio from the microphone.

- Record yourself clapping, and try different variations:

  - Loud claps

  - Soft claps

  - Fast, rapid claps

  - Slow, spaced-out claps

- Don’t forget to also record background noise (silence, talking, ambient sounds). This helps the AI learn the difference between “clap” and “not clap.”

Pro Tip: Save your recordings with clear names like “clap1.wav”, “noise1.wav”. Organization will save you time later.
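A consistent naming scheme also lets a script derive the label for each recording and check that both classes are well represented. This is a small sketch that assumes the `clap1.wav` / `noise1.wav` pattern from the tip above; `label_from_filename` and the file list are hypothetical.

```python
import re
from collections import Counter

def label_from_filename(filename):
    """'clap12.wav' -> 'clap': strip the extension and the trailing index."""
    stem = filename.rsplit(".", 1)[0]
    return re.sub(r"\d+$", "", stem)

recordings = ["clap1.wav", "clap2.wav", "noise1.wav", "clap3.wav", "noise2.wav"]
counts = Counter(label_from_filename(name) for name in recordings)
print(counts)  # how many recordings exist per label
```

If one label ends up with far fewer recordings than the other, that is usually a sign to go back and record more of it before training.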

Step 3: Extract Features

Raw audio files are a bit too much for our tiny microcontroller to process. We need to simplify.

Here’s how:

- Get help from a tool like Edge Impulse Studio (super beginner-friendly) or a Python library like librosa.

- Convert those recordings into features, like spectrograms or MFCCs (Mel-Frequency Cepstral Coefficients). Think of this as turning sound into a visual fingerprint that the AI can easily “see.”

Don’t stress; these tools automate most of the hard work for you.
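To show what “turning sound into a fingerprint” means, here is a deliberately simplified spectrogram in plain Python. It is a stand-in for what Edge Impulse or librosa do far more efficiently: it uses a naive DFT, skips windowing and the mel scaling that MFCCs add, and runs on a synthetic tone instead of a real recording. The frame size, hop, and sample rate are assumptions.

```python
import cmath
import math

def magnitude_spectrum(frame):
    """Naive DFT: magnitude of each frequency bin up to the Nyquist limit."""
    n = len(frame)
    return [abs(sum(sample * cmath.exp(-2j * math.pi * k * t / n)
                    for t, sample in enumerate(frame)))
            for k in range(n // 2 + 1)]

def spectrogram(samples, frame_size=64, hop=32):
    """Slice the signal into overlapping frames and transform each one."""
    return [magnitude_spectrum(samples[start:start + frame_size])
            for start in range(0, len(samples) - frame_size + 1, hop)]

# Synthetic test tone instead of a real recording: 1 kHz sampled at 8 kHz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(256)]

frames = spectrogram(tone)
loudest_bin = max(range(len(frames[0])), key=lambda k: frames[0][k])
print(len(frames), "frames of", len(frames[0]), "frequency bins")
print("strongest frequency:", loudest_bin * SAMPLE_RATE / 64, "Hz")
```

Each frame becomes a short list of numbers describing which frequencies were present at that moment, and that grid of numbers is what the model trains on.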

Step 4: Train a Simple Model

Now comes the fun part: training your AI model.

You’ll:

- Load your extracted features into Edge Impulse, or into a TensorFlow training script on your computer.

- Set up a tiny classifier. This could be a small neural network or even a simple decision tree.

- Train the model to recognize patterns of a “clap” versus “not a clap.”

Test it right there. Does it recognize your claps? If it’s confused, that’s okay, just collect a bit more data or adjust your model settings.
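For a feel of what training does under the hood, here is a minimal sketch that swaps in an even simpler classifier than the ones mentioned above: logistic regression (effectively a single neuron), trained with gradient descent in plain Python. The two features per recording and all the numbers are invented for illustration; a real project would use the features from Step 3.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, features):
    """Probability that the features belong to a clap."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_logistic(samples, labels, epochs=500, learning_rate=0.5):
    """Plain gradient descent on the log-loss; returns (weights, bias)."""
    weights, bias = [0.0] * len(samples[0]), 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

# Made-up features per recording: [peak loudness, high-frequency energy], 0..1.
samples = [[0.9, 0.8], [0.8, 0.9], [0.95, 0.7], [0.2, 0.1], [0.3, 0.2], [0.1, 0.3]]
labels = [1, 1, 1, 0, 0, 0]  # 1 = clap, 0 = not a clap

weights, bias = train_logistic(samples, labels)
print(predict(weights, bias, [0.85, 0.75]))  # should lean towards "clap"
print(predict(weights, bias, [0.15, 0.2]))   # should lean towards "not a clap"
```

The loop nudges the weights a little after every example, which is the same basic idea that larger neural networks follow with many more weights.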

Step 5: Deploy to Your Device (Bring Your AI to Life)

Now it’s time to take your trained model and put it onto your device.

Here’s how:

- Convert the model into a .tflite (TensorFlow Lite) file.

- Upload it to your microcontroller using the Arduino IDE or via Edge Impulse’s deployment options.

- Write a simple Arduino sketch that:

  - Listens to the microphone input.

  - Extracts features in real-time.

  - Runs the model to check if a “clap” is detected.
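Many microcontrollers have no file system, so a common way to get a `.tflite` file onto the board is to embed its bytes as a C array that is compiled into the sketch (the `xxd -i` command-line tool produces this kind of output). Here is a minimal Python sketch of that conversion; `to_c_array`, the `g_model` name, and the stand-in bytes are hypothetical.

```python
def to_c_array(model_bytes, name="g_model"):
    """Render raw model bytes as C source that a sketch can compile in."""
    lines = []
    for offset in range(0, len(model_bytes), 12):
        chunk = model_bytes[offset:offset + 12]
        lines.append("  " + ", ".join(f"0x{byte:02x}" for byte in chunk) + ",")
    body = "\n".join(lines)
    return (f"const unsigned char {name}[] = {{\n{body}\n}};\n"
            f"const unsigned int {name}_len = {len(model_bytes)};\n")

# Stand-in bytes; in practice: model_bytes = open("model.tflite", "rb").read()
stand_in = bytes([0x1c, 0x00, 0x00, 0x00, 0x54, 0x46, 0x4c, 0x33])
print(to_c_array(stand_in))
```

The generated text can be saved as a header or source file next to the Arduino sketch, which then hands the array to the on-device inference library.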

This is where it all starts to feel real.

Step 6: Test and Fine-Tune

Give it a go: clap near your device.

Did it react? If not, don’t panic. TinyML is a bit like training a puppy; it might need a few tries before it gets it right.

Here’s what you can do:

- Record more diverse data (different environments, hand positions).

- Tweak your model’s architecture or thresholds.

- Fine-tune the feature extraction parameters.

Every iteration gets you closer to perfection.
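When tweaking thresholds, it helps to measure the trade-off rather than guess. The sketch below sweeps a few detection thresholds over a small test set and reports precision (how many detections were real claps) and recall (how many real claps were caught). The scores, labels, and threshold values are made up.

```python
def precision_recall(scores, labels, threshold):
    """Count hits and misses when every score >= threshold counts as a clap."""
    predicted = [score >= threshold for score in scores]
    true_pos = sum(p and l for p, l in zip(predicted, labels))
    false_pos = sum(p and not l for p, l in zip(predicted, labels))
    false_neg = sum((not p) and l for p, l in zip(predicted, labels))
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall

# Made-up model scores on a held-out test set (True = it really was a clap).
scores = [0.95, 0.9, 0.7, 0.65, 0.6, 0.4, 0.3, 0.1]
labels = [True, True, True, False, True, False, False, False]

for threshold in (0.5, 0.68, 0.8):
    precision, recall = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

A higher threshold means fewer false alarms but more missed claps, so the right value depends on which mistake is more annoying for your users.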

Take a moment and celebrate. You just built your first TinyML-powered feature from scratch.

Welcome to the world of TinyML in action. 🚀

Challenges and Limitations of TinyML

Okay, by now you are probably thinking that TinyML is great, and you are right!

But let’s take a break and talk about the other side of the story.

Like any technology, TinyML is not magic. It comes with its own set of challenges and limitations, which you should know about if you plan to use it in real-world applications.

Don’t worry, I am not here to scare you. This is about being realistic so that you can make smarter decisions.

- Limited Computing Power (Tiny Devices = Tiny Brains)

The biggest challenge?

You are working with microcontrollers and edge devices that have very limited processing power, memory, and battery life.

Unlike cloud AI systems backed by large-scale GPUs, TinyML models need to be incredibly light. This often means:

- Smaller models (sometimes at the cost of accuracy)

- Simplified computations

- Careful balancing between performance and resource usage

It’s a bit like teaching a goldfish to solve a Rubik’s Cube: possible, but you’ve got to simplify the puzzle.
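A quick back-of-the-envelope calculation shows how tight the budget is. The sketch below counts the parameters of a small, fully connected network and estimates its storage size at two precisions. The layer sizes are hypothetical, and the estimate covers only the weights, not the working memory the model needs while it runs.

```python
def dense_parameter_count(layer_sizes):
    """Weights plus biases for a stack of fully connected layers."""
    return sum(inputs * outputs + outputs
               for inputs, outputs in zip(layer_sizes, layer_sizes[1:]))

def model_size_bytes(parameter_count, bytes_per_parameter):
    return parameter_count * bytes_per_parameter

# Hypothetical network: 640 input features -> 32 -> 16 -> 2 classes.
params = dense_parameter_count([640, 32, 16, 2])
print(params, "parameters")
print(model_size_bytes(params, 4), "bytes as 32-bit floats")
print(model_size_bytes(params, 1), "bytes as 8-bit integers")
```

Even this modest network takes tens of kilobytes as floats, which is a meaningful share of the memory on a board that only has a few hundred kilobytes to begin with.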

- Accuracy Trade-offs (It Won’t Be Perfect)

TinyML is all about good-enough intelligence on-device.

But here’s the catch: You might have to sacrifice some accuracy to make the model small and efficient.

For simple tasks like:

- Wake-word detection (“Hey Siri”)

- Simple gesture or sound classification

TinyML performs beautifully.

But if you’re aiming for more complex tasks, like object detection in busy environments or language understanding, don’t expect cloud-level precision. You’ll need to set realistic expectations.

- Data Collection is a Hassle (But Critical)

Unlike large AI models trained on massive datasets from the internet, TinyML models are often custom-trained.

That means YOU need to collect data, lots of it.

- Variations of the gestures, sounds, or patterns you want to detect.

- Environmental noise, edge cases, and “negative examples.”

Data collection can be tedious, but it’s absolutely critical for a TinyML model to perform well in real-life situations.

- Debugging is Tough (No Fancy Dashboards Here)

On cloud platforms, debugging AI models is easy with cool visualizations and analytics tools.

But on tiny devices? Debugging can feel like detective work.

You often don’t get rich feedback or logs. You have to rely on:

- Blink patterns of LEDs

- Serial monitor outputs

- Careful testing of edge cases

This means patience and methodical troubleshooting are essential skills for a TinyML developer.

- Limited Flexibility (One-Task Wonders)

TinyML models are often designed to do one thing really well.

If you need multi-functional intelligence (like object detection, voice recognition, and sentiment analysis all in one), TinyML devices will struggle.

You might need to:

- Use multiple microcontrollers (adds cost/complexity)

- Design clever, composable architectures where TinyML handles edge tasks, while cloud AI handles the heavy lifting.

- Keeping Models Updated

Once you flash a model onto a device, updating it isn’t as seamless as updating a cloud service.

You’ll need to:

- Plan for OTA (Over-the-Air) updates if your device supports it.

- Or manually update firmware (which isn’t fun at scale).

For apps that require regular model updates (like evolving customer preferences), this could become a bottleneck unless planned well.
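Whatever update channel you choose, the device should verify an update before installing it. Here is a minimal sketch of such a check, assuming the server publishes a small manifest with a version number and a SHA-256 checksum next to each model. The manifest format and `should_install` are hypothetical, not part of any specific OTA system.

```python
import hashlib

def should_install(current_version, manifest, payload):
    """Accept an update only if it is newer and its checksum matches."""
    if manifest["version"] <= current_version:
        return False
    return hashlib.sha256(payload).hexdigest() == manifest["sha256"]

new_model = b"pretend these are the bytes of a new model"
manifest = {"version": 3, "sha256": hashlib.sha256(new_model).hexdigest()}

print(should_install(2, manifest, new_model))         # newer and intact
print(should_install(2, manifest, new_model + b"!"))  # corrupted download
print(should_install(3, manifest, new_model))         # already up to date
```

A checksum catches corrupted downloads; protecting against tampering would additionally require a cryptographic signature, which is beyond this sketch.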

Yes, TinyML has its hurdles.

But here’s the silver lining: most of these challenges are design problems, not dealbreakers. With careful planning, clever engineering, and realistic expectations, you can build incredibly powerful real-time edge AI features that are fast, private, and battery-friendly.

TinyML is a good fit when:

- You need real-time responses

- You want offline intelligence

- You care about battery life and data privacy

When Should You Use TinyML in a Mobile App? (And When You Shouldn’t)

Okay, so you are probably thinking:

“Is TinyML perfect for my app idea, or should I just stick with cloud AI?”

Great question. Let’s go through it together.

TinyML is not a one-size-fits-all solution. Sometimes, it is the right superpower. Other times, it is like using a screwdriver when you actually need a hammer.

Here is how to tell the difference.

Use TinyML When…

- You Need Lightning-fast Reactions (no internet lag)

If your app needs to react immediately, TinyML is your best friend.

- Example: A fitness app that counts pushups in real time.

- Example: A safety app that detects sudden sounds (such as glass breaking) and sends alerts.

No waiting for data to upload. No cloud round-trips.

It all happens right there on the device; milliseconds matter.

- You Want Offline Functionality

If your users are often in places with poor or no internet (think hiking trails, factories, remote areas), cloud AI is a no-go.

TinyML works completely offline.

- Example: A language learning app that checks your pronunciation without needing Wi-Fi.

- Example: A wildlife tracker that classifies bird calls in remote forests.

- You’re Prioritizing Privacy

TinyML keeps all data processing on the device.

This is critical if your app handles sensitive information, like:

- Health diagnosis apps

- Kids’ educational toys

- Security systems

Raw data never has to leave the device, which can make GDPR and privacy compliance much easier.

- Battery Life is Critical

TinyML models are designed for ultra-low power.

If you are building wearables, IoT gadgets, or mobile apps where every bit of battery counts, TinyML can help extend battery life dramatically.

- The Task is Narrow & Well-Defined

TinyML excels at doing one job well.

- Detect a specific keyword.

- Recognize a simple gesture.

- Monitor a specific environmental condition.

If your feature is narrowly scoped, TinyML is a perfect fit.

Avoid TinyML When…

- You Need Complex or Large-Scale Intelligence

If your app needs to understand natural language in depth, analyze large images, or perform tasks like sentiment analysis across massive datasets, TinyML will struggle.

These tasks need the heavy lifting of cloud AI or LLMs (Large Language Models).

- Frequent Model Updates Are Required

If your AI models need to be retrained and updated frequently (like recommendation engines or evolving user personalization), managing this on TinyML devices becomes messy.

Cloud AI allows seamless updates.

TinyML updates require careful OTA infrastructure or manual firmware updates.

- You Need Multi-Tasking AI Features

TinyML is great for single-purpose models.

But if your app needs to do several AI tasks simultaneously (e.g., speech recognition, object detection, and intent analysis), then you will hit the hardware limits fast.

In such cases, a hybrid approach works better:

- TinyML for edge sensing tasks.

- Cloud AI for the complex, compute-heavy tasks.
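In code, the hybrid split can be as simple as a routing table. The sketch below is one possible policy, not a prescribed design: the task names, `ON_DEVICE_TASKS`, and `choose_backend` are hypothetical.

```python
# Hypothetical task names that the on-device TinyML models can handle.
ON_DEVICE_TASKS = {"wake_word", "gesture", "sound_event"}

def choose_backend(task, online):
    """Send edge sensing tasks to the device and everything else to the cloud."""
    if task in ON_DEVICE_TASKS:
        return "device"  # works with or without a connection
    return "cloud" if online else "unavailable"

print(choose_backend("wake_word", online=False))
print(choose_backend("summarize_text", online=True))
print(choose_backend("summarize_text", online=False))
```

The useful property is that the on-device features never depend on connectivity, while the heavier features can degrade gracefully when the user is offline.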

| Use TinyML When… | Avoid TinyML When… |
|---|---|
| You need instant, offline AI responses | You need complex, large-scale AI tasks |
| Data privacy is a top concern | Frequent model updates are required |
| Battery life optimization is critical | You need multi-tasking, high-capacity AI models |
| The AI task is simple and well-scoped | Heavy LLMs or large image processing are needed |

Conclusion

So there you have it, TinyML might be “tiny” in size, but it’s huge in potential.

It’s not about building the next AI supercomputer. It’s about creating smart, useful features that work quietly, right on your device, without the need for a constant internet connection.

Sure, it has quirks and limitations, but that’s part of the fun. With a little creativity (and patience), you can build AI that is fast, efficient, and almost magical for users.

TinyML isn’t the future. It’s happening now.

And you’re just one project away from being part of it.

FAQs

- Is TinyML the same as Edge AI?

Not exactly! TinyML is a subset of Edge AI. It specifically focuses on running machine learning models on ultra-low-power, tiny devices like microcontrollers. Edge AI can include bigger edge devices too, like smartphones and IoT hubs.

- Can I run LLMs (like ChatGPT) on TinyML devices?

TinyML devices can’t run large language models (LLMs) directly; they’re too heavy. But you can build hybrid systems where TinyML handles local tasks, and complex LLM processing happens in the cloud.

- What are popular TinyML frameworks for beginners?

For beginners, TensorFlow Lite for Microcontrollers and Edge Impulse Studio are great starting points. They make it easier to train, optimize, and deploy models on small devices without diving deep into code.

- Do I need coding experience to build TinyML projects?

Basic coding knowledge (like using the Arduino IDE) definitely helps, but many platforms now offer no-code/low-code TinyML workflows, especially for data collection and model training.

- Where is TinyML used today?

You’ll find TinyML in voice wake-words, fitness trackers, smart home sensors, and even in earbuds for noise cancellation.