Introduction

Edge AI isn’t just modernizing our processes—it’s quietly powering some of the smartest mobile experiences you use every day.

From instant language translation on your smartphone to AR filters that track your face in real time, Edge AI mobile apps are transforming how we interact with devices, right in the palm of our hands, without needing constant access to the cloud.

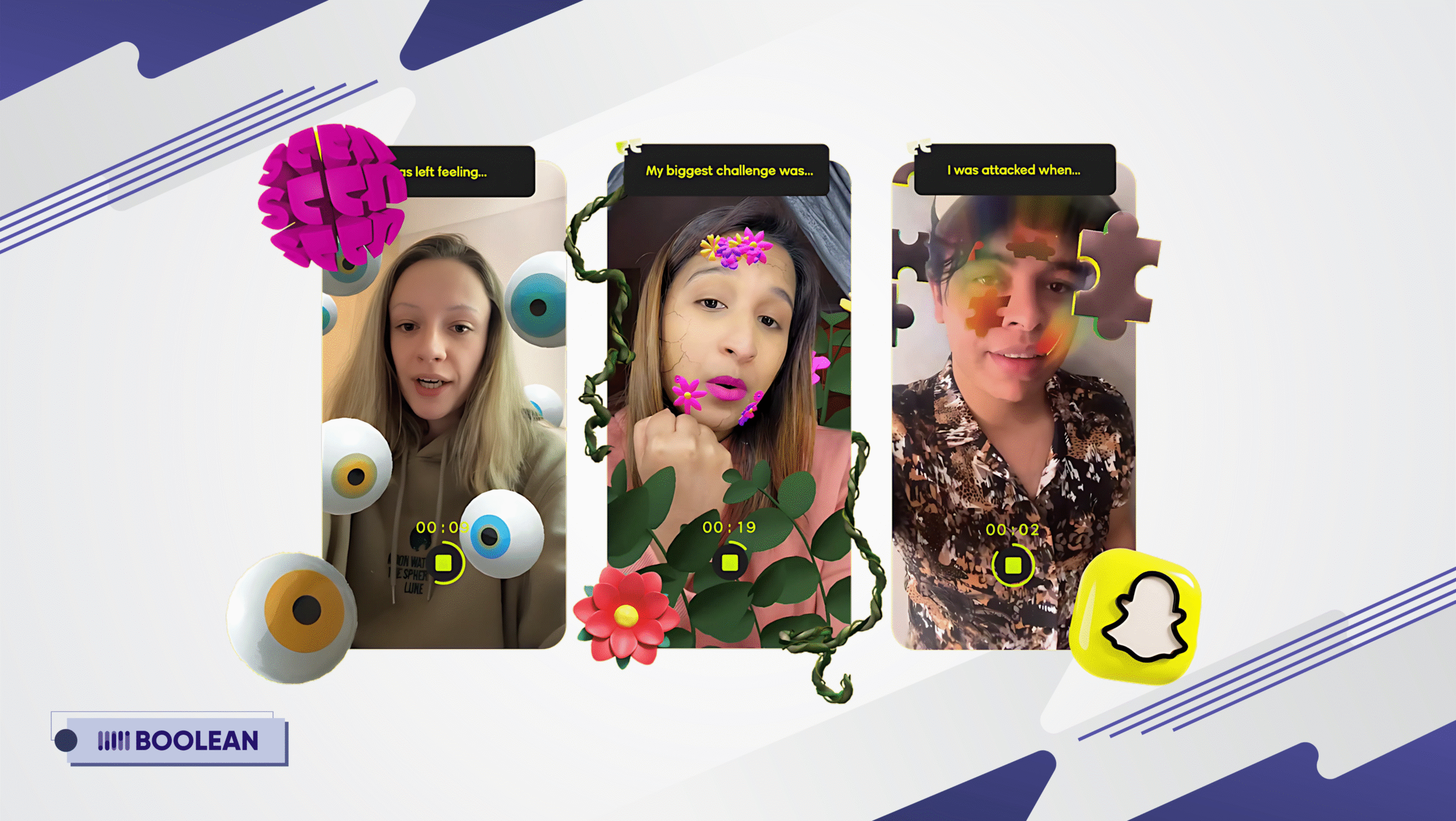

A great example? Snapchat’s AR lenses.

They didn’t just rely on cloud servers—they leveraged Edge AI to process face filters in real time, directly on users’ phones.

The result?

- Over 300 million daily active users (many using AI filters).

- Near-zero latency (even with complex effects).

- No privacy headaches (facial data never leaves the device).

And this is just the beginning.

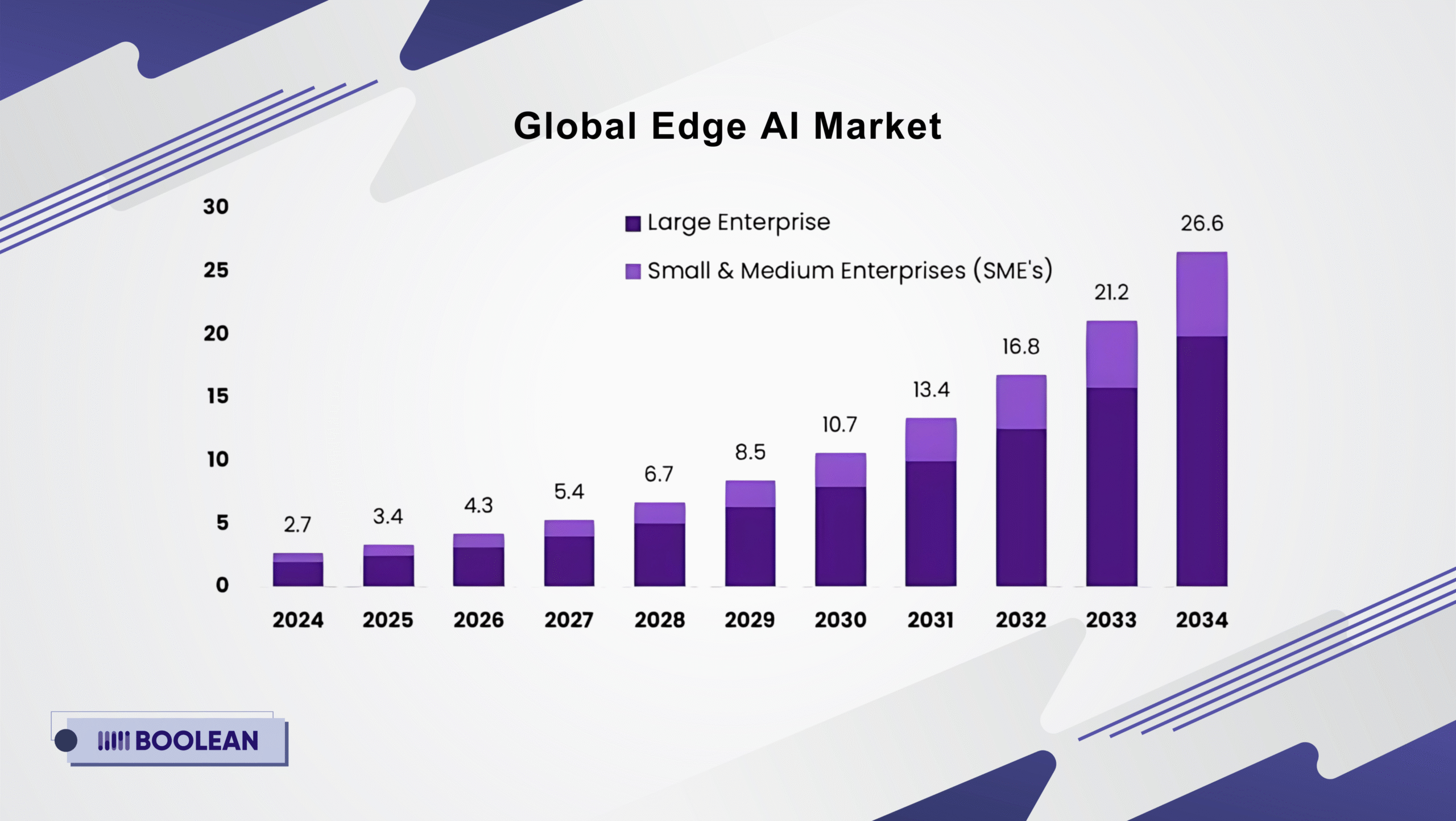

The Global Edge AI Market is exploding—expected to hit $26.6 billion by 2034, up from $2.7 billion in 2024 (growing at 25.70% CAGR).

This isn’t just about cool tech. It’s a movement.

Just like great ecommerce websites increase conversion through intuitive design, mobile developers are now leveraging real-time AI processing to elevate user experience, boost engagement, and unlock features that weren’t possible just a few years ago.

Think about it:

- No waiting for a cloud server to process data

- No privacy risks from constantly uploading sensitive information

- No internet? No problem—on-device AI still runs smoothly

Whether you’re building a fitness app that detects posture, a security app with real-time facial recognition, or a retail app using smart visual search, Edge AI applications are opening up new frontiers.

In this blog, we’re diving deep into:

- What makes real-time Edge AI so powerful in mobile

- The best Edge AI frameworks for mobile development

- Real-world Edge AI use cases that are already making waves

- And how to overcome challenges in AI integration for mobile apps

Let’s decode the future of AI in mobile development—and show you how to build smarter, faster, and more private apps with Edge AI.

What is Edge AI?

Edge AI changes how mobile apps run artificial intelligence: the work happens on the device instead of in the cloud.

Rather than sending raw data to distant servers for interpretation, an Edge AI app processes inputs such as camera frames directly on your smartphone in real time. That leads to quicker, more private responses and less dependence on a network connection.

Here’s why Edge AI is so compelling for mobile apps

Unlike traditional cloud-based AI, Edge AI mobile apps deliver:

- Lightning-fast performance (No lag—ideal for real-time AI applications like video filters or voice assistants).

- Enhanced privacy (Data stays on-device, critical for health apps or AI-powered security cameras).

- Offline capabilities (Works without internet—perfect for travel apps or on-device AI translations).

The AR Zone App, for example, uses AI to enhance augmented reality features without depending on high-speed internet.

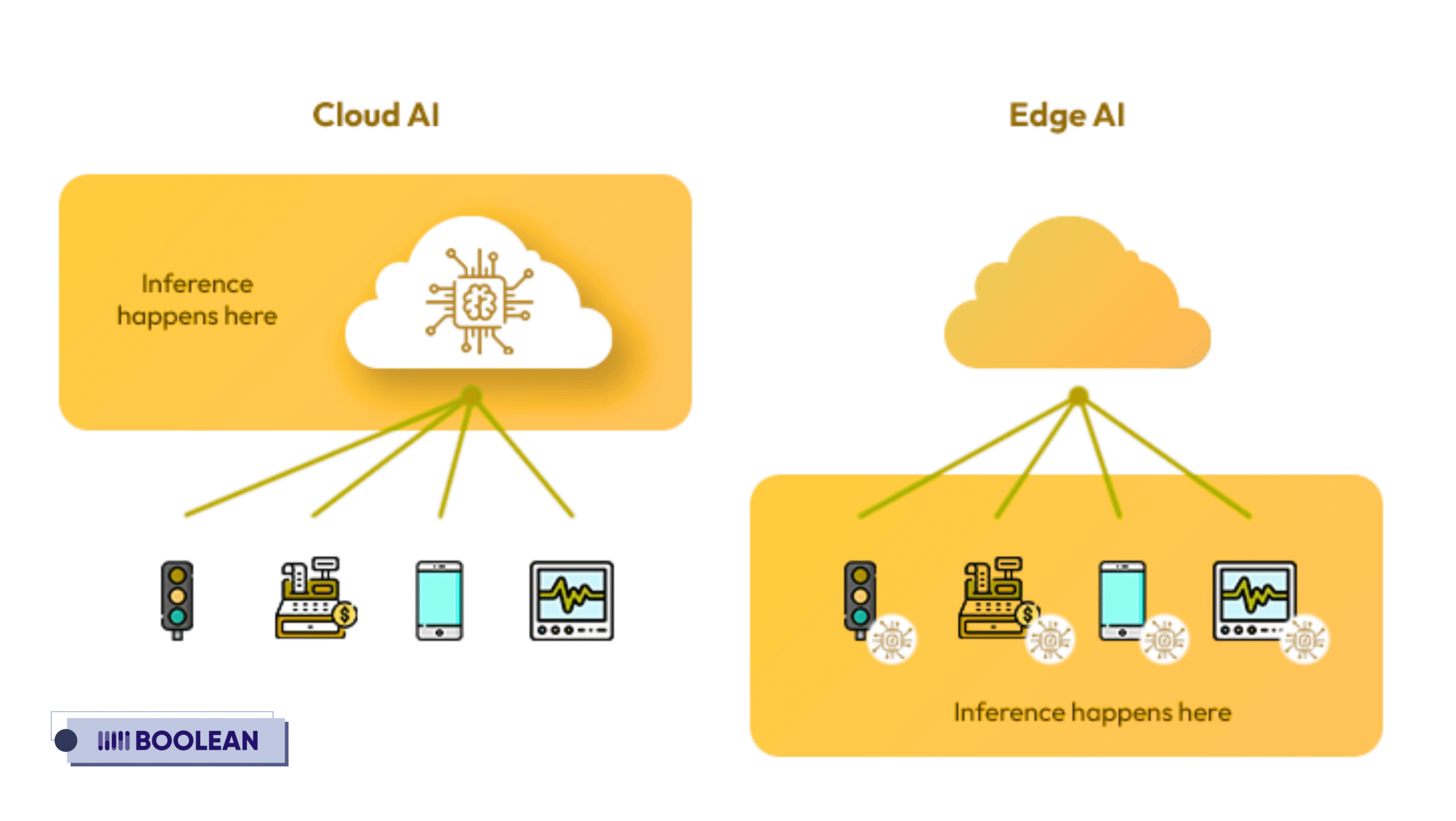

Edge AI vs. Cloud AI: What’s the Difference?

| Feature | Edge AI (On-Device) | Cloud AI (Server-Based) |
| --- | --- | --- |
| Speed | Instant (real-time edge AI) | Delay (network-dependent) |
| Privacy | Data never leaves the device | Risk of breaches |
| Offline Use | Fully functional | Requires internet |
| Cost | Lower long-term (no server fees) | High cloud compute costs |

Where You’ve Already Seen Edge AI in Action

Some of the most popular Edge AI applications include:

- Snapchat’s AR Lenses (face tracking and effects run on-device; mobile AI frameworks like TensorFlow Lite target this kind of workload).

- Google’s Live Translate (Works offline via on-device AI models).

- Face ID on iOS (face matching runs on-device, using Apple’s Neural Engine and Secure Enclave).

Want to see more cutting-edge examples? Check out our guide on AR and VR Trends in Mobile Apps—where Edge AI meets immersive tech.

Mobile Real-Time Edge AI

You can use Edge AI frameworks (like PyTorch Mobile or ML Kit) to:

- Optimize AI models (shrinking them for phones via quantization).

- Leverage hardware accelerators (NPUs/GPUs for real-time AI processing).

- Integrate with sensors (cameras, mics—key for AI mobile apps).

For a deeper dive into tools, explore our list of Top AI Tools for Android App Development—featuring the best SDKs for Edge AI integration.

How Real-Time Edge AI Works in Mobile Environments

Consider a mobile app that detects your mood from your facial expressions, or one that translates your speech instantly while offline.

That experience doesn’t depend on a cloud server miles away. It is powered by real-time Edge AI running on your mobile device.

What Is Real-Time Edge AI?

Put simply, Edge AI mobile applications process data locally, on the device itself (this is often called on-device AI).

Rather than sending your data to the cloud for processing, these applications make decisions in real time, right where the data is created. This reduces latency and privacy risk, and it lets the app keep working without a network connection.
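
One rough way to make "real time" concrete is a per-frame time budget: at a given frame rate, every stage of the pipeline has to finish before the next frame arrives. The sketch below uses made-up stage timings (the 18 ms inference and 80 ms network round trip are illustrative assumptions, not measurements) to show why a network hop can break the budget for a camera feature.

```python
def frame_budget_ms(fps: int) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def fits_budget(stage_ms: dict[str, float], fps: int) -> bool:
    """True if the summed per-frame stage times fit inside the frame budget."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame timings for an on-device camera feature.
on_device = {"capture": 4.0, "inference": 18.0, "render": 6.0}

# Same feature, but with an assumed 80 ms network round trip replacing local inference.
via_cloud = {"capture": 4.0, "network_round_trip": 80.0, "render": 6.0}

print(f"Budget at 30 fps: {frame_budget_ms(30):.1f} ms")
print("On-device fits:", fits_budget(on_device, fps=30))
print("Cloud fits:", fits_budget(via_cloud, fps=30))
```

In practice you would replace the hard-coded numbers with timings profiled on your target devices.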

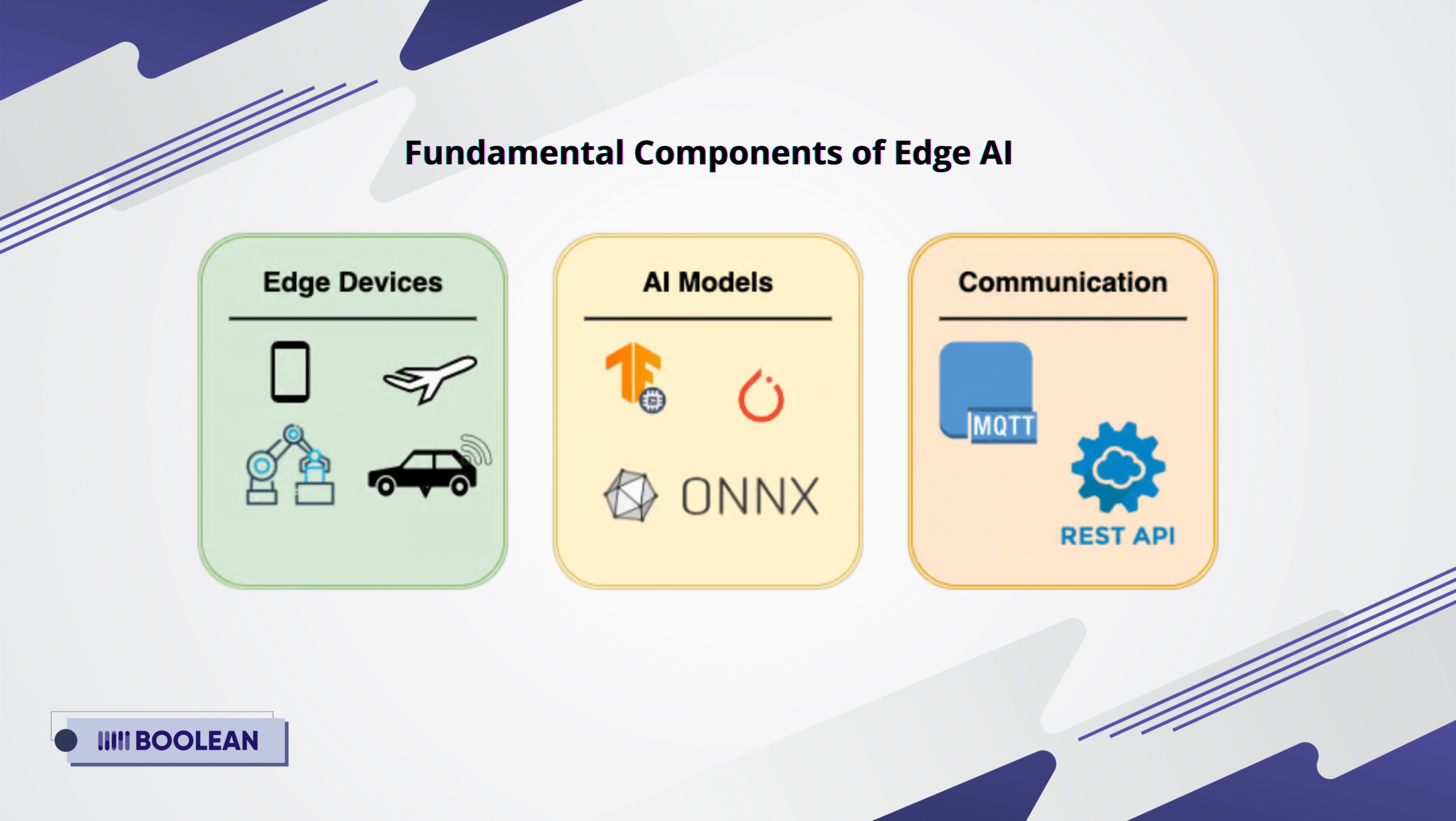

Fundamental Components of Edge AI

To appreciate how this all works, let’s examine the three key components of Edge AI:

- Edge Devices: These are the mobile phones, tablets, wearables, or IoT devices where the AI processing takes place. With the chipsets in today’s smartphones, on-device AI processing is not just possible but efficient.

- AI Models: These are the brains of the operation: trained algorithms for tasks such as image recognition, natural language understanding, or sound classification. In mobile AI app development, these models are optimized for speed and low power use.

- Communication: Although most processing takes place locally, some applications still need to sync data or fetch updates from the cloud. This hybrid approach lets Edge AI applications combine real-time local processing with periodic connectivity.

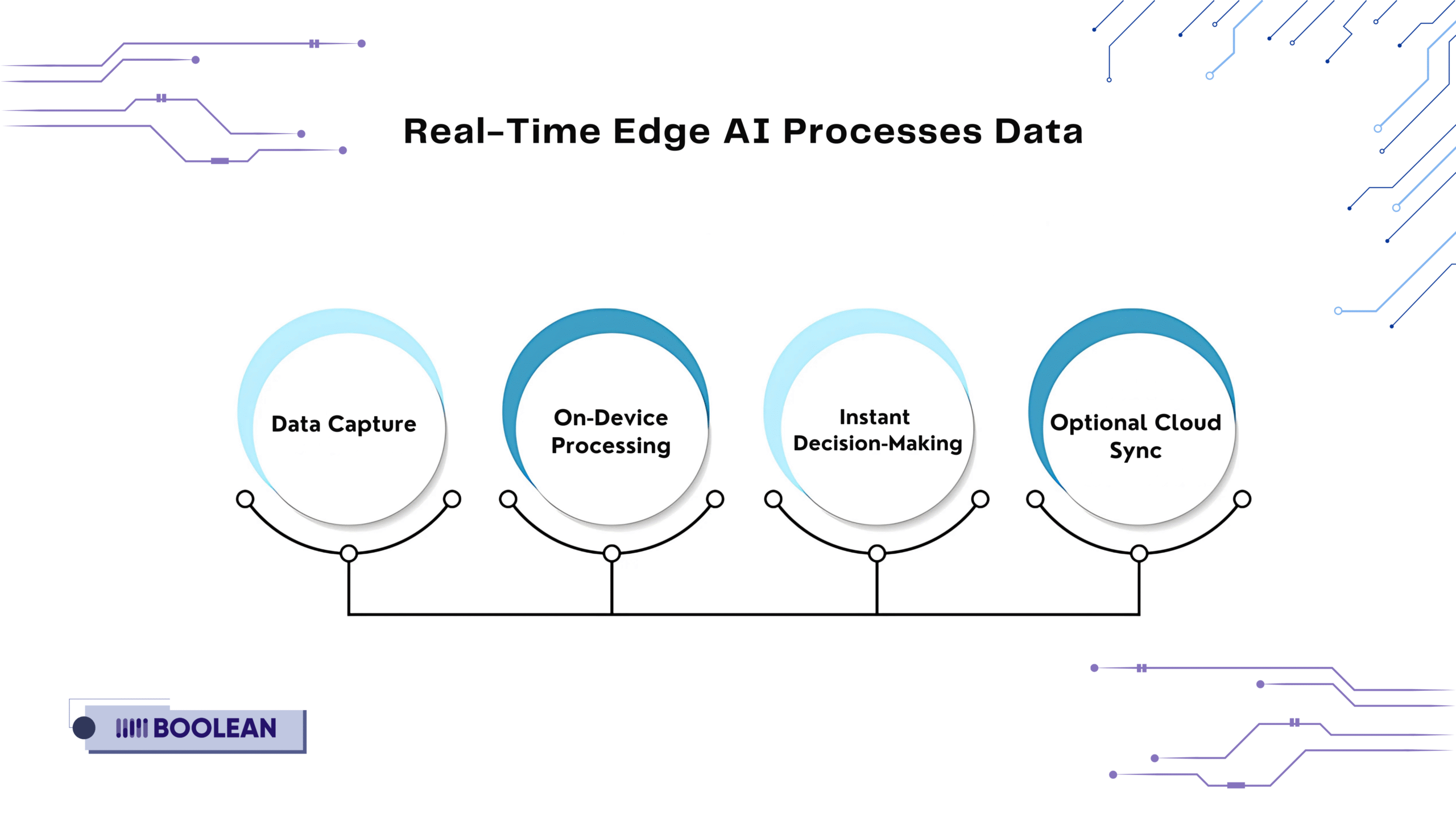

Step-by-Step: How Real-Time Edge AI Processes Data

Let’s say you’re using a fitness app with posture detection. Here’s what happens behind the scenes:

Data Capture

- Your phone’s camera records your movements.

On-Device Processing

- The Edge AI framework (like ML Kit) analyzes the video feed directly on your phone—no internet needed.

Instant Decision-Making

- The AI detects slouching and alerts you in real-time (thanks to low-latency AI processing).

Optional Cloud Sync (If Required)

- Summary data (e.g., “You slouched 5x today”) may sync to the cloud later, but raw video stays private.
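
The four steps above can be sketched in a few lines. This is a simplified illustration, not how any particular framework works: the `Frame` class, the `torso_angle_deg` feature, and the 20-degree threshold are all hypothetical stand-ins for what a real pose model would produce. The point is the data flow, where raw frames are consumed locally and only a small summary is ever eligible for sync.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """Stand-in for one camera frame; a real app would hold pixel data."""
    timestamp_s: float
    torso_angle_deg: float  # hypothetical feature a pose model might output


SLOUCH_THRESHOLD_DEG = 20.0  # assumed cutoff, not taken from any framework


def is_slouching(frame: Frame) -> bool:
    """On-device processing: a trivial rule standing in for a real model."""
    return frame.torso_angle_deg > SLOUCH_THRESHOLD_DEG


def run_session(frames: list[Frame]) -> dict:
    """Process frames locally and return only a summary suitable for syncing."""
    alert_times = []
    was_slouching = False
    for frame in frames:                      # Step 1: data capture
        slouching = is_slouching(frame)       # Step 2: on-device processing
        if slouching and not was_slouching:   # Step 3: alert once per new slouch
            alert_times.append(frame.timestamp_s)
        was_slouching = slouching
    # Step 4: the optional sync payload holds counts and times, never raw frames.
    return {"slouch_count": len(alert_times), "alert_times_s": alert_times}


frames = [Frame(0.0, 5.0), Frame(1.0, 25.0), Frame(2.0, 27.0),
          Frame(3.0, 8.0), Frame(4.0, 22.0)]
summary = run_session(frames)
print(summary)
```

Alerting only on the transition into a slouch avoids firing a notification on every frame while the user stays in the same position.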

Why This Matters for Developers

If you’re diving into AI app development, understanding these components is crucial. Ask yourself:

- Does your app need to work in real time?

- Should it function offline?

- Are privacy and speed essential to the user experience?

If the answer is yes, then Edge computing in mobile apps is likely your best path forward.

With the right combination of hardware, software, and models, real-time AI applications can go from vision to reality—right in the palm of your hand.

Popular Edge AI Frameworks for Mobile App Development

If you’re looking to bring real-time AI into your mobile applications, choosing the right tools won’t guarantee success, but it’s half the battle.

Whether your goal is to develop smart assistants, AR experiences, or intelligent camera features, Edge AI frameworks help you build a mobile app that is fast, efficient, and ready for future needs.

With Edge AI, processing happens on the device, so your users get real-time responses, offline use, and better privacy because their data doesn’t have to go to the cloud.

This shift toward edge computing in mobile applications is being driven by a growing ecosystem of mobile AI frameworks and AI SDKs.

Why Frameworks Matter in Mobile AI

For developers diving into AI app development, these frameworks provide pre-built tools and optimized environments for training, deploying, and running AI mobile app features directly on the device.

They’re the bridge between your creative ideas and functional, real-time experiences.

Let’s take a look at some of the most popular and effective Edge AI frameworks for mobile development:

1. TensorFlow Lite

- Best for: Cross-platform on-device AI (Android & iOS).

- Key Features:

  - Supports quantized models (smaller, faster AI).

  - Hardware acceleration (NPU/GPU) for real-time edge AI.

  - Pre-trained models for vision, text, and speech.

- Use Case: Apps like Google Translate (offline mode).

- Optimization Tip: Pair with Mobile App Performance Optimization guides for smoother execution.

2. PyTorch Mobile

- Best for: Developers who want flexibility in AI app development.

- Key Features:

  - Dynamic model adjustments (unlike static TFLite).

  - Easy integration with Python-based AI frameworks.

  - Strong community support.

- Use Case: AI-powered social media filters.

- Pro Tip: Combine with Free APIs for Mobile Apps for added features.

3. Core ML (Apple)

- Best for: High-performance AI mobile apps on iOS.

- Key Features:

  - Optimized for Apple’s Neural Engine (blazing-fast mobile AI processing).

  - Supports vision, NLP, and speech models.

  - Easy integration with Swift.

- Use Case: On-device image classification and text analysis in iOS apps (for example, through Apple’s Vision and Natural Language frameworks).

- ASO Note: Optimize Core ML apps for discoverability with App Store Optimization.

4. ML Kit (by Google)

- Best for: Quick AI integration in mobile apps without heavy coding.

- Key Features:

  - Pre-built APIs for face detection, barcode scanning, etc.

  - Works offline (Edge computing in mobile apps).

  - Can be paired with cloud-based APIs (offered separately through Firebase ML) when needed.

- Use Case: Smart shopping apps (scan products offline).

5. ONNX Runtime

- Best for: Developers using multiple AI frameworks.

- Key Features:

  - Runs models from TensorFlow, PyTorch, etc.

  - Cross-platform (Android, iOS, Windows).

  - Optimized for real-time AI applications.

- Use Case: Autonomous drone navigation.

Choosing the Right Framework

| Need | Best Framework |
| --- | --- |
| Cross-platform | TensorFlow Lite |
| iOS-only speed | Core ML |
| Quick prototyping | ML Kit |
| Flexible models | PyTorch Mobile |

Key Use Cases of Edge AI in Mobile Applications

Edge AI mobile apps offer more than speed and privacy advantages; they solve real problems.

From personalized user experiences to intelligent automation, Edge AI applications are redefining what’s possible on mobile.

Here are the most impactful Edge AI applications today:

- Augmented Reality (AR): Snapchat Filters, IKEA Place

AR may sound futuristic, but Edge AI frameworks have already made it mainstream in many of the apps you use on your phone.

Take Snapchat, for example. Its face filters and AR lenses aren’t just fun—they’re built on sophisticated mobile AI frameworks that recognize facial features and apply real-time effects in milliseconds.

This kind of responsiveness is only possible because the AI models run directly on your device, making it a perfect example of Edge AI mobile apps in action.

Another brilliant use case is IKEA Place. This app lets users visualize furniture in their homes using AR.

Here, Edge AI applications play a key role in detecting spatial dimensions and aligning virtual furniture with real-world surroundings, all without sending video data to the cloud. The result? A seamless, private, real-time experience.

These AR-driven apps represent just one category of innovation.

But the common thread? Each one uses Edge AI frameworks to process data locally, respond instantly, and enhance user experiences in powerful ways.

- Healthcare & Fitness Apps: Personalized Wellness on the Edge

From monitoring heart rate to analyzing workout form, Edge AI mobile apps are reshaping how we approach personal health and wellness.

With on-device AI being embedded into fitness and health platforms, developers are creating user experiences that are smarter, faster, and more private.

Apps like Apple Fitness+, WHOOP, and Freeletics use mobile AI frameworks to monitor motion, identify patterns, and give feedback in real time, in many cases without needing an internet connection.

This means whether you are counting reps during a workout or identifying irregular heart rhythms, the features are enabled by real-time AI processing on-device.

This is where Edge AI applications truly shine.

With AI models trained for movement analysis, biometrics, or even mental health tracking, users benefit from instant insights that aren’t delayed by network lags or privacy concerns. It’s not just smart—it’s empowering.

Some standout Edge AI use cases in health & fitness include:

- Posture correction and form analysis during exercise

- Sleep quality tracking with audio and motion sensing

- Heart rate and stress monitoring with on-device processing

- Real-time injury prevention and recovery suggestions

These apps tap into lightweight, efficient Edge AI frameworks that are designed for wearable tech and smartphones. With local processing on optimized models, users keep battery drain low and privacy high, two essentials in the healthcare space.

As AI in mobile development continues to grow, expect more fitness and wellness apps to adopt AI integration in mobile apps through robust AI frameworks and specialized AI SDKs.

- Smart Cameras & Security: Intelligent Vision at the Edge

One of the most powerful Edge AI use cases lies in turning everyday mobile devices into intelligent vision systems.

In smart cameras and mobile security apps, Edge AI mobile apps are enabling real-time analysis of video feeds, facial recognition, object tracking, and even anomaly detection—all without sending sensitive footage to the cloud.

This is made possible through on-device AI that processes video data locally, helping users maintain privacy while gaining instant results.

Whether it’s a home security app identifying a familiar face or a mobile surveillance tool flagging unusual activity, these apps showcase the transformative potential of Edge AI applications.

How It Works

These capabilities are built using specialized Edge AI frameworks and mobile AI frameworks that support computer vision models optimized for performance on mobile chipsets. By combining real-time AI processing with edge computing power, users benefit from:

- Instant motion or intruder alerts

- Offline video analytics (no internet needed)

- Face, object, and license plate recognition

- Enhanced user authentication through facial biometrics
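
To give a feel for what "instant motion alerts" with offline analytics can look like at its simplest, here is a frame-differencing sketch. This is a classical stand-in, not what production security apps necessarily ship (they typically use trained vision models); the tiny 4x4 grayscale frames and both thresholds are illustrative assumptions. Everything runs locally, so no footage has to leave the device to raise an alert.

```python
def changed_fraction(prev, curr, pixel_delta=25):
    """Fraction of pixels whose brightness changed by more than pixel_delta."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total


def motion_detected(prev, curr, min_fraction=0.10):
    """Flag motion when enough of the frame changed between two captures."""
    return changed_fraction(prev, curr) >= min_fraction


# Tiny hypothetical 4x4 grayscale frames (0-255); real frames are far larger.
empty_room = [[10, 10, 10, 10] for _ in range(4)]
slight_noise = [[12, 9, 11, 10] for _ in range(4)]
person_enters = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 10, 10],
    [10, 10, 10, 10],
]

print("Noise only:", motion_detected(empty_room, slight_noise))
print("Person enters:", motion_detected(empty_room, person_enters))
```

The per-pixel threshold filters out sensor noise, and the fraction threshold keeps a single flickering pixel from triggering an alert.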

Apps like Nest Cam, Ring, and even built-in smartphone camera software (like Google Pixel’s image enhancement features) rely on AI integration in mobile apps to deliver real-time, intelligent results.

Security-focused apps also take advantage of AI SDKs that support encrypted, on-device video processing.

This not only boosts performance but significantly reduces the risk of data breaches—an essential concern for users today.

As AI in mobile development advances, the ability to embed vision-based intelligence into everyday apps will continue to grow.

Whether it’s a doorbell camera or a baby monitor, the use of AI frameworks is making mobile security smarter, faster, and more reliable.

- Retail & Shopping Apps: Personalized Experiences in Real Time

Shopping has changed from simply looking for products to a seamless, personalized experience from discovery through checkout.

With Edge AI mobile applications, retailers are delivering smarter, faster, and more interactive experiences powered by on-device AI.

In retail and e-commerce apps, Edge AI is used to improve features such as product recommendations, visual search, virtual try-ons, and smart in-store navigation.

Because these features can run independently of the cloud with little latency, they keep working through the sporadic connectivity found in places such as large shopping malls and remote areas.

With Edge AI and mobile AI frameworks, you can run high-performance features on customers’ smartphones and deliver real-time AI processing that users experience as an instant response.

Key Edge AI Use Cases in Retail & Shopping:

Visual Search

- Use your phone camera to scan a product and instantly find similar items online using on-device AI.

Virtual Try-On

- Try on glasses, makeup, or clothes through AR powered by real-time AI applications.

Personalized Recommendations

- Deliver hyper-relevant product suggestions based on behavior, preferences, or past interactions—without ever sending data to the cloud.

Smart Price Tag Scanning & Translation

- Use the camera to scan tags or labels and get real-time pricing, descriptions, or even language translations.

In-Store Navigation

- Guide users to specific aisles or products inside large retail spaces using AI-driven location intelligence.
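
Visual search and on-device recommendations often come down to comparing embedding vectors. The sketch below assumes an on-device model has already turned the camera image into a small vector, and that a compact catalog of product vectors is cached on the phone; the three-dimensional vectors and product names are invented for illustration (real embeddings have hundreds of dimensions).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query, catalog, top_k=2):
    """Return the names of the top_k catalog items closest to the query vector."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in scored[:top_k]]


# Hypothetical catalog embeddings cached on the device.
catalog = {
    "red sneaker": [0.9, 0.1, 0.0],
    "red boot": [0.7, 0.3, 0.1],
    "blue mug": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of the photo the user just took.
query = [0.8, 0.2, 0.0]

print(most_similar(query, catalog))
```

A linear scan like this is fine for a small cached catalog; larger catalogs usually call for an approximate nearest-neighbor index.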

Apps like Amazon, Zara, and Sephora are already leveraging these Edge AI use cases, proving that smart commerce isn’t just the future—it’s happening now.

By integrating AI SDKs and choosing the right AI frameworks, developers are building AI mobile apps that not only sell—but serve.

- Voice Assistants & Translation: Real-Time Interaction Without the Cloud

When you say “Hey Siri” or ask Google Assistant to set a reminder, you are experiencing Edge AI mobile applications in action.

What seems like a simple voice query is a complicated process, and on-device AI with real-time processing handles part of it (such as detecting the wake phrase) so that results come back quickly and less data has to go to the cloud.

This matters even more for voice assistants and translation applications, where speed, privacy, and offline features are paramount.

With on-device AI and maturing Edge AI frameworks, mobile applications can now recognize speech, translate languages, and even detect intent in real time.

Powerful Edge AI Use Cases in Voice & Translation:

Offline Voice Commands

- Control your phone, smart home devices, or apps using speech, even without an internet connection—powered by on-device AI.

Real-Time Translation

- Apps like Google Translate now offer live translation of speech or text, with some features processed locally for faster, more secure performance.

Voice-to-Text Messaging

- Convert your speech into text messages or notes instantly, using on-device models that can adapt to your voice over time.

Language Learning

- Apps such as Duolingo and iTranslate use real-time AI applications to provide pronunciation feedback and contextual translation exercises.

Accessibility Features

- Enable speech commands or real-time captions for users with visual or hearing impairments, making apps more inclusive with AI integration in mobile apps.

These features are powered by optimized AI frameworks and lightweight AI SDKs, allowing developers to build responsive and intelligent apps that work anytime, anywhere.

With AI in mobile development moving toward more privacy-first solutions, Edge AI applications in voice tech are becoming the standard, not the exception.

Whether you’re building a travel app, a smart productivity tool, or an assistive tech solution, voice-driven interactions powered by Edge computing in mobile apps can elevate the user experience dramatically.

Edge AI Deployment Models: Choosing the Right Hardware & Software

Deploying Edge AI in mobile apps isn’t just about algorithms—it’s about finding the perfect hardware-software combo to keep your app fast, efficient, and user-friendly.

Why does this matter?

A mismatched setup can lead to:

- Laggy real-time AI processing (frustrated users).

- Battery drain (unoptimized hardware).

- Costly over-engineering (overspending on unnecessary power).

Let’s break down your Edge AI deployment options so you can build smarter.

- Edge AI Hardware: Powering On-Device Intelligence

Your smartphone isn’t just a camera + screen—it’s packed with AI-accelerated chips designed for Edge AI mobile apps. Here’s what’s under the hood:

Key Hardware Options for Mobile AI

Smartphone NPUs (Neural Processing Units):

- Apple’s Neural Engine (Core ML apps).

- Qualcomm’s Hexagon DSP (Android AI).

- Google Tensor chips (Pixel’s on-device AI).

Dedicated Edge AI Chips (For advanced apps):

- Google Coral Edge TPU (ultra-low-power vision AI).

- NVIDIA Jetson (for high-performance mobile robotics).

- Intel Movidius Myriad X (drones/smart cameras).

Pro Tip:

- Need long battery life? Prioritize chips with high TOPS-per-watt efficiency (like Coral TPU).

- Building real-time AR? Leverage the GPU + NPU combo in flagship phones.

- Edge AI Software: Frameworks & Management Tools

Even the best hardware needs the right Edge AI frameworks and tools. Here’s what developers use:

Top Software Solutions for Edge AI

For Model Deployment:

- TensorFlow Lite (Android/iOS optimized models).

- Core ML Tools (Convert PyTorch/TF → Apple-friendly).

- ONNX Runtime (Cross-platform AI execution).

For Edge Device Management:

- Azure IoT Edge (Microsoft’s cloud-to-edge pipeline).

- Google Distributed Cloud (Hybrid AI deployments).

- ClearBlade Alef (Enterprise edge AI platform).

Pro Tip:

- Use quantization tools (like the TensorFlow Lite Converter) to shrink models by up to about 4x, for example by storing 32-bit float weights as 8-bit integers.

- For AI app development, start with ML Kit if you need pre-built APIs.
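
The 4x figure for quantization comes from simple arithmetic: a 32-bit float weight takes 4 bytes, while an 8-bit integer takes 1. The sketch below shows a bare-bones symmetric int8 scheme in plain Python to illustrate the idea. It is a teaching example only; real converters add refinements such as per-channel scales and calibration data, and the weight values here are made up.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # guard against an all-zero tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [-1.0, -0.4, 0.0, 0.25, 1.0]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

float32_bytes = 4 * len(weights)  # 4 bytes per float32 weight
int8_bytes = 1 * len(weights)     # 1 byte per int8 weight
size_ratio = float32_bytes / int8_bytes
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("Quantized:", q)
print("Size ratio:", size_ratio)
print("Max round-trip error:", max_error)
```

The round-trip error is bounded by half the scale, which is the accuracy cost you trade for the smaller, faster model.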

Matching Hardware + Software for Your Use Case

| Application | Ideal Hardware | Best Software Framework |
| --- | --- | --- |
| AR Filters | Phone NPU + GPU | TensorFlow Lite / Core ML |
| Offline Translation | CPU + NPU | PyTorch Mobile |
| Smart Security Cam | Coral Edge TPU | ONNX Runtime |

Benefits and Challenges of Real-Time AI in Mobile Applications

When it comes to mobile applications, real-time Edge AI enables game-changing capabilities—but it takes a lot of work to realize this potential.

Let’s examine the benefits and challenges so you can build smarter, faster applications.

Benefits of Real-Time Edge AI

- Lightning-Fast Performance

  - No cloud lag: On-device processing means instant results (critical for AR filters, live translations, or gaming).

  - Example: Snapchat lenses react in under 50 ms, faster than the blink of an eye.

- Enhanced Privacy & Security

  - Data never leaves the device, making it ideal for:

    - Healthcare apps (patient vitals stay private).

    - Banking/finance (fraud detection without exposing transactions).

- Offline Functionality

  - Works in airplane mode, remote areas, or poor connectivity:

    - Google Maps’ offline AI navigation.

    - Real-time language translation without Wi-Fi.

- Cost Efficiency

  - Can reduce cloud server costs by up to 60% (no need for massive data transfers), though actual savings depend on the workload.

- Scalability

  - Edge AI scales with your user base, since every phone handles its own processing.

Key Challenges (And How to Solve Them)

1. Limited Hardware Resources

- Problem: Phones have less power than servers.

- Solution:

  - Use quantized models (e.g., TensorFlow Lite’s INT8 models).

  - Leverage NPUs/GPUs (Apple Neural Engine, Qualcomm Hexagon).

2. Model Accuracy Trade-offs

- Problem: Smaller on-device models may sacrifice accuracy.

- Solution:

  - Hybrid AI (simple tasks on-device, complex ones in the cloud).

  - Federated learning (improve models without raw data).
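
One common way to implement the hybrid approach is confidence-based routing: trust the small on-device model when it is sure, and fall back to a larger cloud model only when it is not (and only when the device is online). The sketch below is a minimal illustration; both "models" are hypothetical stand-in functions and the 0.80 threshold is an assumed value you would tune per feature.

```python
CONFIDENCE_THRESHOLD = 0.80  # assumed cutoff; tune per feature


def tiny_local_model(text):
    """Hypothetical small on-device model: confident only on short inputs."""
    confidence = 0.95 if len(text.split()) <= 3 else 0.40
    label = "greeting" if "hello" in text.lower() else "other"
    return label, confidence


def big_cloud_model(text):
    """Hypothetical larger model that would be reached over the network."""
    return "support_request" if "help" in text.lower() else "other"


def classify_hybrid(text, online):
    """Return (label, where_it_ran), preferring the on-device result."""
    label, confidence = tiny_local_model(text)
    if confidence >= CONFIDENCE_THRESHOLD or not online:
        return label, "on-device"
    return big_cloud_model(text), "cloud"


print(classify_hybrid("hello there", online=True))
print(classify_hybrid("I need help with my order today", online=True))
print(classify_hybrid("I need help with my order today", online=False))
```

Note the offline branch: when there is no connection, the app still answers with the local result rather than failing, which keeps the offline promise intact.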

3. Fragmented Device Ecosystem

- Problem: Not all phones have NPUs or the latest chips.

- Solution:

  - Offer graceful degradation (basic AI on older devices).

  - Detect hardware and adjust models dynamically.
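
Graceful degradation can be as simple as an ordered list of model variants, each with a requirement check, where the last entry runs anywhere. The sketch below is illustrative: the `DeviceProfile` fields, the model names, and the RAM cutoffs are all hypothetical, and a real app would fill in the profile from platform APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceProfile:
    """Hypothetical summary of what the app detected about the device."""
    has_npu: bool
    ram_gb: float


# Hypothetical model variants, ordered from most to least demanding.
MODEL_TIERS = [
    ("full_int8_npu", lambda d: d.has_npu and d.ram_gb >= 6),
    ("small_int8", lambda d: d.ram_gb >= 4),
    ("tiny_cpu", lambda d: True),  # fallback that every device can run
]


def pick_model(device: DeviceProfile) -> str:
    """Return the first (most capable) model variant the device supports."""
    for name, supported in MODEL_TIERS:
        if supported(device):
            return name
    raise RuntimeError("unreachable: the last tier accepts every device")


print(pick_model(DeviceProfile(has_npu=True, ram_gb=8)))
print(pick_model(DeviceProfile(has_npu=False, ram_gb=4)))
print(pick_model(DeviceProfile(has_npu=False, ram_gb=2)))
```

Keeping the tiers in one table makes it easy to add a new variant later without touching the selection logic.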

4. Battery Drain

- Problem: Continuous AI processing can kill battery life.

- Solution:

  - Optimize wake cycles (e.g., only activate AI when the camera is open).

  - Use low-power execution preferences where available (for example, in Android’s Neural Networks API).
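
Optimizing wake cycles usually means two things: never run the model while the feature is inactive, and do not run it on every single frame if a lower rate is good enough. The sketch below shows one possible gate that enforces both; the class name, the 100 ms interval, and the simulated timeline are illustrative assumptions.

```python
class InferenceGate:
    """Decides whether the model should run for a given frame."""

    def __init__(self, min_interval_ms):
        self.min_interval_ms = min_interval_ms
        self._last_run_ms = None

    def should_run(self, now_ms, camera_open):
        if not camera_open:
            return False  # feature inactive: skip inference entirely
        too_soon = (
            self._last_run_ms is not None
            and now_ms - self._last_run_ms < self.min_interval_ms
        )
        if too_soon:
            return False  # throttle: the last result is still fresh enough
        self._last_run_ms = now_ms
        return True


gate = InferenceGate(min_interval_ms=100)

# Simulated timeline: a frame every 33 ms; the camera closes after 300 ms.
frames = [(t, t < 300) for t in range(0, 500, 33)]
runs = [t for t, camera_open in frames if gate.should_run(t, camera_open)]

print(f"Ran inference on {len(runs)} of {len(frames)} frames: {runs}")
```

In this simulated run the model executes on a small fraction of the frames, which is the kind of reduction that translates into battery savings on a real device.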

5. Development Complexity

- Problem: Edge AI requires expertise in ML, mobile dev, and hardware.

- Solution:

  - Start with pre-built SDKs (ML Kit, Core ML).

  - Use no-code tools like Fritz AI for prototyping.

Pro Tips for Balancing Benefits & Challenges

- Test early on low-end devices.

- Monitor performance with tools like Firebase Performance Monitoring.

- Prioritize use cases—not every feature needs real-time AI.

How Boolean Inc. Can Help You Succeed with Real-Time Edge AI Mobile Apps

At Boolean Inc., we simplify the journey of building real-time Edge AI mobile apps.

Whether you’re launching a new AI mobile app or upgrading an existing one, our team helps you harness the power of on-device AI and Edge AI frameworks—efficiently and effectively.

What we offer:

- Custom AI Integration using optimized models for real-time AI processing

- Guidance on choosing the right Edge AI deployment model for your app

- Cross-platform development with leading mobile AI frameworks

- End-to-end support—from concept to launch and beyond

With Boolean Inc., you get a partner who understands both the tech and the user. Let’s build smarter, faster, and more secure mobile experiences—together.

Conclusion

As mobile apps become more intelligent and user expectations rise, Edge AI mobile apps are setting the new standard.

By enabling real-time AI processing directly on devices, developers can deliver faster, more secure, and more responsive experiences without relying on constant cloud access.

From augmented reality and healthcare apps to smart cameras and voice assistants, the possibilities with Edge AI applications are vast—and growing.

Leveraging the right Edge AI frameworks, AI SDKs, and mobile AI frameworks allows you to build powerful, privacy-conscious apps that work seamlessly anytime, anywhere.

Challenges remain, from hardware limitations to model optimization, but AI-powered mobile apps can deliver significant long-term impact. For now, context-aware deployment and thoughtful AI integration into mobile applications can lead to transformative results.

Whether you are just beginning to develop an AI mobile app or actively scaling one, adopting Edge AI means you will be prepared for the next leap of innovation in AI mobile app technology.

FAQs

- What is Edge AI in mobile apps, and why do we need it?

Edge AI enables your mobile app to process data on-device instead of sending it over the internet first, meaning your app can respond quickly, work without internet access, and keep user data private. With Edge AI, you get a more intelligent and efficient way to build a modern app.

- Can Edge AI work if my app does not have an internet connection?

Absolutely! This is one of the biggest advantages of Edge AI. When you use on-device AI, your app can make decisions in real time without requiring any internet connectivity.

- What type of mobile app benefits the most from Edge AI?

Mobile apps in healthcare, security, fitness, AR, and voice assistance benefit the most from Edge AI. Whenever your app relies on real-time AI and instant feedback, Edge AI is going to deliver the most value.

- Is it hard to add Edge AI into an existing mobile app?

Not when you have the right tools. With modern Edge AI frameworks and the assistance of an experienced team like Boolean Inc., adding Edge AI to your app is easier than ever before, even for teams that don’t have experience with AI.

- What is the difference between Edge AI and Cloud AI?

Cloud AI sends data over the internet to be processed on remote servers, while Edge AI runs your models directly on-device. Edge AI is typically faster, more private, and better suited for mobile environments.