Introduction

AI is everywhere.

Have you ever thought about how your phone can recognize your face, translate languages immediately, or suggest the right photo filter?

That’s the magic of AI-driven mobile apps. These apps are everywhere now, quietly making our lives easier and more fun.

But have you noticed that some AI features respond instantly while others keep you waiting? With AI-driven mobile apps, that wait time often comes down to something called inference speed.

Simply put, inference speed is how quickly your app’s AI model makes predictions or decisions based on the data you feed it. Think of asking your phone to recognize a face in a photo or translate a sign in real time.

Here’s the thing: mobile devices are not supercomputers. They are powerful, sure, but they have limits. Your phone needs to balance performance with battery life, manage heat, and juggle several tasks at once.

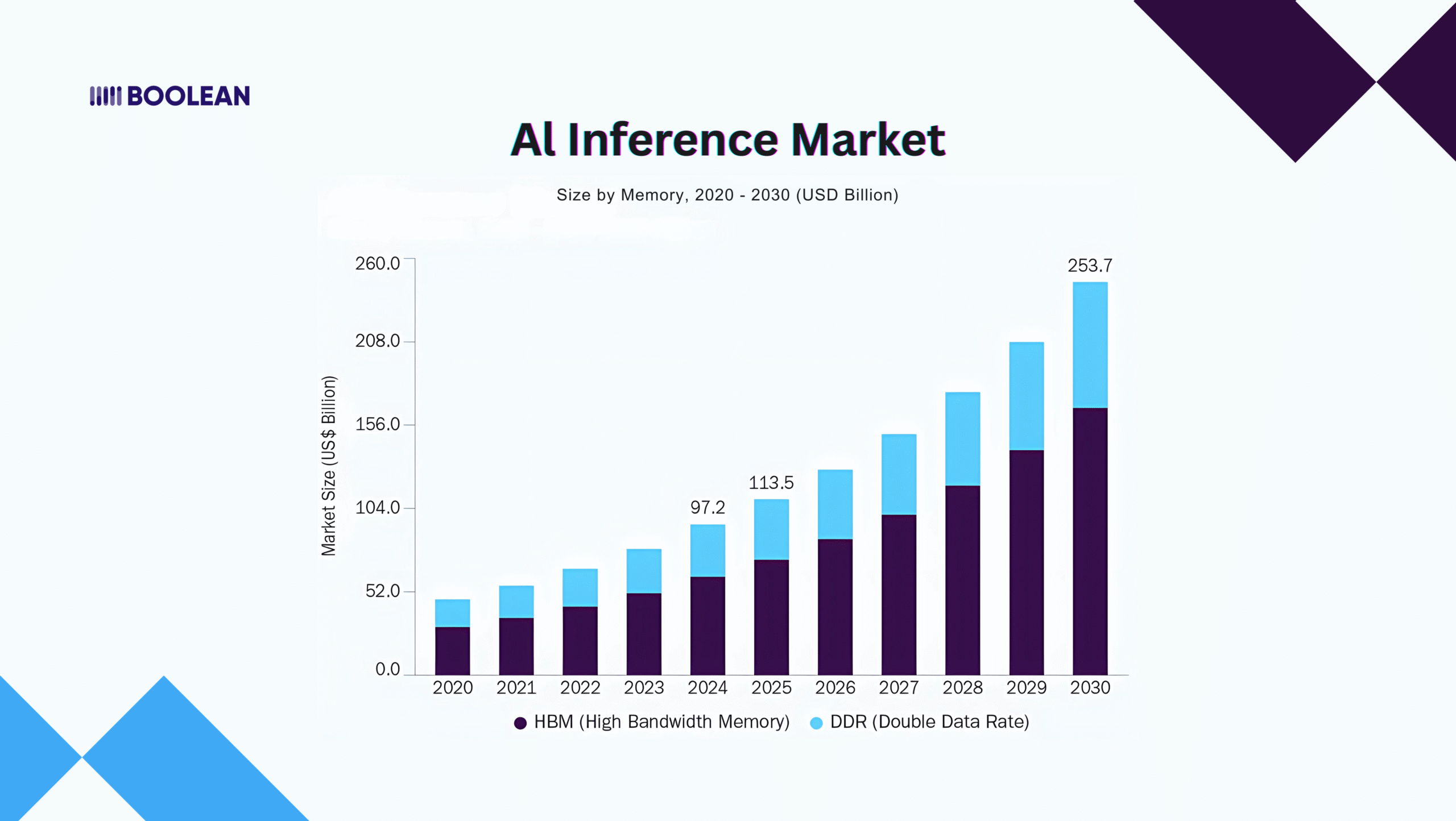

The Global AI Inference Market size was USD 97.24 billion in 2024 and is expected to reach USD 253.75 billion by 2030.

That’s massive growth. And you know what’s driving it? The explosion of AI-driven mobile apps that people actually want to use.

But here’s where it gets tricky.

Users expect instant results. They want their photo filters applied immediately. They need voice assistants to respond without delay.

They expect real-time language translation that keeps pace with the conversation. When inference is slow, users get frustrated.

Battery drain is another killer. Slow inference doesn’t just test patience; it drains batteries faster than a GPS app on a road trip.

Nobody wants an AI feature that turns their phone into a pocket warmer and dies by lunch.

The good news? You can optimize inference speed without giving up the smart features that make your app special. It’s all about knowing the right techniques and understanding the trade-offs.

Ready to speed up your AI-driven mobile apps? Let’s dive in.

Understanding AI Inference on Mobile Devices

Let’s start with the basics. What exactly happens when an AI-driven mobile app makes a prediction?

Inference is like having a really smart assistant living in your phone. You show it something, maybe a photo, some text, or audio, and it uses its training to figure out what you need.

Face unlock? That’s inference. Voice typing? Inference again. Those cool AR filters? You guessed it.

But mobile inference is a different beast than what happens on powerful servers.

Your phone has limited processing power. It’s got maybe 8GB of RAM if you’re lucky. The processor needs to handle everything from your music streaming to background app updates.

And it’s all powered by a battery that needs to last all day.

This is where things get interesting.

You’ve got two main options for running AI inference: on-device or in the cloud. Each has its pros and cons.

- On-device inference means everything happens right on your phone; it’s fast, private, and works offline.

- Cloud inference sends data to powerful servers, processes it there, and sends results back.

If you’re curious about the trade-offs, check out our guide on Real-Time Edge AI vs Cloud Inference.

The challenge with mobile devices goes beyond just raw power.

Memory is precious. Loading a large AI model can eat up hundreds of megabytes, or even gigabytes of RAM. That’s memory your other apps need too.

Then there’s thermal throttling. Run intensive calculations for too long, and your phone heats up. When it gets too hot, it slows itself down to cool off. Your lightning-fast AI suddenly crawls.

Storage matters too. AI models can be huge. Some language models that would work great on a server are simply too big for phones.

That’s why mobile-specific solutions are crucial. Speaking of which, if you’re interested in running language models directly on devices, our article on Building AI-Powered Apps with On-Device LLMs dives deep into practical approaches.

Here’s what makes mobile inference unique:

- Power constraints force you to be clever. You can’t just throw more computing power at the problem.

- Network latency becomes critical if you’re using cloud inference. Even on fast 5G, there’s always some delay.

- Privacy concerns push more developers toward on-device solutions. Nobody wants their personal photos uploaded somewhere just to apply a filter.

- The hardware itself varies wildly. Some phones have dedicated AI chips (Neural Processing Units, or NPUs). Others rely on GPUs or regular CPUs. Your app needs to perform well on all of them.

Understanding these obstacles isn’t meant to discourage you. It’s about being realistic. When you know the challenges, you can design around them.

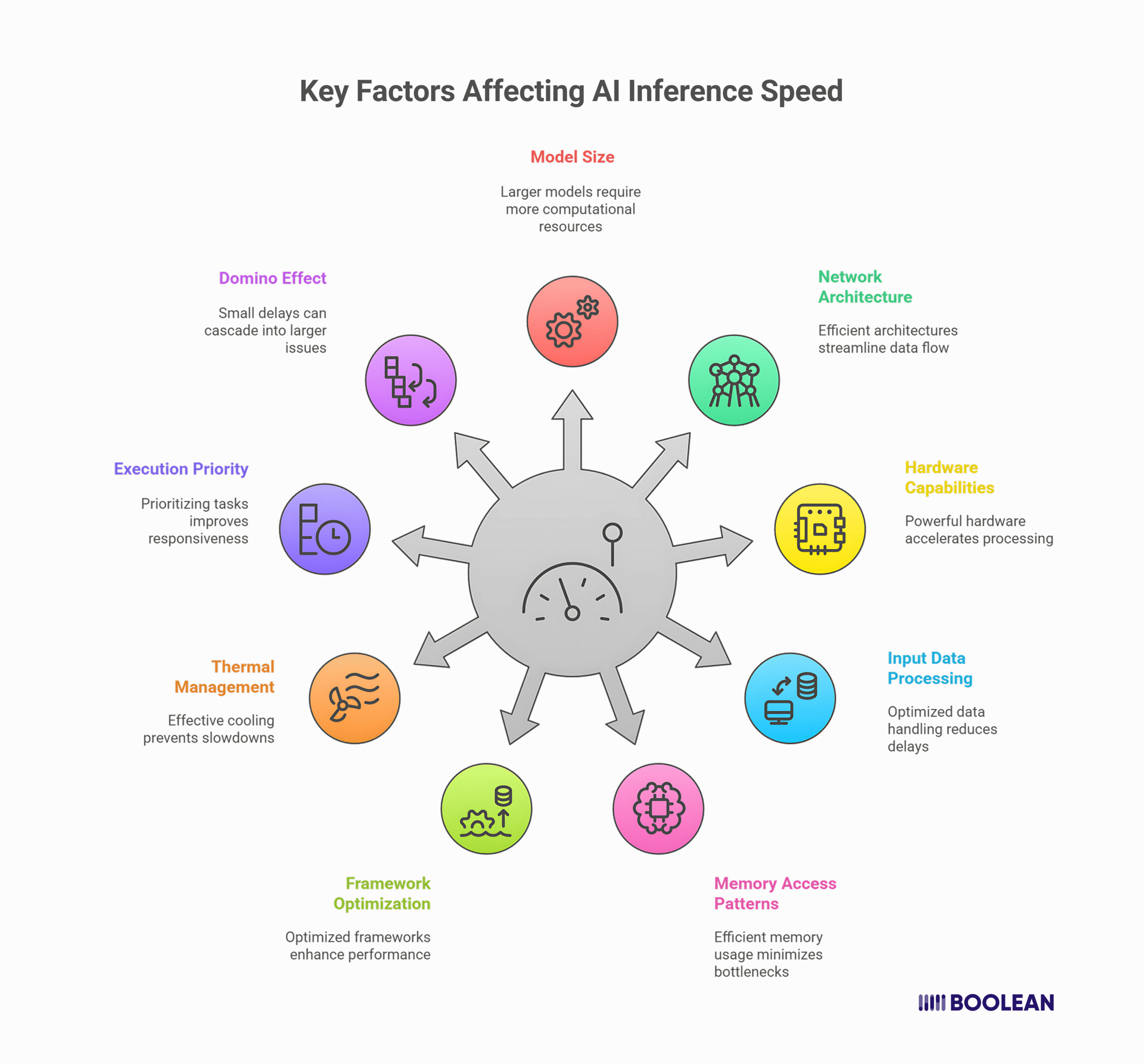

Key Factors Affecting AI Inference Speed

So what actually makes your AI-driven mobile apps fast or slow? Let’s break it down.

- Model Size and Complexity

Big models are powerful, but they’re also heavy.

In a neural network, more layers mean more calculations, and more parameters mean more memory access. A model with billions of parameters might be incredibly smart, but it’ll crawl on a phone.

This is especially true for language models. If you’re building conversational features, our guide on LLM Agents in Mobile Apps shows how to balance capability with performance.

Size isn’t everything, though. Architecture matters just as much.

- Network Architecture Choices

Some AI architectures are built for accuracy. Others are built for speed.

MobileNet, for instance, was designed specifically for phones. It uses clever tricks like depthwise separable convolutions (fancy term, simple concept: doing the same work with fewer calculations).
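To put numbers on “fewer calculations,” here is a back-of-the-envelope Python sketch that counts multiply-accumulate operations (MACs) for a standard convolution versus a depthwise separable one. The layer shape (56×56 feature map, 128 channels in and out, 3×3 kernel) is an arbitrary assumption for illustration, and the count ignores stride, padding, and bias.

```python
def standard_conv_macs(h, w, c_in, c_out, k):
    """MACs for a k x k convolution producing an h x w x c_out output."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h, w, c_in, c_out, k):
    """MACs for a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1 x 1 conv mixes the channels
    return depthwise + pointwise


# Illustrative layer shape (an assumption, not taken from a specific model)
H, W, C_IN, C_OUT, K = 56, 56, 128, 128, 3

std = standard_conv_macs(H, W, C_IN, C_OUT, K)
sep = depthwise_separable_macs(H, W, C_IN, C_OUT, K)
print(f"standard:  {std:,} MACs")
print(f"separable: {sep:,} MACs")
print(f"reduction: {std / sep:.1f}x")  # reduction: 8.4x
```

The ratio works out to 1/C_out + 1/K², so with 3×3 kernels the savings approach 9x as the channel count grows.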

Then you have models like YOLO for object detection or EfficientNet for image classification. Each makes different trade-offs. The key is picking the right tool for the job.

- Hardware Capabilities

Not all phones are created equal.

A flagship iPhone with a Neural Engine processes AI tasks differently from a budget Android phone.

Some devices have dedicated AI accelerators. Others rely on GPUs. Many still use regular CPUs for everything. Your inference speed can vary by 10x or more across different devices.

This is where framework choice becomes crucial. Different frameworks excel on different hardware.

Want to dive deeper? Check out our comparison of Best Mobile AI Frameworks: From ONNX to CoreML and TensorFlow Lite.

- Input Data Processing

Here’s something many developers overlook: preprocessing can be a huge bottleneck.

Before your model even starts thinking, you need to prepare the data. Resizing images. Normalizing values. Converting formats. On a desktop, this is trivial. On a phone, it can take longer than the actual inference.

I’ve seen apps where image preprocessing took 200ms while inference took just 50ms. That’s like spending more time prepping the ingredients than cooking the meal.
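A simple way to check whether your own pipeline has this problem is to time each stage separately. The Python sketch below is a generic harness, not tied to any framework: `preprocess` and `infer` are hypothetical stand-ins you would replace with your real resize/normalize step and model call. It reports the median latency per stage after a few warm-up runs, since first calls often include one-time setup costs.

```python
import statistics
import time


def profile_pipeline(preprocess, infer, raw_input, runs=20, warmup=3):
    """Return median milliseconds spent in preprocessing and in inference."""
    for _ in range(warmup):  # discard cold-start runs
        infer(preprocess(raw_input))

    pre_ms, inf_ms = [], []
    for _ in range(runs):
        t0 = time.perf_counter()
        tensor = preprocess(raw_input)
        t1 = time.perf_counter()
        infer(tensor)
        t2 = time.perf_counter()
        pre_ms.append((t1 - t0) * 1000)
        inf_ms.append((t2 - t1) * 1000)
    return {
        "preprocess_ms": statistics.median(pre_ms),
        "inference_ms": statistics.median(inf_ms),
    }


# Hypothetical stand-ins: scale 8-bit pixels to [0, 1], then "predict".
def fake_preprocess(pixels):
    return [p / 255.0 for p in pixels]


def fake_infer(tensor):
    return sum(tensor) / len(tensor)


if __name__ == "__main__":
    image = list(range(256)) * 100  # fake 25,600-pixel input
    print(profile_pipeline(fake_preprocess, fake_infer, image))
```

If the preprocessing number dominates, that’s where to optimize first, before touching the model.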

- Memory Access Patterns

Mobile devices have limited memory bandwidth. How your model accesses memory matters enormously.

Sequential access is fast. Random access is slow. Models that jump around in memory constantly will struggle.

This is why quantization (using smaller data types) helps so much; it’s not just about model size, it’s about reducing memory traffic.

- Framework and Runtime Optimization

The framework you choose impacts everything.

- TensorFlow Lite might excel on Android devices.

- Core ML might be unbeatable on iOS.

- ONNX Runtime offers great cross-platform support.

Each framework has its own optimization tricks and hardware acceleration support. For real-world examples of how these choices play out, see our article on Real-Time Edge AI in Mobile Apps: Frameworks and Use Cases.

- Thermal and Power Management

This one’s sneaky. Your model might run blazingly fast… for about 30 seconds.

Then thermal throttling kicks in. The device heats up, the processor slows down, and suddenly your 50ms inference takes 200ms. Sustained performance is often more important than peak performance for AI-driven mobile apps.
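To catch throttling before users do, measure latency over a sustained run rather than a single call. One hedged approach, sketched in Python: record per-inference latencies during a few minutes of continuous use, then compare the median of the last window to the median of the first. `throttle_ratio` is a hypothetical helper, and the window size and 1.5x alert threshold are assumptions to tune for your app.

```python
import statistics


def throttle_ratio(latencies_ms, window=50):
    """Median latency of the last `window` runs divided by that of the first."""
    if len(latencies_ms) < 2 * window:
        raise ValueError("need at least two full windows of measurements")
    first = statistics.median(latencies_ms[:window])
    last = statistics.median(latencies_ms[-window:])
    return last / first


# Synthetic data shaped like the 50ms -> 200ms slowdown described above
samples = [50.0] * 100 + [120.0] * 100 + [200.0] * 100
ratio = throttle_ratio(samples, window=100)
if ratio > 1.5:  # alert threshold is an assumption
    print(f"Sustained latency is {ratio:.1f}x the cold-device latency")
```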

- Background vs Foreground Execution

When your app runs matters too. Foreground apps get priority. Background apps get throttled. iOS and Android both limit what background apps can do to save battery.

Planning to run inference while the app is minimized? Your speed might drop dramatically.

- The Domino Effect

Here’s the thing about these factors: they compound.

A large model (strike one) with a bad memory access pattern (strike two) running on an old device (strike three) is a recipe for disappointed users.

But optimize even two or three of these factors, and you’ll see dramatic improvements.

The good news? You don’t need to optimize everything at once. Tackle the biggest bottlenecks first. Often, a few smart changes can transform your app’s performance.

Techniques to Optimize Inference Speed

Time to roll up our sleeves. Here are the techniques that actually move the needle for AI-driven mobile apps.

- Model Quantization

Quantization is like compression for AI models. Instead of using 32-bit floating-point numbers, you use 8-bit integers. Sometimes even less.

The result? Your model’s weights shrink by 75% (8 bits instead of 32). Memory usage drops. Calculations speed up. And here’s the kicker: for many tasks, accuracy barely changes.

I’ve seen image classification models go from 100MB to 25MB with less than 1% accuracy loss.

Most frameworks make this easy. TensorFlow Lite supports post-training quantization, and Core ML Tools offers weight quantization for Core ML models. With TensorFlow Lite’s converter, enabling default quantization is often a one-line change for massive gains.
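Under the hood, quantization maps each float to an 8-bit integer through a scale and a zero point. The pure-Python sketch below shows that affine mapping for a single tensor; it illustrates the arithmetic, not how TensorFlow Lite or Core ML implement it internally (real toolchains typically quantize per channel and calibrate activations on sample data). In TensorFlow Lite, the one-line change is setting `converter.optimizations = [tf.lite.Optimize.DEFAULT]` on a `TFLiteConverter`.

```python
def int8_params(values):
    """Affine (asymmetric) int8 parameters: real ~= (q - zero_point) * scale."""
    qmin, qmax = -128, 127
    lo = min(min(values), 0.0)  # the range must contain 0 so 0.0 maps exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    return scale, zero_point


def quantize(values, scale, zero_point):
    # Round to the nearest step, then clamp to the int8 range
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    return [(q - zero_point) * scale for q in q_values]


weights = [-0.62, -0.11, 0.0, 0.07, 0.33, 0.91]
scale, zp = int8_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)

max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(f"scale={scale:.5f} zero_point={zp} int8={q}")
print(f"max round-trip error={max_err:.5f} (at most scale / 2)")
print("storage: 4 bytes -> 1 byte per weight (75% smaller)")
```

The rounding error per weight is at most half a quantization step, which is why accuracy often survives: most models tolerate that much noise.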

- Model Pruning: Cutting the Fat

Neural networks are surprisingly redundant. Many connections contribute almost nothing to the final output.

Pruning removes these low-value connections. Think of it like cutting unnecessary words from a sentence: the meaning stays the same, but it’s faster to read. You can often remove 50-90% of parameters without significantly hurting accuracy.

The catch? Pruning usually requires fine-tuning (retraining) to recover accuracy. It’s more work upfront, but the payoff is worth it for production apps.
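The simplest variant, magnitude pruning, zeroes out the weights with the smallest absolute values. The sketch below shows just that step on a flat list of weights; `magnitude_prune` is a hypothetical helper, and in practice you’d prune gradually and fine-tune between rounds with a tool such as the TensorFlow Model Optimization Toolkit or PyTorch’s `torch.nn.utils.prune`.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # how many weights to remove
    # Indices ordered from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned


layer = [0.9, -0.05, 0.3, 0.01, -0.7, 0.2]
print(magnitude_prune(layer, sparsity=0.5))  # [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
```

One design caveat: zeros only speed things up if the runtime exploits sparsity. Scattered zeros mainly shrink the compressed model file; removing whole channels or filters (structured pruning) is what more reliably cuts latency on phones.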

- Knowledge Distillation: Teaching Smaller Models

Here’s a clever trick: train a small model to mimic a large one.

You start with a powerful but slow “teacher” model. Then you train a smaller “student” model to copy its behavior. The student learns not just the right answers but how confident the teacher is across all possible answers, which carries more information than the labels alone.

This is especially powerful for language models if you’re building conversational features. Our guide on How to Build ChatGPT-Powered Apps for Business Use explores this in detail.

The student model can be 10x smaller while retaining 95% of the capability. Perfect for mobile.
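The classic recipe, from Hinton et al.’s work on knowledge distillation, trains the student on a blend of two losses: the KL divergence between its temperature-softened predictions and the teacher’s, plus ordinary cross-entropy against the true label. Below is a pure-Python sketch of that loss for a single example. The temperature of 4.0 and weight `alpha` of 0.7 are common starting points, not values from this article, and a real training loop would compute this with your framework’s tensors so it can backpropagate.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.7):
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_t = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student_t) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    # T^2 keeps soft-target gradients on a comparable scale as T changes
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard_ce


teacher = [4.0, 1.5, 0.2]  # confident teacher, class 0
student = [2.0, 1.8, 0.5]  # student is still unsure
print(distillation_loss(student, teacher, label=0))
```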

- Hardware Acceleration: Use What You’ve Got

Modern phones pack specialized AI hardware. Not using it is like having a sports car and driving in first gear.

iOS devices have the Neural Engine. Many Android phones have NPUs or DSPs. Even GPUs can dramatically speed up inference. The key is using frameworks that tap into this hardware automatically.

But here’s a pro tip: don’t assume hardware acceleration is always faster. For tiny models, the overhead of moving data to specialized processors might actually slow things down. Test everything.
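“Test everything” can be partly automated. A hedged sketch: at first launch (or on a device farm), run the same sample input through each backend your framework exposes and keep the fastest one that works. The backends below are plain Python callables standing in for CPU, GPU, and NPU paths; `pick_fastest_backend` and the backend names are hypothetical, not any framework’s API.

```python
import statistics
import time


def pick_fastest_backend(backends, sample, runs=5, warmup=2):
    """Benchmark each candidate on `sample`; return (name, median_ms) of the fastest."""
    results = {}
    for name, run in backends.items():
        try:
            for _ in range(warmup):  # absorb one-time setup costs
                run(sample)
            timings = []
            for _ in range(runs):
                start = time.perf_counter()
                run(sample)
                timings.append((time.perf_counter() - start) * 1000)
            results[name] = statistics.median(timings)
        except Exception:
            continue  # unsupported on this device: skip it
    if not results:
        raise RuntimeError("no backend could run the sample")
    best = min(results, key=results.get)
    return best, results[best]


def cpu_backend(x):
    return sum(v * v for v in x)


def gpu_backend(x):
    time.sleep(0.01)  # simulate transfer overhead dominating a tiny model
    return sum(v * v for v in x)


def npu_backend(x):
    raise RuntimeError("delegate not available")  # simulate missing hardware


name, ms = pick_fastest_backend(
    {"cpu": cpu_backend, "gpu": gpu_backend, "npu": npu_backend},
    sample=list(range(100)),
)
print(f"using {name} ({ms:.3f} ms median)")
```

Persisting the winner per device model means you only pay the benchmarking cost once.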

- Batch Processing: Work Smarter

Processing one image at a time? You’re leaving performance on the table.

Batch processing handles multiple inputs together. Instead of ten separate inference calls, you make one call with ten inputs. The overhead gets amortized. Memory access becomes more efficient.

This works great for non-real-time tasks. Processing a photo album? Batch it. Real-time camera feed? Maybe not.
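Here is a minimal Python sketch of the idea: a helper that splits a list of inputs into fixed-size batches and hands each batch to a single inference call. `infer_batch` is a hypothetical function that takes a list and returns one result per item, and the batch size of 8 is an arbitrary assumption you’d tune against memory limits.

```python
def run_in_batches(items, infer_batch, batch_size=8):
    """Run inference over `items` in chunks, preserving input order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    results = []
    for start in range(0, len(items), batch_size):
        batch = items[start:start + batch_size]
        outputs = infer_batch(batch)  # one call amortizes per-call overhead
        if len(outputs) != len(batch):
            raise ValueError("infer_batch must return one output per input")
        results.extend(outputs)
    return results


# Hypothetical batched model that records how often it's invoked
calls = []


def fake_infer_batch(batch):
    calls.append(len(batch))
    return [f"label-{x}" for x in batch]


photos = list(range(20))  # e.g. 20 photos from an album
labels = run_in_batches(photos, fake_infer_batch, batch_size=8)
print(len(labels), "results from", len(calls), "calls")  # 20 results from 3 calls
```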

- Model Architecture Selection

Sometimes the best optimization is choosing a different model entirely.

MobileNet, EfficientNet, and SqueezeNet were designed with efficiency in mind, which makes them natural fits for mobile. They use architectural tricks that traditional models don’t.

Depthwise separable convolutions. Inverted residuals. Squeeze-and-excitation blocks. Fancy names, simple goal: do more with less.

For language tasks, consider mobile-optimized models like DistilBERT or TinyBERT. They’re specifically designed for resource-constrained environments.

Speaking of which, check out The best AI chatbots in 2025 for mobile apps and web platforms for examples of optimized conversational AI.

- Caching and Precomputation

Not all inference happens in real-time. Be strategic about when you run your models.

Cache frequent predictions. Precompute results when the app launches. Run inference during idle times.

If your app uses AI for UI enhancements, coordinate inference with animation frames. Our article on UI Animation in Mobile Apps shows how to balance smooth animations with AI processing.

- Dynamic Model Loading

Your app might have multiple AI features. Loading all models at once wastes memory.

Load models on demand. Unload them when you’re done. Use smaller models where you can.
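One way to structure this, sketched in Python: a registry that loads a model only on first use and evicts the least recently used model when a memory budget would be exceeded. The loader callables, model names, and megabyte sizes are hypothetical placeholders; on a device you’d call your framework’s model-loading API and release its interpreter or session on eviction.

```python
from collections import OrderedDict


class ModelRegistry:
    """Load models lazily and keep total size under a budget (LRU eviction)."""

    def __init__(self, budget_mb):
        self.budget_mb = budget_mb
        self._specs = {}              # name -> (loader, size_mb)
        self._loaded = OrderedDict()  # name -> model, least recently used first

    def register(self, name, loader, size_mb):
        self._specs[name] = (loader, size_mb)

    def _used_mb(self):
        return sum(self._specs[n][1] for n in self._loaded)

    def get(self, name):
        if name in self._loaded:
            self._loaded.move_to_end(name)  # mark as most recently used
            return self._loaded[name]
        loader, size_mb = self._specs[name]
        if size_mb > self.budget_mb:
            raise MemoryError(f"{name} alone exceeds the budget")
        while self._loaded and self._used_mb() + size_mb > self.budget_mb:
            self.unload(next(iter(self._loaded)))  # evict least recently used
        model = loader()
        self._loaded[name] = model
        return model

    def unload(self, name):
        self._loaded.pop(name, None)  # drop the reference so memory can be freed

    def loaded(self):
        return list(self._loaded)


registry = ModelRegistry(budget_mb=60)
registry.register("face", lambda: "face-model", size_mb=30)
registry.register("ocr", lambda: "ocr-model", size_mb=25)
registry.register("translate", lambda: "translate-model", size_mb=20)

registry.get("face")
registry.get("ocr")
registry.get("face")       # touch "face" so it becomes most recently used
registry.get("translate")  # 75 MB would exceed 60 MB, so "ocr" is evicted
print(registry.loaded())   # ['face', 'translate']
```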

Best Practices in Mobile AI App Development

Developing an AI-driven mobile app isn’t only about clever algorithms. It’s about creating experiences that delight users while respecting their devices, data, and expectations.

Let me run through the best practices in mobile AI app development.

Start With User Needs, Not AI Capabilities

I see this mistake constantly. Developers get excited about a cool AI technique and build an app around it. Wrong approach.

Start by identifying genuine user problems. Does AI actually solve them? Is mobile the right platform?

Before writing a line of code, ask these questions. The most sophisticated neural network does not mean anything if users do not need it.

Your users don’t care about your brilliant implementation. They care about results. Period.

Design for Graceful Degradation

Accept that your AI will fail sometimes, and have a plan for it.

Build fallbacks into every AI feature. If the on-device model can’t handle a request, can you process it in the cloud?

If network connectivity drops, is there a simpler alternative? Never leave users stranded when AI components fail.
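A fallback chain is one simple way to express this. In the Python sketch below, `on_device`, `cloud`, and `simple_rules` are hypothetical handlers for the same task, tried in order. Any handler that raises (for example, because the network is down) passes the request to the next, and the result records which tier answered so the UI can adapt, say by labeling a result as approximate.

```python
def classify_with_fallback(request, handlers):
    """Try each (name, handler) in order; return (name, result) from the first success."""
    errors = []
    for name, handler in handlers:
        try:
            return name, handler(request)
        except Exception as exc:  # a real app would catch narrower error types
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all handlers failed: " + "; ".join(errors))


def on_device(req):
    if len(req) > 100:
        raise ValueError("input too long for the on-device model")
    return "on-device label"


def cloud(req):
    raise ConnectionError("offline")  # simulate a dropped connection


def simple_rules(req):
    return "keyword match"  # crude but always available


tiers = [("on_device", on_device), ("cloud", cloud), ("rules", simple_rules)]
print(classify_with_fallback("short text", tiers))  # ('on_device', 'on-device label')
print(classify_with_fallback("x" * 500, tiers))     # ('rules', 'keyword match')
```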

This approach requires humility. Accept that your AI isn’t perfect and design accordingly.

Transparent AI, Happy Users

People get uncomfortable when they don’t understand what’s happening with technology. Be transparent.

Explain what data you’re collecting and why. Show progress when processing is happening.

Let users know when AI is making decisions versus when traditional algorithms are at work. When appropriate, explain confidence levels in AI outputs.

This transparency builds trust. Trust keeps users engaged with your app.

Test With Diverse Data and Devices

Your testing needs to be more thorough with AI apps. Much more thorough.

Test across different devices, from flagship phones to budget models. Test with diverse data that represents different user groups. Simulate poor network conditions. Challenge your models with edge cases.

AI behaves differently in the wild than in your controlled development environment. Find those differences before your users do.

Balance Online and Offline Capabilities

Not everything needs to happen on the device. Not everything should happen in the cloud.

Sensitive operations might need to stay on-device for privacy reasons. Complex inference might perform better in the cloud. Find the right balance for your specific application.

Consider hybrid approaches. Use lightweight models on-device for immediate responses, with more powerful cloud models for complex cases. The best AI apps seamlessly blend both worlds.
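A common way to wire up such a hybrid is confidence-based routing: answer on-device when the local model is confident, and escalate to the cloud only when it isn’t and a connection is available. The sketch assumes a hypothetical local model that returns a `(label, confidence)` pair and a hypothetical `cloud_predict` function; the 0.8 threshold is an arbitrary assumption to tune against your own accuracy and latency data.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence between 0 and 1)


def route(x, local_predict: Callable[..., Prediction],
          cloud_predict: Callable[..., str], online: bool,
          threshold: float = 0.8) -> Tuple[str, str]:
    """Return (label, source), preferring the fast on-device answer."""
    label, confidence = local_predict(x)
    if confidence >= threshold or not online:
        return label, "device"        # fast, private, works offline
    return cloud_predict(x), "cloud"  # slower, but handles hard cases


def local_predict(x):
    return ("cat", 0.95) if x == "clear photo" else ("cat", 0.55)


def cloud_predict(x):
    return "lynx"


print(route("clear photo", local_predict, cloud_predict, online=True))    # ('cat', 'device')
print(route("blurry photo", local_predict, cloud_predict, online=True))   # ('lynx', 'cloud')
print(route("blurry photo", local_predict, cloud_predict, online=False))  # ('cat', 'device')
```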

Implement Progressive Enhancement

Build your core functionality to work without AI first. Then enhance it with AI capabilities.

This approach ensures your app remains functional even when AI components run into problems.

It also creates a stronger architecture and a clear separation of concerns in your codebase.

Optimize Battery and Resource Usage

AI can be resource-hungry. Respect your users’ devices.

Schedule intensive AI tasks when the device is charging or idle. Batch non-urgent processing. Monitor battery impact during development.

Consider throttling AI features when the battery is low.
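These rules are easy to centralize in one policy function that every AI feature consults before running heavy work. The Python sketch below is illustrative only: the `DeviceState` fields mirror signals that iOS and Android both expose in some form (battery level, charging state, low-power mode, thermal pressure), but the field names, the 20% threshold, and the three modes are assumptions, not any platform’s API.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    FULL = "full"          # run the best model now
    REDUCED = "reduced"    # smaller model, lower frame rate, fewer runs
    DEFERRED = "deferred"  # queue non-urgent work until conditions improve


@dataclass
class DeviceState:
    battery_pct: int
    charging: bool
    low_power_mode: bool
    thermal_serious: bool  # e.g. the platform reports "serious" or worse


def inference_mode(state: DeviceState, urgent: bool) -> Mode:
    if state.thermal_serious:
        # Heavy work now would only trigger more throttling
        return Mode.REDUCED if urgent else Mode.DEFERRED
    if state.charging:
        return Mode.FULL  # plugged in: a good time for batch jobs too
    if state.low_power_mode or state.battery_pct < 20:
        return Mode.REDUCED if urgent else Mode.DEFERRED
    # On battery with a healthy charge: serve users now, defer background work
    return Mode.FULL if urgent else Mode.DEFERRED


print(inference_mode(DeviceState(15, False, False, False), urgent=True))
print(inference_mode(DeviceState(15, True, False, False), urgent=False))
```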

Remember that in mobile, efficiency isn’t optional; it’s essential. An app that drains battery quickly will be uninstalled quickly, too.

Continuous Learning and Improvement

Your AI model should get better over time. Plan for this from day one.

Implement analytics to track model performance. Collect user feedback on AI outputs. Build mechanisms to update models without requiring full app updates. Consider A/B testing different models.
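For A/B tests on models, each user should get the same variant every session. A common technique is deterministic bucketing: hash a stable user identifier together with the experiment name and map the result to a variant. The Python sketch below uses SHA-256 from the standard library; the experiment name, variant names, and 50/50 split are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str],
                   weights: list[float]) -> str:
    """Deterministically map a user to a variant according to `weights`."""
    if len(variants) != len(weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("need one weight per variant, summing to 1")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    # Top 53 bits as an integer, scaled to an exact float in [0, 1)
    point = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if point < cumulative:
            return variant
    return variants[-1]  # guard against floating-point rounding in the weights


variants = ["model_v1", "model_v2_quantized"]
print(assign_variant("user-42", "detector-test", variants, [0.5, 0.5]))
```

Including the experiment name in the hash means a user’s bucket in one experiment doesn’t predict their bucket in the next.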

The apps that stand out are those that get smarter with use. Make yours one of them.

Mind the Privacy-Personalization Balance

Users want personalized experiences but also value privacy. This tension defines modern mobile development.

Be explicit about what data you collect and how you use it. Give users control over AI personalization. Consider federated learning approaches that improve models without sharing raw user data.
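The core aggregation step of federated averaging (FedAvg), the best-known federated learning algorithm, is simple: each device trains locally and uploads only its updated weights, and the server averages them, weighting each device by how many examples it trained on. The Python sketch below shows only that averaging on flat weight lists with illustrative numbers; real deployments add secure aggregation, client sampling, and often differential privacy.

```python
def federated_average(client_updates):
    """client_updates: list of (weights, num_examples). Returns the weighted mean."""
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, n in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must send the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * (n / total)
    return averaged


# Three phones report locally trained weights; raw user data never leaves them
updates = [
    ([0.10, 0.50], 100),
    ([0.30, 0.40], 300),
    ([0.20, 0.60], 100),
]
print(federated_average(updates))
```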

Document Everything

Document your data sources. Document your model architecture. Document your preprocessing steps. Document your performance metrics.

When you revisit your AI implementation six months later, you’ll thank yourself.

When new team members join, they’ll thank you too. When you need to troubleshoot unexpected behaviors, proper documentation will save days of frustration.

Keep Learning

The mobile AI landscape is evolving rapidly. What’s state of the art today will be outdated tomorrow.

Stay informed about new frameworks, optimization techniques, and hardware capabilities. Allocate time for continuous learning.

Developers who flourish in mobile AI are those who never stop being students of their craft.

Consider Ethical Implications

AI isn’t morally neutral. Your implementation choices have real consequences.

Consider potential biases in your training data. Think about accessibility for users with disabilities.

Evaluate how your AI might impact different demographic groups. Plan for potential misuse.

Building responsible AI isn’t just the right thing to do; it’s good business. Users increasingly demand ethical technology.

Create Delightful Interactions

AI should enhance your app’s UI animation, not fight against it. Think about how AI responses integrate with your overall user experience.

Use animations to indicate AI processing. Create natural transitions between traditional UI and AI-driven elements. Make interactions with AI components feel intuitive and responsive.

The magic happens when powerful AI feels natural and effortless to use.

Remember, building a great AI-driven mobile app isn’t about applying the most complex algorithms. It’s about solving real problems for real people in ways that feel magical yet reliable.

Keep the user at the center of every decision, and the technical details will fall into place.

Case Studies and Examples

Nothing teaches better than real-world success stories.

Let’s look at how actual developers tackled inference speed challenges in their AI-driven mobile apps. Their journeys might surprise you.

- Snapshot: The Photo Enhancement App That Almost Failed

Snapshot launched with a brilliant AI that could transform ordinary photos into professional-looking images.

Just one problem: it took 8 seconds per photo. Users abandoned the app in droves.

The team was devastated. They had built amazing technology that nobody wanted to use.

Their turnaround story is instructive.

First, they quantized their model from 32-bit to 8-bit precision, cutting processing time to 5 seconds. Better, but still too slow.

Next, they implemented GPU acceleration, reducing it further to 3 seconds.

The breakthrough came when they split their model into two parts.

- A lightweight “preview” model delivered results in under 1 second, while the full model processed in the background. Users saw immediate results while waiting for the final version.

Downloads increased 340% after these optimizations.

The lesson? Users value speed over perfection.

- VoiceAssist: When Battery Life Kills a Great Idea

VoiceAssist created an on-device AI assistant that didn’t need cloud connectivity.

Privacy-conscious users loved it. Until their phones died by lunchtime.

The constant neural network processing drained batteries at an alarming rate. Uninstalls skyrocketed.

Their solution was multi-faceted. They implemented aggressive caching of common queries.

They lowered sampling rates when possible. Most importantly, they detected when the phone was stationary and reduced wake-word sensitivity.

Battery impact dropped by 70%. User retention doubled. The app survived.

What I find fascinating is that their most effective optimization wasn’t technical; it was behavioral.

Understanding when users actually needed the assistant saved more battery than any code optimization.

- MedScan: When Accuracy Can’t Be Compromised

MedScan used AI to identify potential skin conditions from phone camera images. Unlike photo filters or games, they couldn’t sacrifice accuracy for speed.

Their initial model was accurate but painfully slow at 12 seconds per scan. Unacceptable for medical use.

Instead of compromising accuracy, they rebuilt their pipeline. They optimized image preprocessing, reducing unnecessary color conversions.

They implemented a two-stage detection approach. Simple conditions got immediate results, while complex cases triggered more detailed analysis.

They also made the waiting experience better with UI animation that showed the analysis process. Users felt the app was working, not stalling.

The result? Scan time dropped to 4 seconds with slightly improved accuracy. User satisfaction scores increased by 45%.

The takeaway: sometimes optimization happens outside the AI model itself.

- ShopSmart: The Price of Cloud Dependence

ShopSmart used AI to identify products in stores and compare prices. Their initial version sent images to cloud servers for processing. It worked great in their office with fast Wi-Fi.

In actual stores with spotty connectivity? Disaster. Responses took up to 30 seconds or failed entirely.

Their solution was hybrid inference. They deployed a smaller model on-device that could identify common products instantly. The cloud model was only called for unusual items. They also compressed images before upload and cached previous results.

Response time dropped to under 2 seconds for 80% of scans. User engagement tripled.

The lesson? Never assume ideal conditions. Test your app where users actually use it.

- LinguaLearn: Optimization Through User Patterns

LinguaLearn built an AI language tutor that adapted to learning styles. Their initial release was praised for personalization but criticized for laggy responses.

Analysis revealed something surprising. Most users practiced similar phrases each day. The app was recalculating personalized responses for content it had seen before.

The solution was elegant. They implemented smart caching based on user patterns. Frequently practiced phrases got pre-computed responses.

They also identified peak usage times and pre-loaded models before users typically opened the app.

Response time improved by 65%. Battery usage dropped by 40%. All without changing their core AI models.

The insight? Sometimes the best optimization comes from understanding user behavior, not tweaking technical parameters.

- FitCoach: When Hardware Makes All The Difference

FitCoach used computer vision to analyze exercise form. On flagship phones, it worked flawlessly. On mid-range devices? Unusably slow.

Instead of accepting this limitation, they adapted.

- For newer phones, they used the full 30fps model. For mid-range devices, they dropped to 15fps and simplified the pose estimation model. For budget phones, they used still image analysis at key points rather than continuous video.

Each tier received an optimized experience appropriate for their hardware. User satisfaction scores became consistent across device types.

This approach required more development effort but expanded their market dramatically. Their app now performs well on devices representing 95% of their target market.

- ChatPal: When Perceived Speed Wins

ChatPal wanted to create a fully on-device emotional support chatbot. Early tests showed 3-5 second response times, far too slow for natural conversation.

They studied existing chatbots and noticed something interesting: the most responsive ones weren’t necessarily using smaller models. They were being smarter about context management.

ChatPal implemented progressive response generation, where the app displayed the first few words while continuing to generate the full response.

They also pruned the conversation context after identifying emotional themes rather than keeping the full conversation history.

Response time dropped to under 1 second. User session length increased by 250%.

The lesson? Sometimes perceived speed matters more than actual speed.

- BusinessAssist: Enterprise Challenges

BusinessAssist created a mobile platform for business use. Their enterprise clients demanded both speed and security.

Their innovation was workload-specific optimization. Document analysis ran on-device for security. Image processing used cloud resources for speed. Language tasks used a hybrid approach.

They also implemented organizational caching, where common queries within a company shared optimized paths.

Performance improved by 60% while maintaining security requirements. Enterprise adoption doubled.

What worked for them was rejecting one-size-fits-all optimization in favor of workload-specific strategies.

The Patterns That Emerge

Looking across these case studies, patterns emerge:

- The most successful optimizations often combine multiple approaches.

- User perception of speed sometimes matters more than actual speed.

- Different hardware demands different optimization strategies.

- Understanding user behavior often reveals optimization opportunities.

- Clever UI design can make the necessary processing time feel shorter.

These real-world examples show that optimizing AI-driven mobile apps isn’t just a technical challenge. It’s about understanding people, devices, and contexts. It’s about making smart trade-offs and designing holistic experiences.

The most successful teams didn’t just optimize code; they optimized the entire user journey.

What will your optimization story be? Learn from these examples, apply their principles to your own situation, and perhaps your case study will inspire other developers.

Collaborating with Boolean Inc.: A New Era of Fast and Efficient Mobile AI

When optimization challenges feel overwhelming, you don’t have to tackle them alone. Boolean Inc. specializes in making AI-driven mobile apps faster, smarter, and more efficient.

Their team has optimized inference pipelines for hundreds of mobile applications across industries. From healthcare to e-commerce, gaming to productivity, they’ve seen the challenges you’re facing and have solved them before.

What makes Boolean Inc. different? They don’t just optimize existing code. They rethink the entire approach.

Their engineers analyze your specific use case, user behavior patterns, and target devices to create customized optimization strategies. No cookie-cutter solutions here.

I’ve seen clients transform struggling apps into category leaders after partnering with Boolean Inc.

One fitness app reduced model size by 75% while actually improving pose detection accuracy, something the internal team had deemed impossible.

Ready to make your AI-driven mobile app lightning-fast? Boolean Inc. offers free consultations to assess your specific optimization opportunities.

Your users won’t just notice the difference; they’ll feel it with every interaction.

Conclusion

Optimizing inference speed isn’t just about technical bragging rights; it’s about giving users an experience that feels instant, seamless, and magical.

In the world of AI-driven mobile apps, speed is the difference between delight and frustration.

The good news? You don’t need massive resources to make your AI features faster. With the right techniques (quantization, pruning, and smart framework choices), you can build apps that feel snappy and responsive.

Start small. Measure. Tweak. Partner with experts when needed.

Because in AI-powered mobile experiences, each millisecond matters.

FAQs

- How much faster can I expect my app to be after implementing inference optimization?

Most developers see 30-70% improvements in inference speed after proper optimization. Results vary based on your starting point, model complexity, and target devices.

- Will optimizing my AI model for speed significantly reduce its accuracy?

Not necessarily. With techniques like quantization-aware training and knowledge distillation, many apps maintain 95%+ of their original accuracy while achieving 2-3x speed improvements.

- Which is better for mobile AI: TensorFlow Lite or PyTorch Mobile?

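In 2025, TensorFlow Lite typically offers better optimization tools and hardware acceleration support across Android devices, while PyTorch Mobile provides easier development workflows for teams already working in PyTorch. Note that PyTorch now points new on-device projects to ExecuTorch, the successor to PyTorch Mobile.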

- How do Apple’s Neural Engine and Google’s Edge TPU compare for on-device inference?

Apple’s Neural Engine generally provides superior performance for Core ML models on iOS devices, while Google’s Edge TPU is built specifically to accelerate fully 8-bit-quantized TensorFlow Lite models on supported hardware.

- Is it worth implementing cloud fallback for complex AI features?

Absolutely. Hybrid approaches that combine on-device processing with cloud fallback provide the best user experience. On-device handles common cases quickly while maintaining privacy, and cloud processing supports complex edge cases without bloating your app.