Introduction

It’s no secret that large language models (LLMs) are everywhere now. Just a few years ago, most people hadn’t even heard of them.

Today, they’re writing emails, answering customer queries, summarizing reports, and even helping you code.

The market for LLMs? It’s exploding.

In 2024, the LLM market is valued at $3.92 billion. By 2025, it is expected to grow to $5.03 billion. But hold on, that’s just the start.

By 2029, the market is estimated to reach $13.52 billion, which implies a compound annual growth rate (CAGR) of about 28%.
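The growth rate can be checked directly from the market sizes quoted above. The snippet below is a quick arithmetic sketch; the variable names are purely illustrative.

```python
# Market-size figures quoted in the text, in billions of US dollars.
value_2025 = 5.03
value_2029 = 13.52
years = 2029 - 2025

# CAGR = (end / start) ** (1 / years) - 1
cagr = (value_2029 / value_2025) ** (1 / years) - 1
print(f"Implied CAGR 2025-2029: {cagr:.1%}")
```

The result lands at roughly 28% per year, which matches the figure above.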

Clearly, this is not a passing trend. But hold on a moment. The numbers are impressive, sure. But what does this actually mean for you? For mobile app developers? For users?

Here’s the deal: LLMs are evolving. They’re not just passive tools that wait for prompts. They are becoming autonomous agents: smart, active assistants that can understand user goals, manage tasks, and even make decisions within a mobile app.

We are talking about apps that do not just react, but think, plan, and work on behalf of the user.

Think of a fitness app that does not just track your steps, but also builds a custom workout plan based on your schedule.

Or a project management app that can automatically delegate tasks, write updates, and remind your team, all without constant human input. This is the new wave of LLM agents in mobile apps.

And it’s not some far-off vision.

By 2025, autonomous workflows will be a core part of mobile app experiences. Businesses are already investing heavily to build smarter, more personalized apps powered by LLM agents.

Why? Because users are demanding it. People want apps that just work without the friction of endless clicks, taps, or manual inputs.

This change is not just technical; it is deeply human. It’s about making digital experiences feel effortless, intuitive, and personal.

In this blog, we’re going to break it all down for you to understand what LLM agents are, why they matter for mobile apps, and how you can start preparing for this AI-powered future.

Ready to unlock what’s next? Let’s dive in.

How LLM Agents Work in Mobile Apps

So, how do LLM agents actually work inside a mobile app? Let’s break it down in simple terms.

At a high level, think of an LLM agent as a three-part system:

- LLM (large language model): This is the brain. It understands language, generates responses, and reasons through tasks.

- Agent Logic: This is the decision-maker. It defines what the agent can do, how it plans tasks, and how it reacts to user requests.

- Mobile Interface: This is how the user interacts with the agent, whether through chat, voice, buttons, or background automation.

All three parts need to work together. The LLM processes the user’s input (e.g., a question or command). The Agent Logic decides, “What should I do with it?” Then the mobile app interface delivers the action or response to the user in a convenient way.

A Simple Example:

Suppose you are using a travel booking app or an app like Airbnb. You type: “Book me a hotel in New York later this week, under $200 a night.”

- The LLM understands your intent, location, dates, and budget.

- The Agent Logic kicks in and triggers a workflow: searches hotels, filters by price, and checks availability.

- The Mobile App Interface then shows you a curated list of options, ready for booking.

You didn’t click through a dozen menus. You just “asked,” and the agent did the work. This is how LLM agents turn apps from static tools into dynamic, conversational experiences.
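To make the three parts concrete, here is a minimal sketch of the hotel example. Everything in it is an assumption for illustration: `fake_llm_parse` is a rule-based stand-in for a real LLM call, `HOTELS` is a made-up inventory, and `render` only returns the text a mobile UI might display.

```python
import re
from dataclasses import dataclass


@dataclass
class Intent:
    action: str
    city: str
    max_price: int


def fake_llm_parse(text: str) -> Intent:
    """Stand-in for the LLM: pulls city and budget out of the request."""
    city = re.search(r"hotel in ([A-Z][a-zA-Z ]+?)(?: later| this| next|,|$)", text)
    price = re.search(r"under \$(\d+)", text)
    return Intent(
        action="book_hotel",
        city=city.group(1).strip() if city else "",
        max_price=int(price.group(1)) if price else 0,
    )


# Hypothetical inventory; a real app would query a booking API instead.
HOTELS = [
    {"name": "Midtown Inn", "city": "New York", "price": 180, "available": True},
    {"name": "Park Suites", "city": "New York", "price": 260, "available": True},
    {"name": "Hudson Rooms", "city": "New York", "price": 150, "available": False},
    {"name": "Lakeview", "city": "Chicago", "price": 120, "available": True},
]


def agent_logic(intent: Intent) -> list:
    """Agent logic: search, filter by price, and check availability."""
    if intent.action != "book_hotel":
        return []
    return [
        h for h in HOTELS
        if h["city"] == intent.city
        and h["price"] <= intent.max_price
        and h["available"]
    ]


def render(options: list) -> str:
    """Interface layer: format the curated list for display."""
    if not options:
        return "No matching hotels found."
    return "\n".join(f"{h['name']} - ${h['price']}/night" for h in options)


request = "Book me a hotel in New York later this week, under $200 a night."
print(render(agent_logic(fake_llm_parse(request))))
```

In a production app the parsing step would be an actual model call and the filtering would hit real services, but the division of responsibilities stays the same.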

Cloud vs On-Device

Now here’s a key piece: where does all this AI magic happen? There are two main places: the cloud or on your device (on-device AI).

- Cloud-based LLM Agents:

Most LLMs today, such as ChatGPT-powered apps, are cloud-based. That means the heavy computation happens on remote servers. The mobile app sends the user input to the cloud, retrieves the AI response, and displays it.

Pros:

- Access to powerful, large-scale models.

- Always up-to-date with the latest improvements.

- Easier to integrate advanced capabilities.

Cons:

- Requires a stable internet connection.

- Can introduce latency (the annoying “waiting for response” moments).

- Raises privacy concerns (user data sent to external servers).

- On-device LLM Agents:

Thanks to advances in mobile hardware (such as Apple’s Neural Engine or Qualcomm’s AI chips), we are starting to see on-device LLM agents.

These models are small and optimized to run locally on smartphones.

Pros:

- Ultra-low latency (near-instant responses).

- Works offline; no internet required.

- Better privacy and data control.

Cons:

- Limited model size compared to cloud-based LLMs.

- More resource-intensive on device battery and memory.

- Updates require app-level changes.

Real-Life Scenario: Cloud vs On-Device

Imagine using a note-taking app powered by an LLM agent.

- If you are on Wi-Fi, the app can use a cloud LLM to draft long, complex documents with rich suggestions.

- But when you are on a plane (offline), it can switch to an on-device model to summarize notes or help with to-do lists.

This flexibility ensures that the app remains useful, fast, and private, wherever you are.

Want to understand how on-device agents compare to cloud-based ones? Dive into Real-Time Edge AI vs Cloud Inference: Frameworks and Use Cases for a complete breakdown.

Hybrid Approach

Many apps will adopt a hybrid model. Simple tasks (e.g., scheduling, quick notes, or offline commands) are handled on-device.

For more complex reasoning or content generation, the app calls the cloud-based LLM.

This hybrid setup provides:

- Speed for everyday tasks.

- Power for heavy-lifting tasks.

- Resilience in low or no connectivity situations.

- Privacy-first options when needed.
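A hybrid setup needs some rule for deciding where each request runs. The sketch below shows one possible routing heuristic; the `Request` fields and the `SIMPLE_KEYWORDS` list are assumptions made for illustration, not a standard API.

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    is_online: bool
    contains_sensitive_data: bool = False


# Assumed heuristic: requests mentioning these words count as "simple".
SIMPLE_KEYWORDS = ("remind", "schedule", "note", "timer")


def choose_backend(req: Request) -> str:
    """Return "on_device" or "cloud" for a given request."""
    if not req.is_online:
        return "on_device"   # resilience: no connectivity, stay local
    if req.contains_sensitive_data:
        return "on_device"   # privacy-first option
    if any(word in req.text.lower() for word in SIMPLE_KEYWORDS):
        return "on_device"   # speed for everyday tasks
    return "cloud"           # power for heavy-lifting tasks


print(choose_backend(Request("Draft a detailed project proposal", is_online=True)))
```

A real router would likely also consider battery level, model availability, and request size, but the decision order above mirrors the four benefits listed.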

Why This Architecture Matters for Developers

If you are a developer, it is no longer optional to understand this architecture.

It affects:

- User experience (latency, smoothness)

- Battery life and resource usage

- Data privacy and regulatory compliance

- How your app scales with AI features

Knowing when to offload tasks to the cloud, when to keep them on the device, and how to design a smooth agent workflow will be a significant skill in 2025 and beyond.

Why Autonomous Workflows Matter

Let’s face it, people expect apps to do more with less effort. No one wants to click through endless menus or fill out the same form again and again.

We want apps that “just get it.” That’s where LLM agents in mobile apps step in.

Autonomous workflows are all about reducing friction. They turn apps from static tools into proactive assistants.

Instead of you telling the app every small detail, the app anticipates your needs, plans tasks, and executes them, often without you even noticing.

Why Now?

Users are getting accustomed to AI-driven experiences. From voice assistants to smart email replies, automation is becoming the norm. LLM agents in mobile apps take it further by creating end-to-end workflows that automate complex, multi-step tasks.

For example:

- A personal finance app that doesn’t just track expenses but also suggests budget adjustments in real-time.

- A health app that reads your activity data, books doctor appointments, diagnoses, and even follows up with personalized health advice.

- A project management app that automatically drafts progress reports and assigns next steps to your team.

This level of smart automation isn’t just convenient, it’s becoming essential.

Business Impact of LLM Agents in Mobile Apps

For businesses, autonomous workflows aren’t just a “cool feature.” They’re a competitive edge.

- Higher user engagement: Apps that help users make decisions quickly get used more.

- Higher retention rates: Users stick with apps that feel effortless and smart.

- Operational efficiency: LLM agents reduce the need for human intervention in repetitive tasks, saving time and resources.

Companies that embrace LLM agents in mobile apps will lead the market. Those that do not? They will fall behind as users migrate to apps that provide a faster, smoother, and more intelligent experience.

The Human Side of Automation

Autonomous workflows aren’t about replacing humans. They are about empowering users. They cut through digital noise and let people focus on what really matters.

When apps can handle the busywork, users can spend their time on creative, strategic, or simply enjoyable activities.

It is not only about technology; it is also about creating better human experiences.

In short, LLM agents in mobile apps are redefining how users interact with technology. They’re transforming mobile apps from reactive tools into proactive partners.

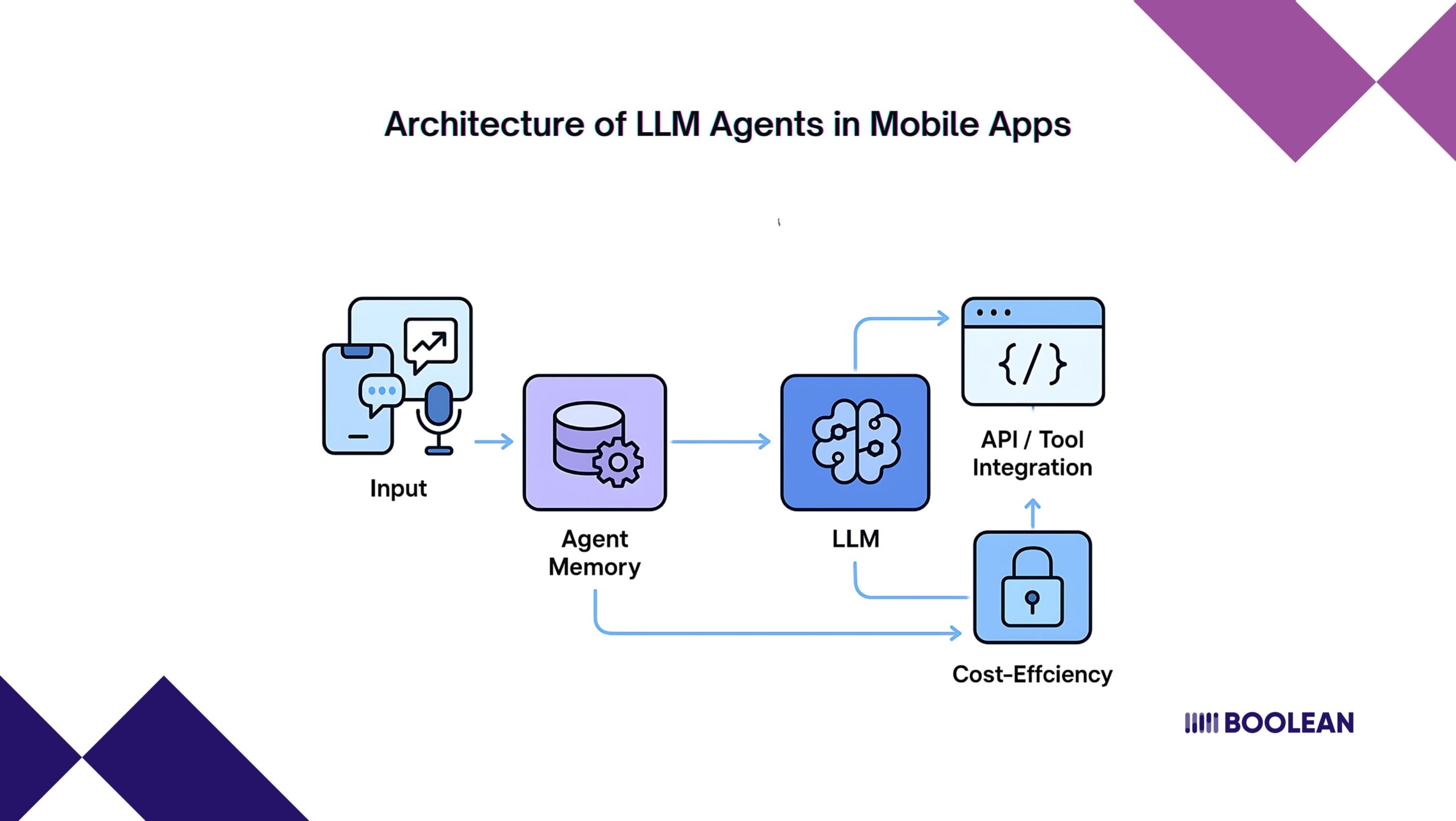

Architecture of LLM Agents in Mobile Apps

Let’s pull back the curtain and see what is really happening under the hood when you interact with an LLM agent in a mobile app.

It may look like magic when your app “just knows” what you need. But behind the curtain, there is a smart and structured architecture that works smoothly.

Here are the major components that bring LLM agents in mobile apps to life:

- Input Layer: Voice, Text, Sensors (How the Agent Listens)

Every interaction starts with input. This could be:

- Text (like typing a question)

- Voice commands (think Siri or Google Assistant)

- Sensor data (e.g., your location, accelerometer, or health metrics)

The more context the agent has, the smarter it becomes. In 2025, apps will increasingly use multimodal inputs, combining voice, text, and real-world data to understand users better.

Example: A fitness app agent can combine your spoken request (“plan my workouts”) with your step count to create a custom routine on the fly.

- Agent Memory: Long-term and Short-term Context

An agent isn’t useful if it forgets everything you’ve told it. This is where Agent Memory comes in.

- Short-term memory holds the current conversation or workflow. For example, if you are booking a flight, it remembers your destination and dates until the task is completed.

- Long-term memory stores preferences, habits, and previous interactions. Think of it as the agent’s “relationship” with you; it knows your favorite airline or that you prefer morning flights.

Good memory design makes interactions feel human-like. The best LLM agents in mobile apps will build deeper, more personalized experiences over time.
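The two kinds of memory can be sketched with plain data structures. This is an illustrative in-memory version, assuming a bounded deque for the current conversation and a dictionary for preferences; the `AgentMemory` class and its method names are hypothetical, and a real app would persist long-term data securely.

```python
from collections import deque


class AgentMemory:
    def __init__(self, short_term_limit: int = 10):
        # Short-term: only the most recent turns of the current task.
        self.short_term = deque(maxlen=short_term_limit)
        # Long-term: preferences that survive across tasks and sessions.
        self.long_term = {}

    def remember_turn(self, role: str, text: str) -> None:
        self.short_term.append((role, text))

    def save_preference(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def end_task(self) -> None:
        """Drop the conversation once the workflow is finished."""
        self.short_term.clear()

    def build_context(self) -> str:
        """Combine both memories into text that could be given to the LLM."""
        prefs = "; ".join(f"{k}={v}" for k, v in sorted(self.long_term.items()))
        turns = "\n".join(f"{role}: {text}" for role, text in self.short_term)
        return f"Known preferences: {prefs}\n{turns}"


memory = AgentMemory(short_term_limit=5)
memory.save_preference("flight_time", "morning")
memory.remember_turn("user", "Book a flight to Lisbon on Friday")
print(memory.build_context())
```

Capping the short-term buffer keeps the context small, while the preference store is what lets the agent "know" you over time.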

- Planner/Executor Logic: The Brain’s Decision-Maker

Understanding your request is only half the job. The agent also needs to plan and execute tasks.

This is handled by the Planner/Executor Logic. It decides:

- What needs to be done?

- In what order?

- What tools or APIs should it use?

For instance, if you ask: “Schedule a meeting with John next week”, the planner figures out:

- Who is John?

- Your availability.

- Which calendar service to use?

Then, the executor makes the API calls to book that meeting.

This planning-execution loop is the heart of autonomous workflows.
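Here is a small sketch of that loop for the meeting example. It assumes the request has already been parsed into a dictionary; `CONTACTS`, `BUSY_SLOTS`, and `CANDIDATE_SLOTS` are made-up stand-ins for a contacts lookup and a calendar API, and in a real agent the plan itself would typically come from the LLM.

```python
# Hypothetical data standing in for real contact and calendar services.
CONTACTS = {"John": "john@example.com"}
BUSY_SLOTS = {"Mon 10:00", "Tue 10:00"}
CANDIDATE_SLOTS = ["Mon 10:00", "Tue 10:00", "Wed 10:00"]


def plan(request: dict) -> list:
    """Planner: decide what needs to be done and in what order."""
    if request["action"] == "schedule_meeting":
        return ["resolve_contact", "find_free_slot", "create_event"]
    return []


def execute(steps: list, request: dict) -> dict:
    """Executor: carry out each step, passing state from one to the next."""
    state = dict(request)
    for step in steps:
        if step == "resolve_contact":
            state["email"] = CONTACTS[state["person"]]
        elif step == "find_free_slot":
            state["slot"] = next(s for s in CANDIDATE_SLOTS if s not in BUSY_SLOTS)
        elif step == "create_event":
            state["event"] = f"Meeting with {state['email']} at {state['slot']}"
    return state


request = {"action": "schedule_meeting", "person": "John"}
result = execute(plan(request), request)
print(result["event"])
```

Keeping the plan as data (a list of step names) makes it easy to log, inspect, or ask the user to confirm before anything is executed.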

- API Tool Integration: Connecting with the Outside World

No agent works in isolation. It needs to connect to external tools and services to get real things done.

This could include:

- Calendar APIs (Google Calendar, Outlook)

- Email APIs (Gmail, Microsoft Exchange)

- Payment gateways

- IoT device controls (smart home apps)

The more APIs the agent can access, the broader its capabilities. In 2025, API integrations will be the key differentiator for powerful LLM agents in mobile apps.
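One common way to expose external services to an agent is a tool registry: each capability is registered under a name the planner can refer to. The sketch below is a minimal, assumed design; the tool names and functions are hypothetical and only return strings instead of calling real calendar or smart-home APIs.

```python
from typing import Callable, Dict

# Registry mapping tool names to the functions that implement them.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("calendar.create_event")
def create_event(title: str, when: str) -> str:
    return f"Created '{title}' at {when}"


@tool("lights.set")
def set_lights(room: str, on: bool) -> str:
    return f"{room} lights {'on' if on else 'off'}"


def call_tool(name: str, **kwargs) -> str:
    """Dispatch a tool call chosen by the agent, rejecting unknown tools."""
    if name not in TOOLS:
        return f"Unknown tool: {name}"
    return TOOLS[name](**kwargs)


print(call_tool("lights.set", room="Kitchen", on=False))
```

Adding a capability then means registering one more function, without touching the dispatch code.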

- Libraries & Frameworks: The Developer’s Toolbox

Building LLM Agents from scratch isn’t practical. That’s where specialized libraries and frameworks come in.

Some popular tools developers use:

- LangChain: For building advanced LLM-driven workflows.

- ReAct (Reasoning + Acting): A prompting pattern in which agents “think” step-by-step and call APIs as needed.

- AutoGPT: An agent that can plan and execute complex tasks autonomously.

- Assistants API (OpenAI): For structured agent workflows with memory and tool-calling abilities.

These tools provide ready-made components such as memory management, task planning, and tool integration, making it easier to build robust agents without reinventing the wheel.

Curious about running LLMs directly on mobile devices? Check out our guide on Running LLMs in Mobile Apps: Phi-3, Gemma, and Open Source Options.

Bringing It All Together

When combined, these components create a seamless system:

- The app captures the user input (text, voice, sensor).

- The LLM understands the request.

- The agent uses its memory and planner logic to figure out what to do.

- It integrates with external APIs to execute tasks.

- The user sees the result through the mobile interface, fast, intuitive, and personalized.

This architecture is what turns LLM agents in mobile apps from a fancy AI chatbot into a fully autonomous digital assistant.

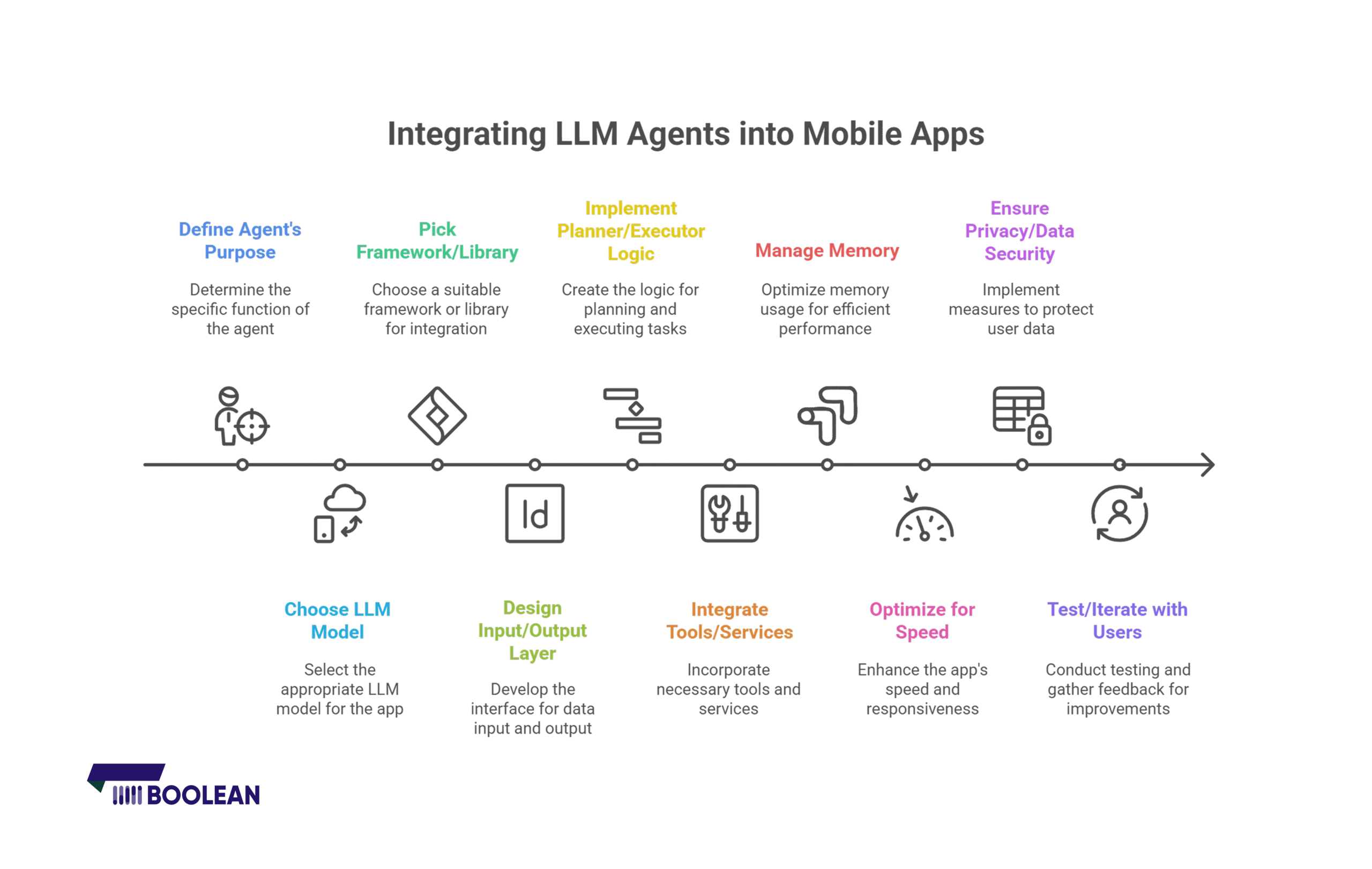

How to Integrate LLM Agents into Your Mobile App (Step-by-Step)

Okay, so you are excited about adding LLM agents to mobile apps, but where do you start?

Don’t worry. It is not as daunting as it seems. Let’s break it into simple steps that you can actually follow, even if this is your first AI-powered project.

Step 1: Know Exactly What You Want the Agent to Do

This may seem obvious, but it is the step that trips up most people.

Ask yourself:

- What problem should the agent solve?

- Is it going to chat with users?

- Automate a workflow (like booking appointments or managing tasks)?

- Or maybe something more creative like writing summaries?

Be specific. The clearer you are, the smoother everything else will be.

Step 2: Choose Your LLM Model (Cloud vs On-Device)

Now you need to figure out where the brain of your agent will live.

- If you want super powerful responses and don’t mind needing an internet connection, cloud-based models are great.

- If you want your agent to work offline, respond faster, and protect the user’s privacy, an on-device model is the way to go.

It is a trade-off between raw power on one side and speed and privacy on the other. Choose what suits your app best.

Step 3: Pick a Framework or Library

You do not need to build everything from scratch. There are tools (called frameworks) that make life easier.

- Want to build task workflows? Use a workflow builder.

- Need step-by-step reasoning? Go for a reasoning framework.

- Looking for a ready-made assistant structure? Choose an assistant API.

These frameworks handle a lot of the complex stuff for you.

Step 4: Design the Input & Output Layer

How will people interact with your agent?

- Will they type messages?

- Speak into their phone?

- Will it quietly monitor sensors like GPS or activity data?

This is super important because it defines how natural the experience feels. Make it frictionless.

Step 5: Implement Planner & Executor Logic

This is where your agent figures out what to do and how to do it.

- The planner breaks down user requests into steps.

- The executor actually carries out those steps, like calling APIs, sending messages, or updating the app interface.

Even a simple “Remind me to drink water every 2 hours” involves planning and execution.
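The water reminder can be sketched in a few lines. This is an assumed, rule-based version: `plan_reminder` uses a regular expression where a real agent would rely on the LLM, and `next_reminders` only computes times rather than scheduling actual notifications.

```python
import re
from datetime import datetime, timedelta


def plan_reminder(text: str):
    """Planner: turn the request into a task plus a repeat interval."""
    match = re.search(r"remind me to (.+?) every (\d+) hours?", text, re.IGNORECASE)
    if not match:
        return None
    return {"task": match.group(1), "interval": timedelta(hours=int(match.group(2)))}


def next_reminders(plan: dict, start: datetime, count: int) -> list:
    """Executor: compute when the next `count` reminders should fire."""
    return [start + plan["interval"] * i for i in range(1, count + 1)]


plan = plan_reminder("Remind me to drink water every 2 hours")
start = datetime(2025, 1, 1, 8, 0)
for when in next_reminders(plan, start, 3):
    print(when.strftime("%H:%M"), "-", plan["task"])
```

On a phone, the executor would hand these times to the platform's notification scheduler instead of printing them.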

Step 6: Connect to Tools & Services

No agent is an island. It needs to connect with other tools to get stuff done.

- Calendar apps

- Payment systems

- IoT devices

- Backend services you already use

Make sure these connections are smooth and reliable. This is how your agent becomes truly useful.

Step 7: Manage Memory (Short-term & Long-term)

An agent that forgets everything you say? Useless.

You’ll need to give it:

- Short-term memory for handling the current task.

- Long-term memory for remembering user preferences, past interactions, and habits.

This is what makes interactions feel personal and human-like. People love it when apps “just know” what they want.

Step 8: Optimize for Speed

Nobody likes waiting for an app to think.

You’ll need to:

- Optimize responses for real-time interaction.

- Use caching where possible.

- If you’re running AI on-device, make sure it’s lightweight and efficient.

Fast responses = happy users.
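Caching is the easiest of these to illustrate. The sketch below is one possible time-limited cache for model responses; the `ResponseCache` class is hypothetical, and it assumes that normalizing whitespace and letter case is enough to treat two prompts as the same, which will not hold for every app.

```python
import time


class ResponseCache:
    def __init__(self, ttl_seconds: float = 300.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock      # injectable so expiry can be tested
        self._store = {}        # normalized prompt -> (timestamp, answer)

    def get_or_compute(self, prompt: str, compute):
        """Return a fresh cached answer, or call `compute` and cache it."""
        key = " ".join(prompt.lower().split())
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        answer = compute(prompt)
        self._store[key] = (now, answer)
        return answer


def slow_model(prompt: str) -> str:
    """Stand-in for an expensive LLM call."""
    return f"Answer to: {prompt}"


cache = ResponseCache(ttl_seconds=60)
print(cache.get_or_compute("What is on my calendar today?", slow_model))
print(cache.get_or_compute("what is on my calendar   today?", slow_model))  # served from cache
```

The expiry matters: answers that depend on changing data (calendars, balances) should have a short time-to-live or skip the cache entirely.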

Step 9: Handle Privacy & Data Security

This is non-negotiable. Your agent will handle sensitive data at times. Make sure:

- User data is encrypted.

- You’re transparent about what data you collect.

- Users have control over their own data.

If possible, keep processing on the device for added privacy.
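When some data does have to leave the device, one complementary technique is to send as little as possible. The sketch below illustrates that idea under stated assumptions: `minimize_payload` and `redact_emails` are hypothetical helpers, the consent flags are assumed to come from the app's settings screen, and the email pattern is deliberately simple. It does not replace encryption in transit or at rest.

```python
import re

# Simple pattern for email-like strings; not a full address validator.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def minimize_payload(user_data: dict, consent: dict) -> dict:
    """Keep only the fields the user has explicitly allowed to be shared."""
    return {key: value for key, value in user_data.items() if consent.get(key, False)}


def redact_emails(text: str) -> str:
    """Mask email addresses in free text before it is sent to a server."""
    return EMAIL.sub("[email]", text)


user_data = {"location": "NYC", "steps": 9000, "notes": "Mail jane.doe@example.com today"}
consent = {"steps": True, "notes": True}   # location sharing not granted

payload = minimize_payload(user_data, consent)
payload["notes"] = redact_emails(payload["notes"])
print(payload)
```

Fields default to "not shared" unless the user opted in, which keeps the behavior aligned with giving users control over their own data.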

Step 10: Test & Iterate with Real Users

Once everything’s built, the next step is testing.

- Let real users try it out.

- Watch how they use it.

- Fix where the agent gets confused or stuck.

Agents get smarter with feedback. Keep improving based on what users need, not just what you think is cool.

Integrating LLM agents in mobile apps isn’t just about adding AI. It’s about creating experiences where apps feel alive, helpful, and truly understand users.

Start small. Keep it human. And before you know it, you’ll have an app that feels less like an app and more like a personal assistant in your pocket.

Key Use Cases of LLM Agents in Mobile Apps

Okay, so we have been talking about LLM agents in mobile apps, but you must be wondering what they can really do.

The truth is, LLM agents aren’t just chatbots. They’re evolving into real problem-solvers inside apps.

They’re becoming the brains behind tasks that used to require manual tapping, typing, and switching between screens.

Let’s look at some of the most exciting and practical use cases where LLM agents are already making a difference.

- Personalized Virtual Assistants

This is the classic use case, but now it is on steroids.

- Users can talk to the app as they would to a human.

- The agent remembers preferences, understands context, and offers proactive suggestions.

- It is not just “set a reminder.” It is “Based on your schedule, should I block time for your gym session?”

Mobile apps are moving from “command and control” to smart, conversational companions.

- Automating Complex Workflows

Imagine an app that doesn’t just respond to user actions, but completes multi-step tasks for them.

- Booking a trip? The agent finds flights, reserves hotels, and checks calendar availability.

- Need to process documents? The agent can summarize, classify, and send them where they need to go.

- Customer support queries? The agent can handle them automatically.

LLM agents take tedious, repetitive workflows and handle them in the background.

- Context-Aware Recommendations

This goes beyond “you might also like” suggestions.

- Agents analyze real-time context: location, recent activities, even the tone of the interaction.

- They suggest actions at the right time, such as offering a playlist when you hit the gym or help when you get lost.

This level of hyper-personalized assistance is becoming a major differentiator in mobile apps.

- Voice-Activated Command Centers

Think of apps turning into hands-free command hubs.

- Users can control smart home devices, manage tasks, or get information using natural voice commands.

- The agent understands complex queries like “Turn off the lights after my meeting ends” and sets up conditional automations.

Voice becomes more than a gimmick; it becomes the primary interface for many apps.

- Intelligent Data Companions

For data-heavy apps, such as finance, health, or productivity, LLM agents act as personal analysts.

- The user may ask, “How much did I spend on food last month?” or “Summarize my meeting notes.”

- The agent not only finds the data but also explains it in plain language and even suggests next steps.

It is like having a personal data scientist inside your phone.

- Adaptive Learning & Coaching

In education and language learning, LLM agents are transforming the way users learn and improve.

- They provide real-time feedback adapted to the user’s skill level.

- They can simulate conversations for language practice or provide personal fitness coaching based on user activity.

It feels like 1-on-1 tutoring, at scale.

- Onboarding and Guided Navigation

For apps with complex user interfaces, agents can act as intelligent guides.

- New users can just ask, “How do I set up my profile?” and the agent walks them through it.

- Agents can detect when a user gets stuck and provide step-by-step help.

This makes apps more accessible and reduces user frustration.

Why It Matters in 2025

As mobile apps become more feature-rich, users do not want to spend time figuring things out. They expect apps to understand them, anticipate their needs, and just work.

This is why LLM agents in mobile apps are not just a nice-to-have; they are essential for providing a smooth, delightful user experience.

Technical Challenges & Considerations

Adding LLM agents in mobile apps sounds super exciting. But once you dive into actually building them, things get… tricky.

It’s not just about plugging an AI model into your app and calling it a day. There are real challenges that developers (and product teams) need to navigate.

Don’t worry, though; knowing these hurdles upfront will save you a ton of frustration later.

Let’s break down the big ones.

- Cloud vs On-Device

One of the first decisions you’ll face:

- Should the AI processing happen in the cloud?

- Or should it run directly on the user’s device?

Here’s the thing:

- Cloud models are powerful, but they need a constant internet connection. Also, sending data back and forth can raise privacy concerns.

- On-device models are fast, work offline, and are better for privacy, but they are limited by the device’s hardware.

For some apps, a hybrid approach works best.

- Performance vs Battery Life

LLM agents are smart… but they can be resource hogs.

Running heavy computations on mobile devices can:

- Drain the battery fast.

- Heat the phone.

- Slow down other app functions.

So, optimizing models to be lightweight and efficient is a must. You want your app to feel intelligent, without killing the battery in 2 hours.

- Latency — Nobody Likes to Wait

When a user asks the agent a question, they expect an instant response.

But if your app has to make a round trip to a cloud server and back, that can introduce a noticeable delay.

You’ll need to optimize:

- Network requests.

- Local caching.

- Edge computing solutions (processing data closer to the user).

Fast, snappy responses = happy users.

- Data Privacy & Security

Let’s not sugarcoat it, LLM agents often deal with sensitive user data.

Whether it’s personal preferences, voice commands, or location info, you have a responsibility to:

- Encrypt all data transmissions.

- Be crystal clear about what data you’re collecting.

- Provide users with control over their data.

Privacy isn’t just a “nice-to-have” anymore. It’s a dealbreaker for many users.

- Managing Agent Memory

An LLM agent that forgets everything you tell it? Useless.

But storing and managing memory isn’t straightforward.

- Short-term memory is easy; it’s just about keeping track of the current conversation.

- Long-term memory (like remembering user preferences over weeks or months) requires careful design. Where do you store that data? How do you ensure it stays secure?

Plus, you have to balance memory depth with performance. You can’t have an agent sifting through mountains of data on every interaction.

- Tool & API Integration

An agent is only as good as the tools it can access.

You’ll need to integrate with:

- Third-party APIs.

- Device sensors.

- Backend services.

But not all tools are designed to work smoothly with LLM agents. You might need to build custom middleware or adapters to bridge the gaps. And those integrations need to be rock-solid; a flaky API can ruin the whole experience.
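One small piece of such middleware is a retry wrapper around unreliable calls. The sketch below assumes the integration signals transient failures with `ConnectionError` and uses exponential backoff between attempts; `call_with_retry` and its parameters are illustrative, not part of any specific SDK.

```python
import time


def call_with_retry(fn, attempts: int = 3, base_delay: float = 0.5, sleep=time.sleep):
    """Call `fn`, retrying on ConnectionError with exponential backoff."""
    last_error = None
    for attempt in range(attempts):
        try:
            return fn()
        except ConnectionError as err:
            last_error = err
            if attempt < attempts - 1:
                # Wait 0.5s, 1s, 2s, ... before the next attempt.
                sleep(base_delay * (2 ** attempt))
    raise last_error


# Simulated flaky API: fails twice, then succeeds.
state = {"calls": 0}

def flaky_calendar_api():
    state["calls"] += 1
    if state["calls"] < 3:
        raise ConnectionError("temporary failure")
    return "event created"


print(call_with_retry(flaky_calendar_api, base_delay=0.01))
```

Retries should be limited to operations that are safe to repeat; for actions such as payments, an idempotency mechanism on the server side is usually needed as well.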

- Keeping Up with AI Model Updates

The world of AI evolves at breakneck speed. New model versions, improved frameworks, and better optimization techniques keep rolling out.

The challenge?

- How do you keep your app up-to-date without constantly breaking things?

- How do you swap in a new model version without rebuilding half your app?

You’ll need a modular, flexible, composable architecture that lets you update components independently.
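In code, modularity usually means the rest of the app depends on a small interface rather than on a specific model. The sketch below uses Python's `typing.Protocol` for that interface; `TextModel`, `LocalModelV1`, and `CloudModelV2` are hypothetical names, and the model classes just echo the prompt instead of running inference.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only contract the agent relies on."""
    def generate(self, prompt: str) -> str: ...


class LocalModelV1:
    def generate(self, prompt: str) -> str:
        return f"[local-v1] {prompt}"


class CloudModelV2:
    def generate(self, prompt: str) -> str:
        return f"[cloud-v2] {prompt}"


class Agent:
    def __init__(self, model: TextModel):
        self.model = model

    def answer(self, question: str) -> str:
        return self.model.generate(question)


agent = Agent(LocalModelV1())
print(agent.answer("Summarize my notes"))

# Upgrading the model is a one-line change; Agent itself is untouched.
agent.model = CloudModelV2()
print(agent.answer("Summarize my notes"))
```

The same idea applies to memory stores and tool adapters: each sits behind its own narrow interface so it can be replaced independently.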

- Cost Management

Running LLMs (especially in the cloud) isn’t free. In fact, it can get expensive very quickly as user numbers grow.

You’ll need to:

- Optimize usage (process only what’s necessary).

- Consider using smaller, task-specific models.

- Explore edge AI options to offload computation from the cloud.

If you want your app to scale sustainably, keeping costs under control is essential.
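A rough back-of-the-envelope estimate helps show how quickly cloud costs grow. All numbers below are invented for illustration, including the per-token price; actual pricing varies by provider and model.

```python
def estimate_monthly_cost(users, requests_per_day, tokens_per_request,
                          price_per_1k_tokens, cloud_share=1.0, days=30):
    """Estimate monthly cloud spend in dollars.

    cloud_share is the fraction of requests sent to the cloud;
    the rest are assumed to run on-device at no per-request cost.
    """
    cloud_requests = users * requests_per_day * days * cloud_share
    total_tokens = cloud_requests * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical app: 10,000 users, 5 requests a day, 800 tokens per request.
all_cloud = estimate_monthly_cost(10_000, 5, 800, price_per_1k_tokens=0.002)
hybrid = estimate_monthly_cost(10_000, 5, 800, price_per_1k_tokens=0.002, cloud_share=0.4)

print(f"All cloud: ${all_cloud:,.0f} per month")
print(f"Hybrid (40% cloud): ${hybrid:,.0f} per month")
```

With these assumed inputs, the all-cloud setup comes to about $2,400 a month, and routing 60% of requests on-device brings it down to about $960.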

- User Expectations

This one’s subtle but important.

Users will expect your LLM agent to “just know everything.” But AI has its limits.

- There will be moments where the agent messes up.

- Or misunderstands a request.

- Or needs clarification.

It is important to design fallback strategies, handle errors gracefully, and keep the user informed. The goal is to maintain trust, even when the AI does not get it right on the first attempt.
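A simple way to handle the three situations above is to branch on how confident the agent is in its interpretation. The sketch below assumes the parsing step returns an `intent` name and a `confidence` score between 0 and 1; both fields, the `respond` function, and the 0.7 threshold are illustrative choices.

```python
def respond(parsed: dict, min_confidence: float = 0.7) -> str:
    """Pick a reply: act, ask for confirmation, or fall back gracefully."""
    intent = parsed.get("intent")
    confidence = parsed.get("confidence", 0.0)

    if intent is None:
        # The agent could not map the request to anything it supports.
        return "Sorry, I can't help with that yet. You can still do it from the main menu."

    action = intent.replace("_", " ")
    if confidence < min_confidence:
        # Unsure: confirm with the user instead of guessing.
        return f"Just to confirm: do you want me to {action}?"

    return f"OK, starting: {action}."


print(respond({"intent": "book_table", "confidence": 0.92}))
print(respond({"intent": "cancel_subscription", "confidence": 0.45}))
print(respond({}))
```

Asking before acting on low-confidence requests costs one extra tap, but it avoids the kind of wrong action that erodes user trust.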

Meet Boolean Inc.: Simplifying LLM Agents for Mobile Apps

If you’ve been exploring the world of AI agents and mobile apps, you’ve probably come across Boolean Inc.

They’re one of the rising stars in the space, focusing on making LLM agents in mobile apps easier and faster to deploy.

What makes them interesting? They’re not just building AI tools for big tech companies. Boolean Inc. is on a mission to democratize AI agents, offering a framework and SDK that small app teams can also plug into their products.

Their focus is on agent autonomy, low-latency on-device inference, and seamless API orchestration, without burdening developers with complex infrastructure setup.

In short, if you want to experiment with LLM agents in your mobile app but do not want to start from scratch, then Boolean Inc. is definitely a name worth putting on your radar.

Conclusion

LLM agents in mobile apps are no longer just a futuristic concept; they’re becoming a must-have for apps that want to stay relevant.

From automating workflows to personalizing the user experience, these agents are changing how apps interact with people.

Yes, there are technical obstacles. But the payoff is big: smarter apps, happier users, and a real competitive edge.

If you are creating mobile apps, now is the time to start thinking about how LLM agents can work for you, not in five years, but today.

FAQs

- What are LLM Agents in Mobile Apps?

They’re AI-powered assistants built into mobile apps. These agents understand language, plan tasks, call APIs, and deliver responses, all inside your app. They feel like a helpful companion who just “gets” what you need.

- Can LLM Agents run on my device without internet?

Yes! As of 2025, smaller, optimized models can run entirely on your phone. This means ultra-fast responses, better privacy, and full offline capabilities. It may not match cloud power, but it’s impressively capable.

- Why do I need agent memory?

Memory helps agents remember context, like your preferences or ongoing tasks. Short-term memory handles the current conversation, while long-term memory learns your likes, habits, and routines for personalized suggestions.

- Which one is better, cloud-based or on-device agents?

It depends on your priorities.

- Cloud-based agents offer more powerful responses but need internet and can raise privacy concerns.

- On-device agents are fast, private, and offline, but may be less capable.

- Most successful apps use a hybrid approach tailored to real-world needs.

- Are LLM Agents secure and privacy-friendly?

They can be. Secure design means encrypting data, limiting data collection, and letting users control what’s stored. Running AI locally (on-device) adds privacy, as user data never leaves the phone.