Introduction

AI is showing up everywhere.

In fitness trackers. In home security. In traffic systems. And definitely in your phone. But here’s the real question if you’re building something smart:

Should your app run on the device or rely on the cloud?

That’s the core of the edge AI vs cloud AI discussion. It’s not just a technical decision; it affects speed, privacy, cost, and how your users experience your product.

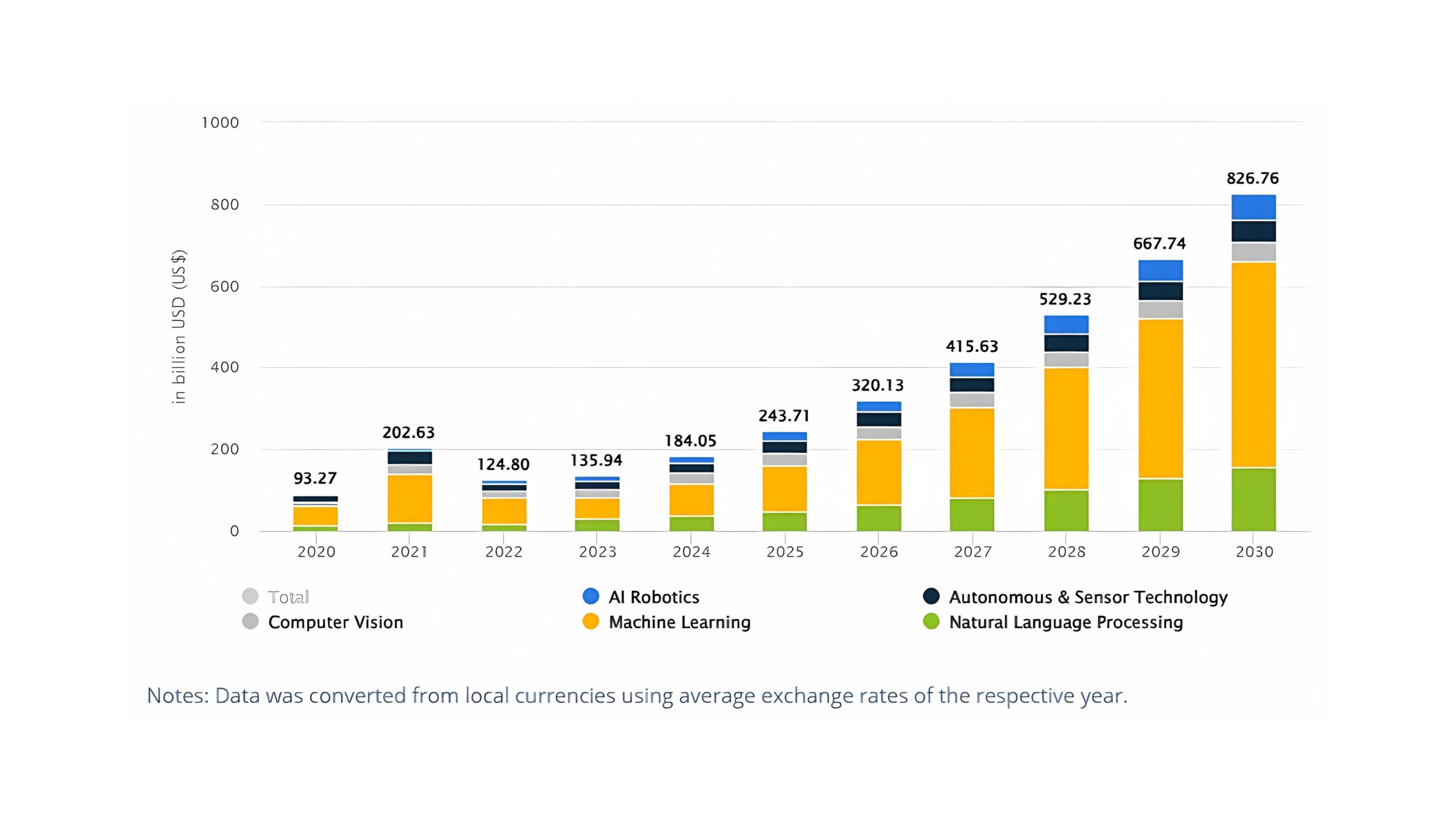

Let’s pause on some numbers. The artificial intelligence market is projected to reach around $244.22 billion in 2025, and it’s just getting started.

By 2031, we’re looking at $1.01 trillion, growing at 26.6% per year.

The U.S. alone is expected to hit nearly $74 billion in 2025. That’s a lot of apps, devices, and decisions about where AI should run.

Some apps need answers right now. Think real-time AI, like autonomous drones or factory sensors.

In those cases, on-device inference through edge AI can be a smart move. It offers lower latency, better privacy, and fewer network dependencies, which are some of the biggest edge AI benefits.

Others handle huge amounts of data or rely on deep learning models that are just too big to fit on a device.

That’s where cloud inference, or cloud-based AI, often shines. You get access to powerful resources and more flexibility. But there’s a trade-off, especially when latency vs throughput becomes part of the conversation.

The truth is, choosing between edge and cloud AI isn’t black and white. You’ll need to weigh edge AI performance, AI inference optimization, scalable AI inference, and security concerns like edge AI security versus cloud AI security.

We’ll walk through all of it, clearly.

You’ll learn what AI inference is, how edge AI frameworks compare to cloud platforms, what the common edge AI challenges are, and how your AI deployment strategy can affect the success of your app.

Let’s make this choice feel less like a gamble and more like a confident step forward.

What Is AI Inference?

Let’s break this down without getting too technical.

AI inference is the part where your model actually does something.

It’s the moment your app takes what it learned during training and uses it to make a decision, like detecting an object in a photo or predicting what a user might type next.
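To make that concrete, here is a minimal Python sketch of inference, assuming a tiny logistic-regression model whose weights (`WEIGHTS` and `BIAS`, both hypothetical) were already produced by training. Inference is just applying those fixed numbers to new input; nothing is learned at this step.

```python
import math

# Hypothetical weights produced earlier by training. In a real app these
# would be loaded from a model file shipped with the app or stored on a server.
WEIGHTS = [0.8, -1.2, 0.5]
BIAS = -0.1


def predict(features: list[float]) -> float:
    """Run inference: combine the input with the learned weights."""
    score = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-score))  # sigmoid maps the score to (0, 1)


probability = predict([1.0, 0.2, 0.7])
print(f"Probability of the positive class: {probability:.2f}")
```

Real models have millions of parameters instead of three, but the shape of the work is the same. Where a function like `predict` runs, on the device or on a server, is exactly the edge vs cloud question.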

You’ve got two main ways to run inference: on the cloud, or right on the device using edge AI.

On-device inference means the app runs the AI locally. No internet? No problem. It works offline, responds faster, and keeps sensitive data from ever leaving the device.

That’s one of the biggest edge AI benefits: less delay, better privacy, and smoother real-time experiences. This is where edge AI latency and edge AI performance really stand out.

Now, on the flip side, there’s cloud inference. Your app sends data to a powerful remote server, which runs the model and sends the answer back. It works very well when you’re dealing with large models or a large number of users.

Cloud-based AI usually wins when it comes to throughput, or how much work the system can handle at once.

That leads us to the classic latency vs throughput question. Want lightning-fast response times? Edge might be your best friend. Need to process huge amounts of data reliably and at scale? The cloud has your back.

It’s not always one or the other, though. A lot of developers use both, running basic AI on the device and handing complex tasks over to the cloud. This hybrid AI deployment strategy can give you the best of both worlds.

Bottom line: inference is where the magic happens. But where it happens, edge or cloud, can make a big difference in how your app feels, performs, and scales.

What Is Real-Time Edge AI?

Real-time means fast. Not “a few seconds later” fast, but “right now” fast.

Real-time AI is all about getting responses immediately, or as close to that as possible.

Whether it’s a phone unlocking with your face, a self-checkout kiosk tracking motion, or a wearable giving health alerts, people expect things to happen instantly. That’s where edge AI becomes a major player.

Instead of sending data to a remote server and waiting for it to respond, edge AI processes the data right on the device, no round trips, no delays. This is a big deal in the ongoing edge AI vs cloud AI conversation.

With edge AI, latency is drastically reduced. You don’t have to rely on a stable internet connection. Things just work, fast and locally.

And that speed isn’t just a luxury. In many cases, it’s the difference between success and failure. A delay in detecting an object in a self-driving car or a heartbeat irregularity on a smartwatch? That’s not acceptable. Low edge AI latency is critical.

But speed isn’t the only reason developers turn to edge.

Edge AI benefits also include better privacy (since data doesn’t leave the device), reduced bandwidth use, and improved reliability in low-connectivity areas.

Plus, depending on your app’s scale, edge can be more cost-efficient long term.

That said, there are trade-offs.

Edge AI performance depends on the hardware, and not every device is created equal. You’re working with limited memory, battery, and processing power. These are just some of the edge AI challenges teams run into, especially when models are large or when tasks are complex.

Then there’s security. While edge AI security gives you better control over local data, it can also mean more responsibility.

You need to make sure devices stay updated and protected, something that’s handled a bit differently compared to cloud AI security, where everything is managed centrally.

Choosing the right tools helps. There are a growing number of edge AI frameworks built to make on-device AI faster, smaller, and easier to deploy.

And if you’re curious how this plays out in real projects, check out this related post:

👉 Real-Time Edge AI in Mobile Apps: Frameworks and Use Cases

What Is Cloud AI Inference?

Sometimes, running AI on the device just isn’t enough. That’s where cloud AI inference comes in.

Instead of running the model on the phone or device, cloud-based AI sends the data to powerful servers.

Those servers run the model, return a result, and your app responds. It’s a common approach for apps that need large models or support lots of users. Think of it as offloading the heavy lifting.

In the edge AI vs cloud AI discussion, cloud wins on scalability. You can handle more users, update models easily, and work with more complex tasks.

This is why it’s often used in AI deployment strategies, especially in systems built to grow fast.

Of course, there are trade-offs. Latency is usually higher. If your app needs a real-time response, waiting for a round trip to the server may be too slow. This is where edge vs cloud AI becomes a balancing act.
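One way to reason about this is a simple latency budget. The sketch below, using illustrative numbers rather than measurements, adds up network round trip, upload time, and model compute for each option and compares the total with the roughly 33 ms available per frame at 30 fps. `LatencyBudget` is a hypothetical helper, not part of any framework.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    round_trip_ms: float  # network round trip to the server and back
    upload_ms: float      # time to send the input (e.g. a camera frame)
    compute_ms: float     # time spent running the model

    def total_ms(self) -> float:
        return self.round_trip_ms + self.upload_ms + self.compute_ms


# Illustrative numbers only: measure your own devices, servers, and networks.
edge = LatencyBudget(round_trip_ms=0, upload_ms=0, compute_ms=25)    # slower chip, no network
cloud = LatencyBudget(round_trip_ms=80, upload_ms=40, compute_ms=8)  # fast GPU, but far away

frame_budget_ms = 1000 / 30  # about 33 ms per frame at 30 fps
for name, budget in (("edge", edge), ("cloud", cloud)):
    fits = budget.total_ms() <= frame_budget_ms
    print(f"{name}: {budget.total_ms():.0f} ms total, fits a 30 fps frame: {fits}")
```

With these assumptions the server computes faster, yet the cloud path still misses the budget because the network dominates the total.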

To improve cloud inference performance, developers often focus on AI inference optimization: making models smaller, faster, and more efficient.

For teams working on AI in software development or using a mobile AI framework, this kind of optimization is key to keeping the experience smooth.

And while cloud AI security is strong, it still requires careful setup. Just like edge, you need to protect user data and ensure your systems stay secure.

Bottom line: Cloud inference is powerful and flexible. It’s a great fit when you need scalable AI inference, but not always ideal if speed is your top concern.

Key Differences Between Edge AI and Cloud AI

Choosing between edge AI and cloud AI isn’t about which one is better. It’s about which one fits your app’s needs.

Some apps demand speed and privacy. Others need power and scalability. This is where understanding the key differences really helps.

Here’s a quick breakdown:

| Feature | Edge AI | Cloud AI |
|---|---|---|
| Latency | Very low; data is processed on-device | Higher, because data travels to the server and back |
| Throughput | Limited by device hardware | High; can handle large volumes easily |
| Privacy | Strong; data stays on the device | Depends; data is sent to the cloud |
| Scalability | Limited to device capabilities | Highly scalable with server resources |
| Connectivity | Works offline or with weak signals | Requires stable internet |
| AI Model Size | Smaller, optimized models | Can use large and complex models |
| Updates & Maintenance | Harder to update once deployed | Easier to manage centrally |
| Security Focus | Local threats, device-level edge AI security | Network and server-side cloud AI security |
| Deployment Use Case | Great for real-time AI, fast response | Ideal for deep learning, scalable AI inference |

In many cases, developers use both, placing simple tasks on the edge while offloading heavier work to the cloud. That mix can offer the best of both worlds.

Latency vs Throughput: What Matters Most for Your App?

Let’s keep it simple.

Latency is about how fast your app reacts.

Throughput is about how much it can handle at once.

If your app needs to feel instant, like detecting a face, triggering an alert, or responding to a tap, low latency is critical.

Users notice even small delays. This is where edge AI really helps. It keeps things fast and close.

But if your app deals with big data or needs to process tons of input at once, say, from thousands of users, then throughput becomes more important. That’s where cloud AI tends to do better.
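If you want numbers instead of guesses, you can measure both on your own model. This minimal sketch times a stand-in function (`fake_model`, hypothetical; swap in your real inference call) and reports median and 95th-percentile latency plus throughput, using only Python’s standard library.

```python
import statistics
import time


def fake_model(x: float) -> float:
    """Stand-in for a real inference call; replace with your own model."""
    return sum(i * x for i in range(10_000))


def measure(model, inputs: list[float]) -> dict[str, float]:
    """Send requests one at a time and record per-request latency."""
    latencies_ms = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        model(x)
        latencies_ms.append((time.perf_counter() - t0) * 1000)
    elapsed_s = time.perf_counter() - start
    return {
        "p50_latency_ms": statistics.median(latencies_ms),
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        "p95_latency_ms": statistics.quantiles(latencies_ms, n=20)[-1],
        "throughput_per_s": len(inputs) / elapsed_s,
    }


results = measure(fake_model, [float(i) for i in range(200)])
for metric, value in results.items():
    print(f"{metric}: {value:.2f}")
```

When requests run one at a time like this, throughput is roughly the inverse of latency. Servers raise throughput by running requests in parallel or in batches, which is where the cloud’s extra hardware pays off.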

So, what matters more to you: a quick reaction, or handling a high load?

The answer usually depends on what your users expect and what your app actually does.

There’s no one-size-fits-all answer, just what works best for your goals.

Edge AI Benefits & Challenges

So you’re thinking about edge AI for your app. It’s fast, it’s private, and it keeps things local. Sounds great, right?

It is, but it’s not all smooth sailing. Like anything worth building, edge AI comes with real strengths and some tricky bits you’ll want to plan for.

Let’s break it down.

Edge AI Benefits

- Speed

When everything runs on the device, your app can react in real time, with no waiting on a server. That means a smooth experience, whether you’re scanning a face, tracking gestures, or analyzing voice commands.

- Stronger Privacy

Data stays local. That’s a big deal. Users are more aware of privacy than ever, and edge AI helps you meet those expectations.

No constant uploads to the cloud, no worries about data sitting on someone else’s server. It’s one reason developers looking for safer, real-time AI workflows choose edge.

- Work Without Internet

No Wi-Fi? Spotty mobile signal? Doesn’t matter. Since edge AI doesn’t rely on the cloud, your app can still function offline. That’s a big plus for wearables, remote areas, or mobile apps that need to be reliable in unpredictable conditions.

- Less Bandwidth, Lower Costs

Cloud traffic adds up. With on-device inference, your app doesn’t constantly upload or download data. That saves you bandwidth and helps keep infrastructure costs in check, especially helpful for large-scale or global rollouts.
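The savings are easy to estimate. This back-of-the-envelope sketch assumes a camera feature that analyzes 30 frames per second for 10 minutes a day; every size in it is an assumption to replace with your own. Cloud inference uploads each frame, while edge inference uploads only a small result once per second.

```python
# Rough daily upload per user for a camera feature. All sizes are assumptions.
FRAME_KB = 100            # one compressed camera frame
FPS = 30                  # frames analyzed per second
RESULT_BYTES = 200        # small JSON result, e.g. a label and a confidence
ACTIVE_SECONDS = 10 * 60  # 10 minutes of use per day

# Cloud inference: every frame is uploaded for the server to analyze.
cloud_mb = FRAME_KB * FPS * ACTIVE_SECONDS / 1024
# Edge inference: frames stay on the device; only one result per second is sent.
edge_mb = RESULT_BYTES * ACTIVE_SECONDS / (1024 * 1024)

print(f"cloud inference: ~{cloud_mb:,.0f} MB per user per day")
print(f"edge inference:  ~{edge_mb:.2f} MB per user per day")
```

Under these assumptions the gap is more than four orders of magnitude, so even rough numbers are usually enough to tell whether bandwidth will matter for your feature.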

- Improved Control

With edge AI, you’re not just pushing everything to a server. You get tighter control over how models perform, how updates are rolled out, and how your app behaves across devices.

Edge AI Challenges

- Limited Device Power

A smartphone isn’t a server. You’re working with limited CPU, memory, and battery. Running a complex model on-device takes careful design. That’s why AI inference optimization matters so much in edge environments.
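Quantization is one common AI inference optimization for edge devices: storing weights as 8-bit integers instead of 32-bit floats cuts their size by roughly 4x. The sketch below shows simple symmetric post-training quantization in plain Python. It is a simplification; real toolchains (for example, TensorFlow Lite or PyTorch quantization) add calibration data, per-channel scales, and integer kernels.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric post-training quantization with a single scale factor."""
    max_abs = max(abs(w) for w in weights) or 1.0  # guard against all-zero weights
    scale = max_abs / 127  # the largest weight maps to +/-127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights for comparison."""
    return [v * scale for v in q]


weights = [0.42, -1.37, 0.05, 0.91, -0.66]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print(f"storage: {len(weights) * 4} bytes as float32 vs {len(q)} bytes as int8")
print(f"max rounding error: {max_error:.4f} (at most scale / 2 = {scale / 2:.4f})")
```

The rounding error per weight is bounded by half the scale, which is why quantized models often lose only a little accuracy. Whether that is acceptable for your model is something to test, not assume.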

- Device Fragmentation

Not every user has the same phone or hardware setup. Some have dedicated NPUs, some don’t.

This kind of fragmentation can make development and testing feel messy. The right edge AI frameworks can help, but it’s still something to plan for.
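One way to cope with fragmentation is to ship several model variants and pick one at runtime based on what the device reports. This sketch uses hypothetical variant names and thresholds (`detector_fp16_npu`, 4096 MB of RAM, and so on); how you actually detect an NPU or available memory depends on your platform and framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceInfo:
    ram_mb: int
    has_npu: bool


# Hypothetical model variants, ordered from most to least demanding.
# Each entry pairs a model name with a check for whether the device can run it.
VARIANTS = [
    ("detector_fp16_npu", lambda d: d.has_npu and d.ram_mb >= 4096),
    ("detector_int8", lambda d: d.ram_mb >= 2048),
    ("detector_int8_small", lambda d: True),  # fallback that should run anywhere
]


def pick_variant(device: DeviceInfo) -> str:
    """Return the most capable variant this device supports."""
    for name, supported in VARIANTS:
        if supported(device):
            return name
    raise RuntimeError("no compatible model variant")


print(pick_variant(DeviceInfo(ram_mb=8192, has_npu=True)))
print(pick_variant(DeviceInfo(ram_mb=3072, has_npu=False)))
print(pick_variant(DeviceInfo(ram_mb=1024, has_npu=False)))
```

Ordering the list from most to least demanding keeps the rule simple: the first variant that fits wins, and the last entry guarantees every device gets something.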

- Updates Take More Effort

In the cloud, you tweak a model, and everyone gets the update. With edge, you’re often pushing updates to devices manually or through app stores. That adds friction to your AI deployment strategy and can slow you down.

- Security’s on You

Sure, keeping data local helps with privacy. But it also means you need to think hard about edge AI security. Devices can be lost, tampered with, or left unpatched. Strong encryption, smart permissions, and safe update processes are all key.
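For the “safe update processes” part, a minimal safeguard is to verify a downloaded model before swapping it in. This sketch assumes the expected SHA-256 hash comes from an update manifest you already trust (for example, one that is itself signed) and keeps the old model if the check fails. A hash alone does not prove who published the file; it only detects tampering or corruption relative to that trusted value.

```python
import hashlib
import hmac


def verify_model(model_bytes: bytes, expected_sha256: str) -> bool:
    """Check a downloaded model file against the hash published for it."""
    actual = hashlib.sha256(model_bytes).hexdigest()
    # compare_digest is designed to resist timing attacks on the comparison
    return hmac.compare_digest(actual, expected_sha256)


def install_update(current: bytes, downloaded: bytes, expected_sha256: str) -> bytes:
    """Swap in the new model only if it verifies; otherwise keep the old one."""
    if verify_model(downloaded, expected_sha256):
        return downloaded
    return current


old_model = b"model-v1"
new_model = b"model-v2"
# In practice this value comes from a trusted (e.g. signed) update manifest.
published_hash = hashlib.sha256(new_model).hexdigest()

print(install_update(old_model, new_model, published_hash))    # new model accepted
print(install_update(old_model, b"tampered", published_hash))  # old model kept
```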

- Balancing Performance & Battery

Your model might run beautifully, but not if it kills the battery in two hours. Balancing performance with efficiency is one of the biggest edge AI challenges, especially in mobile apps and wearables.
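Whether a model drains the battery in two hours comes down to simple arithmetic: energy per inference times how often you run it. Every constant below is an assumption; measure energy per inference on your target hardware.

```python
# Back-of-the-envelope battery estimate. Every number here is an assumption.
BATTERY_WH = 15.0             # roughly a modern phone battery
ENERGY_PER_INFERENCE_J = 0.5  # energy for one model run on the target chip
INFERENCES_PER_SECOND = 10    # how often the feature calls the model

# Joules per second is watts; watt-hours divided by watts gives hours.
model_power_w = ENERGY_PER_INFERENCE_J * INFERENCES_PER_SECOND
hours_on_model_alone = BATTERY_WH / model_power_w

print(f"model draws {model_power_w:.1f} W; battery lasts ~{hours_on_model_alone:.1f} h")
```

Ignoring the screen, radio, and everything else, the model alone would empty the battery in about 3 hours here. Halving either the inference rate or the energy per inference doubles that.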

Cloud AI Benefits & Challenges

Cloud AI sounds like the easy choice, right? Lots of power, easy updates, global scale. And in many cases, it really is a smart way to go, especially when you’re building something big or complex.

But it’s not without its trade-offs. Let’s walk through both sides.

Cloud AI Benefits

- Powerful Processing

With cloud-based AI, you’re tapping into serious computing power. That means you can run large, complex models that simply won’t fit on a phone or edge device. It’s great for apps that rely on deep learning, recommendation engines, or natural language processing.

- Easy to Update and Improve

Need to tweak your model? Just update it in the cloud, and all users get the latest version instantly. This makes AI deployment strategies more flexible and lets you iterate fast, something that’s harder to do with on-device inference.

- Handles Heavy Load Well

Cloud AI is built for scalable AI inference. It can manage high traffic, run multiple tasks in parallel, and grow as your user base grows. Throughput? No problem. It’s built to handle a lot.
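Part of how servers achieve high throughput is batching: grouping many requests into one model call so the fixed per-call overhead is shared. This sketch simulates that with an assumed cost model (20 ms per call plus 2 ms per request) instead of a real server.

```python
from collections import deque

FIXED_OVERHEAD_MS = 20  # assumed per-call cost (scheduling, data transfer, etc.)
PER_ITEM_MS = 2         # assumed marginal cost per request in a batch


def batch_cost_ms(batch_size: int) -> int:
    return FIXED_OVERHEAD_MS + PER_ITEM_MS * batch_size


def serve(pending: deque, max_batch: int) -> tuple[int, int]:
    """Drain the queue in batches; return (number of model calls, total time in ms)."""
    calls = total_ms = 0
    while pending:
        batch = [pending.popleft() for _ in range(min(max_batch, len(pending)))]
        total_ms += batch_cost_ms(len(batch))
        calls += 1
    return calls, total_ms


for max_batch in (1, 32):
    calls, total_ms = serve(deque(range(256)), max_batch)
    print(f"batch size {max_batch:>2}: {calls} calls, {256 / (total_ms / 1000):,.0f} requests/s")
```

With these assumptions, batches of 32 handle about 8x more requests per second, but each request now waits for its whole batch (84 ms instead of 22 ms). That is the latency vs throughput trade-off in miniature.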

- Integration with Other Tools

Cloud AI works nicely with your data pipelines, APIs, and analytics tools. If your app is part of a bigger system, like AI in software development or enterprise services, the cloud helps everything stay connected.

- Centralized Security & Monitoring

With the right setup, cloud AI security can be solid. You get central control, detailed logs, and real-time monitoring, which is especially useful for large teams and regulated industries.

Cloud AI Challenges

- Higher Latency

It takes time to send data to the cloud and wait for the response. For apps that need rapid reactions, such as real-time AI or live video, that delay can cause noticeable lag.

- Needs a Stable Connection

Cloud AI won’t work without the internet. If your users are offline or in areas with weak coverage, their experience will suffer. That’s where edge vs cloud AI becomes a real decision point.

- Data Privacy & Compliance Risks

You’re sending user data to external servers. Even with strong cloud AI security, this can raise red flags for privacy, especially in health, finance, or education apps. Encryption helps, but it’s something regulators and users are watching closely.

- Higher Operational Costs Over Time

Cloud compute and storage costs can add up fast, especially if you’re handling large volumes of AI inference every day. Cost control becomes part of your tech strategy.
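A quick projection helps keep that cost visible. This sketch assumes a flat placeholder price per 1,000 inferences and 50 inferences per user per day; real cloud pricing varies by provider, hardware, and model size, so plug in your own numbers.

```python
# Monthly cloud inference cost at different traffic levels.
# The price is a placeholder; check your provider's actual pricing.
PRICE_PER_1K_INFERENCES_USD = 0.40


def monthly_cost(daily_users: int, inferences_per_user_per_day: int, days: int = 30) -> float:
    total_inferences = daily_users * inferences_per_user_per_day * days
    return total_inferences / 1000 * PRICE_PER_1K_INFERENCES_USD


for users in (1_000, 100_000, 1_000_000):
    print(f"{users:>9,} daily users -> ${monthly_cost(users, 50):,.0f}/month")
```

Because the cost grows linearly with usage, moving frequent, simple inferences to the device is one lever for keeping the bill in check as your user base grows.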

- Not Always Ideal for Mobile or Lightweight Apps

Some mobile AI frameworks are built to take advantage of on-device capabilities. If your model is small or your app is meant to run offline, the cloud might be overkill.

Pros and Cons: Edge AI vs Cloud AI

Still uncertain whether Edge AI or Cloud AI fits your app better?

That’s completely normal. Each has its own strengths, and each comes with trade-offs. It really depends on what your app does and what your users expect from it.

Quick Comparison Table

| Aspect | Edge AI Pros 💡 | Edge AI Cons ⚠️ | Cloud AI Pros 💡 | Cloud AI Cons ⚠️ |
|---|---|---|---|---|
| Latency | Ultra-low, fast response | N/A | N/A | Higher due to data transfer; can feel laggy for real-time apps |
| Connectivity | Works offline or with a weak signal | Limited access to real-time cloud data | N/A | Needs internet; won’t work without a stable connection |
| Scalability | N/A | Local only, less scalable; hard to expand across many devices | Scales easily across users & regions | Cost grows with usage |
| Privacy | Keeps data on-device | Security depends on device safeguards | Centralized controls possible | Sends user data to external servers |
| Performance | Great for lightweight, real-time tasks | Limited by device hardware | Handles heavy, complex models | Needs optimization to avoid high cost |
| Update Flexibility | N/A | Harder to update once deployed; can lag if not maintained | Easy to roll out model changes | Updates affect all users simultaneously |
| Battery/Resources | N/A | Can drain device battery; needs smart model optimization | No battery worries on the client side | Higher backend load and costs |
| Deployment Speed | Fast on-device launch is possible | Slower updates across different hardware | Centralized control for quick iterations | Requires good backend infrastructure |

What This Means for You

- If your app depends on speed, privacy, and offline access, edge AI probably makes more sense, especially for mobile, wearables, or field tools.

- If you need power, flexibility, and large-scale optimization, cloud AI is tough to beat, especially for heavy processing, analytics, or fast model iteration.

And if you want both? That’s possible too. Many teams combine them, using edge for fast local tasks and cloud for the big-picture work behind the scenes.

AI Deployment Strategies: Edge, Cloud, or Hybrid?

Building with AI means you’ve got choices. Big ones.

Should your models run on the device, in the cloud, or somewhere in the middle?

There’s no one-size-fits-all answer here. Your strategy depends on what you’re building, who your users are, and how fast your app needs to think.

Let’s look at the main AI deployment paths, edge, cloud, and hybrid, and what each one brings to the table.

Edge AI: Local and Lightning Fast

With edge AI, your model runs directly on the user’s device, with no round trip to the server. That means faster reactions, greater privacy, and smoother offline experiences.

Best for:

- Real-time AI use cases (gesture recognition, on-device image processing, quick health feedback)

- Environments with poor or no connectivity

- Apps that prioritize low latency over high throughput

Why it works:

You get edge AI performance that feels instant. Plus, with on-device inference, there’s no need to send sensitive data elsewhere, a win for edge AI security and user trust.

But be aware:

You’ll face edge AI challenges like limited compute power, device fragmentation, and tougher updates. Choosing the right edge AI frameworks and doing good AI inference optimization will make or break your app here.

Example use case:

A mobile fitness app with a Multimodal UI in Mobile Apps setup, analyzing movement, voice, and biometrics on-device in real time.

Cloud AI: Big Power, Broad Reach

Cloud-based AI runs your model in the cloud. That opens the door to bigger, more complex tasks. You can update models centrally, analyze tons of data, and scale fast as your app grows.

Best for:

- Apps with complex logic or heavy AI models.

- Situations where data must be aggregated or cross-compared.

- Projects where AI inference needs to scale across millions of users.

Why it works:

You get flexible AI deployment strategies, access to powerful compute, and easy updates. For apps that rely on deep learning or evolving models, like AI chatbots or ChatGPT-powered apps, the cloud is often the better fit.

But keep in mind:

There’s often more latency, since data has to travel. Plus, sending personal data to the cloud can raise concerns around cloud AI security and compliance.

Example use case:

An enterprise analytics dashboard built with Composable Architecture, where AI helps interpret massive datasets across different departments.

Hybrid AI: A Smart Middle Ground

Can’t decide between edge vs cloud AI? You don’t always have to.

Hybrid AI combines the best of both: lightweight models run locally for instant feedback, while heavier tasks, like trend analysis or retraining, get offloaded to the cloud.
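A common pattern for this split is confidence-based routing: try the small on-device model first and escalate to the cloud only when it is unsure. The sketch below assumes hypothetical `local_model` and `cloud_model` callables that return a label and a confidence, plus a threshold of 0.8 that you would tune for your app. It also falls back to the local answer when the network is unavailable; which exceptions your networking library actually raises is something to check.

```python
from typing import Callable

Prediction = tuple[str, float]  # (label, confidence)


def hybrid_predict(
    x: str,
    local_model: Callable[[str], Prediction],
    cloud_model: Callable[[str], Prediction],
    threshold: float = 0.8,
) -> tuple[Prediction, str]:
    """Answer on-device when confident; escalate to the cloud otherwise."""
    local = local_model(x)
    if local[1] >= threshold:
        return local, "edge"
    try:
        return cloud_model(x), "cloud"
    except ConnectionError:
        # Offline or server unreachable: fall back to the local answer.
        return local, "edge-fallback"


# Hypothetical stand-ins for a small on-device model and a larger cloud model.
def tiny_local(text: str) -> Prediction:
    return ("menu", 0.95) if "menu" in text else ("unknown", 0.40)


def big_cloud(text: str) -> Prediction:
    return ("street sign", 0.97)


def offline_cloud(text: str) -> Prediction:
    raise ConnectionError("no network")


print(hybrid_predict("photo of a menu", tiny_local, big_cloud))
print(hybrid_predict("photo of a sign", tiny_local, big_cloud))
print(hybrid_predict("photo of a sign", tiny_local, offline_cloud))
```

Raising the threshold sends more traffic to the cloud for better answers; lowering it keeps more work on the device for speed and privacy.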

Best for:

- AI-powered apps that require both responsiveness and scalability.

- Features that vary in complexity (e.g., on-device filtering, cloud-based personalization).

- Teams that want the flexibility to shift work between device and cloud as user needs change.

Why it works:

It balances edge AI benefits with scalable AI inference in the cloud. This setup also helps with battery management, model updates, and security planning.

Real-world bonus:

Hybrid setups also support features like Zero-Trust Mobile Security, where sensitive decisions stay on-device but high-level analysis happens in secure cloud environments.

Example use case:

A travel app that uses edge AI for translating signs or menus offline, while syncing trip data and preferences to the cloud for smarter recommendations.

So, What’s Right for Your App?

Here’s a quick way to think about it:

- Choose edge AI if speed, privacy, or offline access are top priorities.

- Go with cloud inference if you need complex models, easy updates, or massive scale.

- Opt for a hybrid if your app has multiple layers, some that need to be fast and local, others that rely on cloud power.
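The same rules of thumb can be written down as a small checklist function. This is a hypothetical helper that mirrors the three bullets above, not a formal method; treat its answer as a starting point for discussion.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_realtime: bool       # e.g. camera, gesture, or safety features
    needs_offline: bool        # must work with poor or no connectivity
    sensitive_data: bool       # data you would rather not upload
    model_fits_on_device: bool
    needs_heavy_compute: bool  # large models, big datasets, or massive scale


def suggest_deployment(r: Requirements) -> str:
    """Rough mapping of the rules of thumb to edge, cloud, or hybrid."""
    wants_edge = r.needs_realtime or r.needs_offline or r.sensitive_data
    wants_cloud = r.needs_heavy_compute or not r.model_fits_on_device
    if wants_edge and wants_cloud:
        return "hybrid"
    if wants_edge:
        return "edge"
    # Assumption: with no edge-specific need, central updates make cloud the simpler default.
    return "cloud"


print(suggest_deployment(Requirements(True, True, False, True, False)))    # offline translator
print(suggest_deployment(Requirements(False, False, False, False, True)))  # analytics dashboard
print(suggest_deployment(Requirements(True, False, True, True, True)))     # travel app with sync
```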

No matter your path, the key is clarity: understand your users, your features, and what kind of performance matters.

And remember, you can always evolve. Start simple, test with users, and grow your architecture as your app matures.

Feeling Stuck Between Edge and Cloud AI?

That’s completely okay.

It’s not always easy to choose between edge AI and cloud AI.

Maybe your app requires rapid response time and big data crunching.

Maybe you’re weighing security and battery life, or just trying to future-proof what you’re building.

If you are uncertain or just want to talk through it, we are here for it.

At Boolean Inc., we help teams figure this stuff out all the time. Whether you’re building something new, upgrading what you’ve got, or just curious about your options, we’re happy to chat.

No hard sell, no pressure. Just clear, friendly advice based on what actually works.

Conclusion

There’s no universal winner in the edge AI vs cloud AI debate. It all comes down to what your app really needs and what matters most to your users.

If your app thrives on real-time AI, low power use, and privacy, edge AI can offer speed and control right on the device.

On the other hand, cloud-based AI gives you room to grow with more power, centralized updates, and better support for scalable AI inference.

And then there’s the middle path, combining both with a hybrid AI deployment strategy. It can help balance latency vs throughput, offer smart AI inference optimization, and adapt as your app evolves.

Whether you’re focused on edge AI performance, worried about edge AI latency, exploring AI inference, or comparing cloud AI security with edge AI security, remember: you don’t need to have it all figured out today.

Start with the needs you know. Test. Iterate. Improve.

And if you’re ever unsure, there are teams like Boolean Inc. ready to help.

FAQs

- What’s the difference between edge AI and cloud AI?

Edge AI runs on the device. Cloud AI runs on remote servers. One’s faster and local, the other’s more powerful and centralized.

- Is Edge AI better for real-time apps?

Yes, in most cases. If your app needs low latency, real-time AI, and fast responses, edge AI usually works better.

- Can I use both Edge and Cloud AI?

Absolutely. A hybrid AI deployment strategy lets you use on-device inference for speed and cloud inference for heavier tasks.

- What are the challenges of Edge AI?

You’ll need to handle limited device resources, updates, and edge AI security. But smart planning makes it doable.

- Which is more secure, Edge or Cloud?

Both have pros and cons. Edge AI keeps data local. Cloud AI allows centralized control. It depends on your app’s setup.