Introduction

Ever tried talking to your phone while tapping the screen… and the app just gets it?

That’s a multimodal app at work: it lets you interact in more than one way. Think voice, touch, and even vision.

From FaceTime-type apps that blend multiple inputs to location-based apps that respond to your surroundings, we’re seeing a revolution in how we interact with technology.

It’s no longer science fiction. It’s the new normal in mobile UX.

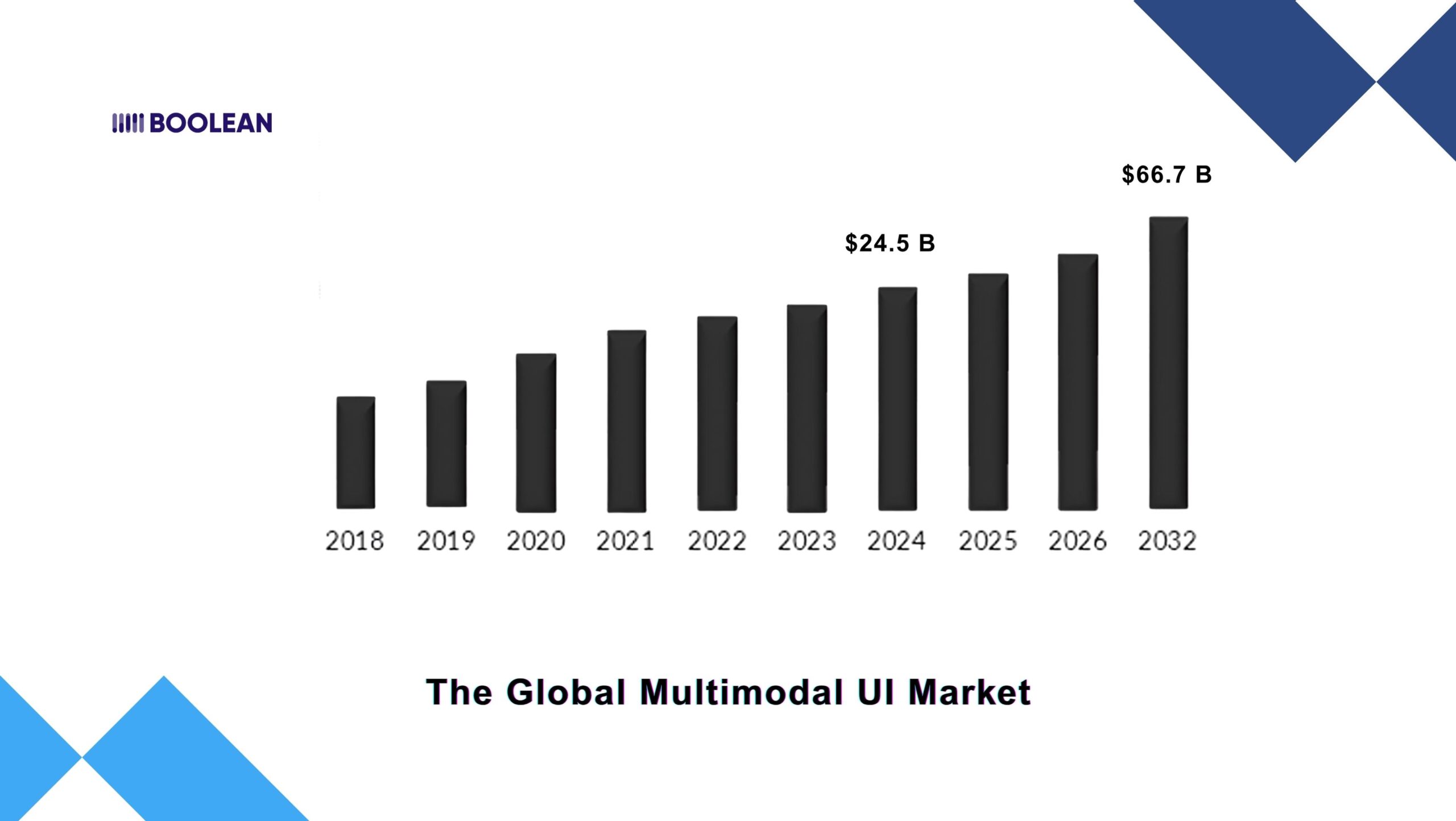

And guess what? This shift isn’t just a trend. It’s a booming market.

The global Multimodal UI market was valued at $24.5 billion in 2024, and it’s projected to skyrocket to $66.7 billion by 2032, growing at a CAGR of 18.2%. That’s massive.

This growth is driven by innovations in voice app development, with features like Evernote voice recognition becoming mainstream expectations.

Why the surge?

Because people want to interact with apps the way they interact with the world, naturally.

One moment you’re tapping a screen. Next, you’re saying “play my workout playlist” or letting the camera scan a QR code. Seamlessly switching between modes, without thinking twice.

This is where modern UX design is headed: toward experiences that feel more comfortable, more human, and more immersive.

Now we expect our phones to understand not only what we do, but also how we do it: through gestures, tone of voice, eye movements, and even facial expressions.

That’s multimodal UX at work.

It powers everything from hands-free voice apps and gesture-controlled games to camera-based shopping tools and screenless UI for wearables.

And it’s not just about fancy technology. It’s about building interfaces that adapt to you, not the other way around.

Whether you are creating the next big app or searching for a new UI trend, one thing is clear:

The future is not single-input. It’s smart input. It’s hybrid. It’s multimodal.

And it starts here.

What Is Multimodal UI?

Let’s break it down.

Multimodal UI (short for Multimodal User Interface) means giving users more than one way to interact with an app.

It’s not just tapping. It’s not just talking. It’s a mix of inputs, like voice, touch, and vision, working together in harmony.

Imagine this:

You open a mobile app by saying, “Start my workout.”

Then you swipe to select an activity.

And the smart camera checks your posture as you move.

That’s a multimodal app in action.

It’s the same principle behind on-demand technologies that use dynamic user interface design to adapt to user needs instantly.

It feels natural, right? That’s the point.

We don’t interact with the real world using one input. Sometimes we talk. Sometimes we gesture. Sometimes we just look.

A natural UI should work the same way. Modern picture recognition technology paired with wearable application development already creates experiences that feel truly futuristic.

One Interface, Many Ways to Interact

In a multimodal UX, users can:

- Tap on a touchscreen (mobile touch, gesture control).

- Speak commands using voice input (voice control, speech app, mobile voice).

- Use visual input like facial recognition or hand gestures (vision tech, camera input, visual UX).

The real magic happens when these modes work together. For example, you could:

- Say “Call Mom,” and then confirm the action with a tap.

- Use gesture control to scroll while your hands are messy.

- Let your smart camera detect motion and trigger in-app actions.
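Under the hood, a “Call Mom, then tap to confirm” flow usually means holding a spoken command as pending until a touch confirms it. Here’s a minimal Python sketch of that idea, not any platform’s actual API: the `ConfirmationFusion` class, its method names, and the five-second window are hypothetical, and a real app would pass in timestamps from a monotonic clock such as `time.monotonic()`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PendingAction:
    intent: str        # e.g. "call"
    target: str        # e.g. "Mom"
    created_at: float  # seconds, from a monotonic clock


class ConfirmationFusion:
    """Pairs a spoken command with a later touch confirmation (hypothetical)."""

    def __init__(self, window_s: float = 5.0) -> None:
        self.window_s = window_s  # assumed confirmation window
        self.pending: Optional[PendingAction] = None

    def on_voice(self, intent: str, target: str, now: float) -> str:
        # Hold the voice command instead of executing it immediately.
        self.pending = PendingAction(intent, target, now)
        return f"Confirm: {intent} {target}?"

    def on_tap_confirm(self, now: float) -> Optional[PendingAction]:
        # Consume the pending command; reject it if it has expired.
        action = self.pending
        self.pending = None
        if action is None or now - action.created_at > self.window_s:
            return None
        return action
```

Passing `now` explicitly keeps the logic easy to test, and expiring stale commands stops an old voice request from firing on an unrelated tap.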

The goal? To make app interaction as smooth and intuitive as possible.

Why It Matters

Multimodal apps aren’t just cooler. They’re smarter.

They respond to context. They offer flexibility. And they improve accessibility for users with different needs.

Someone who can’t speak can still tap.

Someone who can’t touch can still use voice.

Someone in a noisy environment might rely on gestures or visuals instead of sound.

That’s inclusive design. That’s the future of mobile UI.

And it’s not limited to niche use cases anymore. We’re seeing AI UI systems that learn how people prefer to interact and adjust in real time.

Think AI gestures, app feedback, and predictive behavior. These are the next-gen UI foundations already showing up in interactive UI and immersive app design.

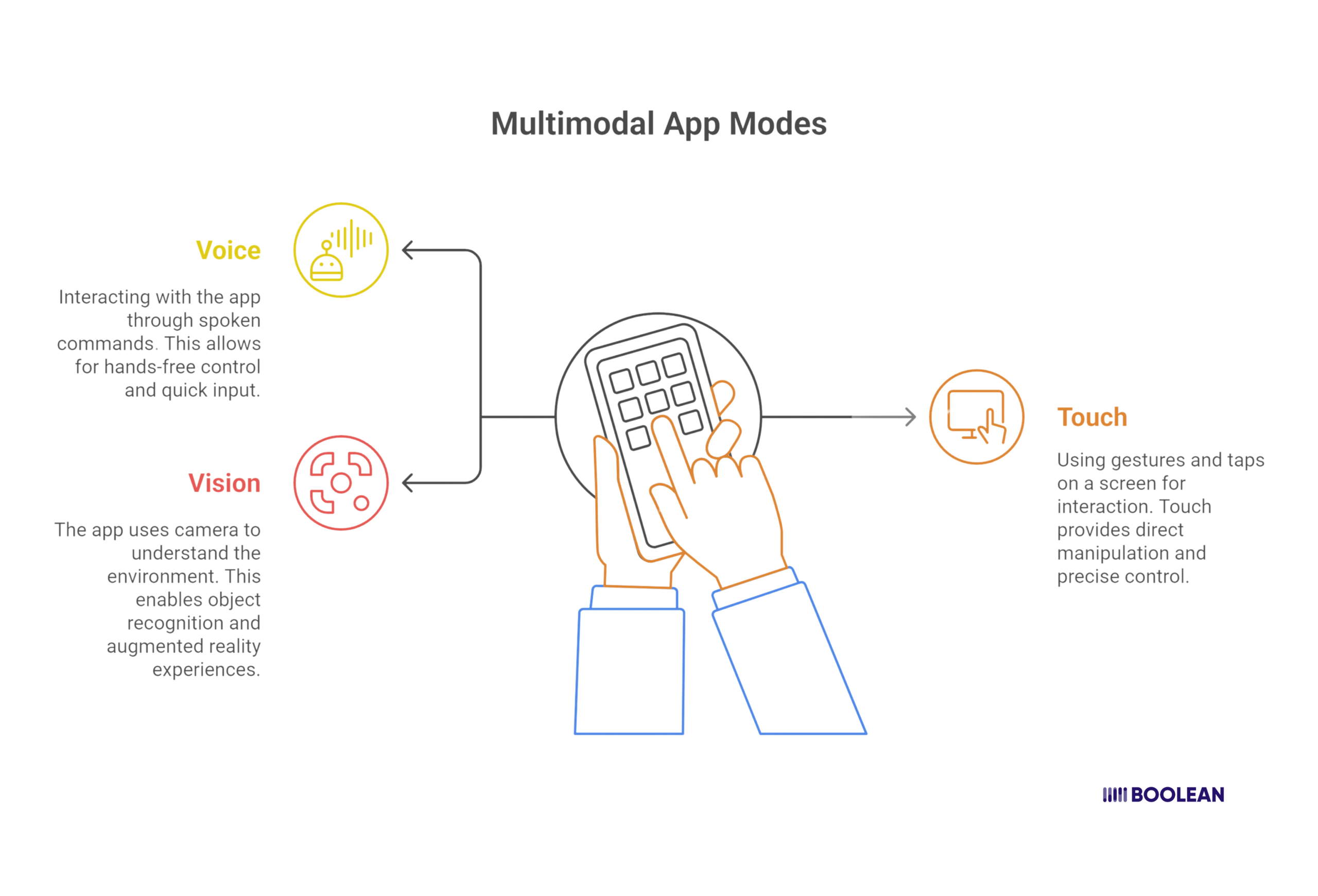

Core Modes of Multimodal Apps

When we talk about a multimodal app, we’re really talking about one thing: choice.

- The choice to speak instead of tap.

- To look instead of scroll.

- To gesture instead of click.

Multimodal UI has three main input types: voice, touch, and vision.

Each provides a unique way of interacting with a mobile app, and when combined, they create experiences that feel comfortable, smart, and really human.

Let’s take a quick look at each:

- Voice Input: Talking to Apps Like They’re People

Talking is the most natural thing we do.

That’s why voice input has become such a powerful part of the multimodal app experience. It’s quick, comfortable, and completely hands-free.

In today’s fast-paced world, that kind of convenience is gold.

Whether you’re sending a message, searching the web, or controlling smart devices, voice simply feels easy.

No tapping. No typing. Just say it, and your app responds.

From Novelty to Necessity

Not long ago, talking to your phone felt awkward. Now? Normal.

Voice apps and speech apps are everywhere: from virtual assistants like Siri and Google Assistant to specialized FaceTime-type apps with voice controls and location-based apps that respond to voice commands based on where you are.

With in-app commands in fitness trackers, productivity tools, and even shopping platforms, voice control is no longer just an accessibility feature. It’s a daily habit.

And the tech behind it has leveled up.

Thanks to AI input and smarter audio input systems, your device can understand speech with incredible accuracy. It knows the difference between “play jazz” and “play just the playlist.” That’s the power of AI UI working behind the scenes.

With voice UX leading the way, apps are becoming more adaptive. They respond not just to what you say, but how you say it.

When Voice Works Best

Voice input shines when your hands are full or your eyes are elsewhere.

Cooking? Driving? Holding a baby?

Just speak.

This is why hands-free apps are gaining momentum, especially as screenless UI and natural UI designs become more common.

Voice also helps users with different abilities interact effortlessly. That’s why voice app development has become crucial, with features like Evernote voice recognition setting new standards for accessibility.

It boosts mobile UX by offering an alternative to touch or visual modes. And when combined with other inputs, like touch input or camera input, it makes the entire app interface feel smarter.

Say “open camera,” then smile to take a photo. That’s voice plus vision. A perfect example of a hybrid UI.

Best Practices in Voice UX Design

Designing for voice isn’t the same as designing for screens. It requires empathy and clarity.

Here’s what great voice UX includes:

- Clear prompts and feedback (so users know the app is listening).

- Simple, natural language (avoid robotic commands).

- Flexibility for different accents, speeds, and tones.

- A graceful way to recover when the app doesn’t understand.
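One common way to build that graceful recovery is a confidence threshold: act when the speech engine is sure, reprompt when it isn’t, and hand over to touch after repeated misses. The sketch below assumes the recognizer returns a transcript plus a confidence score between 0 and 1 (many speech APIs expose one); the class names, the 0.6 threshold, and the retry limit are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RecognitionResult:
    transcript: str
    confidence: float  # 0.0 to 1.0, as reported by the speech engine (assumed)


class VoiceRecovery:
    """Decides whether to execute, reprompt, or fall back to touch."""

    def __init__(self, threshold: float = 0.6, max_retries: int = 2) -> None:
        self.threshold = threshold
        self.max_retries = max_retries
        self.failures = 0  # consecutive low-confidence results

    def handle(self, result: RecognitionResult) -> tuple[str, str]:
        if result.transcript and result.confidence >= self.threshold:
            self.failures = 0
            return ("execute", result.transcript)
        self.failures += 1
        if self.failures > self.max_retries:
            # Too many misses: stop asking and offer a touch alternative.
            self.failures = 0
            return ("fallback_touch", "Tap an option below instead.")
        return ("reprompt", "Sorry, I didn't catch that. Could you say it again?")
```

The fallback step matters as much as the threshold: it keeps a misheard user from getting stuck in a loop of “Sorry, could you repeat that?”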

Also, voice works best when paired with thoughtful UI animation and visual feedback, like a waveform or blinking icon that shows the app is actively listening.

That’s not just design. That’s trust.

What’s Next for Voice in Mobile Apps?

Voice is no longer the “future.” It’s already shaping the next-gen UI.

We’re seeing trends like:

- Multimodal tools that combine voice with AR UI and gesture.

- Smart interface designs that learn your speech patterns.

- App feedback systems that use voice tone to adjust responses.

- Visual control paired with voice to navigate complex interfaces.

Even the most immersive apps are adopting mobile voice features to enhance usability.

Whether it’s a vision UI tool for low-vision users or a visual app that responds to spoken commands, voice is connecting the dots.

Voice input is not just about convenience. It’s about freedom. And giving users more ways to be heard.

- Touch Input: Still the Core of Mobile Interaction

Let’s start with what we know best: touch.

Touch is the most familiar, most direct form of interaction. From the moment smartphones became mainstream, touch screens have been the foundation of every app experience.

But here’s the thing: touch input has evolved. It’s no longer just about tapping buttons. It’s swiping, pinching, holding, dragging, and even gesturing in mid-air.

Welcome to the world of mobile gestures and gesture control.
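Gesture frameworks handle this for you, but the core idea is simple: compare where a touch started and ended, and how long it took. This Python sketch classifies a single-finger touch as a tap, long press, drag, or directional swipe; the function name and every threshold (24 px of “slop”, 500 ms for a long press, 0.3 px/ms minimum swipe speed) are illustrative assumptions, not platform values.

```python
import math


def classify_touch(x0: float, y0: float, x1: float, y1: float,
                   duration_ms: float,
                   slop_px: float = 24.0,
                   long_press_ms: float = 500.0,
                   min_swipe_speed: float = 0.3) -> str:
    """Classify a touch from its start/end points (screen y grows downward)."""
    dx, dy = x1 - x0, y1 - y0
    distance = math.hypot(dx, dy)
    if distance < slop_px:
        # The finger barely moved: a tap or a long press, depending on time.
        return "long_press" if duration_ms >= long_press_ms else "tap"
    speed = distance / max(duration_ms, 1.0)  # pixels per millisecond
    if speed < min_swipe_speed:
        return "drag"  # moved, but too slowly to count as a flick
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"
```

For example, `classify_touch(100, 100, 300, 110, 200)` covers about 200 px in 200 ms and comes back as `"swipe_right"`.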

Why Touch Still Matters in a Multimodal World

Many users first experience this through AR apps like AR Doodle on Android or Samsung’s AR Zone app on their phone.

Even in today’s multimodal apps, touch remains central. It’s fast, reliable, and requires no explanation. A user might use voice search to find something but still rely on touch to browse, scroll, or fine-tune actions.

That’s why UX design for touch still needs to be tight: responsive, forgiving, and intuitive.

Think of apps like photo editors, video reels, or design tools. Even entertainment apps like pic celebrity look-alike tools and prank apps rely heavily on precise touch interactions.

Without precise mobile touch input, they fall apart. Even in AR Zone apps or vision UI setups, users still reach for the screen when the other modes fall short.

The best app interfaces today use touch as part of a hybrid UI, blending it with voice, motion, and visual input. That’s where natural UI truly shines.

Touch in a Multimodal Context

In a multimodal app, touch complements other modes beautifully:

- Can’t talk? Touch.

- Vision blocked? Touch.

- Just prefer control? Touch.

You might say “Send message,” then tap to choose the recipient. That’s multimodal app interaction in its best form: fluid, flexible, and user-driven.

And as AI UI systems become more advanced, they can even learn when a user prefers touch input over voice control or visual control, adjusting the experience accordingly.

That’s smart interface design in action.
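Production systems may use trained models for this, but a simple heuristic shows the idea: keep a running score per input mode that favors recent choices. This hypothetical `ModePreference` class uses an exponential moving average rather than any particular AI technique; `alpha`, which controls how quickly old habits fade, is an assumed value.

```python
class ModePreference:
    """Tracks which input mode a user favors, weighting recent choices more."""

    def __init__(self, modes: list[str], alpha: float = 0.2) -> None:
        self.alpha = alpha
        start = 1.0 / len(modes)
        self.scores = {m: start for m in modes}  # scores always sum to 1

    def record(self, used: str) -> None:
        # Nudge the used mode toward 1 and every other mode toward 0.
        for m in self.scores:
            target = 1.0 if m == used else 0.0
            self.scores[m] += self.alpha * (target - self.scores[m])

    def preferred(self) -> str:
        return max(self.scores, key=self.scores.get)
```

Because every update moves the scores toward the latest choice, a user who switches from touch to voice will see the app adapt after a handful of interactions instead of being locked in by old history.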

Designing for Modern Touch UX

When building for touch in a mobile UI, here are a few key things to keep in mind:

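- Use adequate spacing and big enough hit targets. Not everyone has tiny fingers: Material Design recommends touch targets of at least 48 × 48 dp, and Apple’s Human Interface Guidelines at least 44 × 44 points.

- Build UI animations that give feedback without slowing the experience.

- Don’t rely on hover or small gestures; users need clarity and speed.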

- Think about motion input and how it blends into your overall design system.

- Make it easy to recover from mistakes. App feedback should be instant and helpful.
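Hit-target guidelines are written in density-independent pixels (dp), so checking them means converting to physical pixels first. On Android, 1 dp equals 1 px at the 160 dpi baseline, so px = dp × (dpi / 160). This small Python sketch checks a button against Material Design’s 48 dp minimum; the function names are our own, for illustration.

```python
ANDROID_MIN_DP = 48  # Material Design minimum touch-target size
BASELINE_DPI = 160   # Android baseline density: 1 dp == 1 px at 160 dpi


def dp_to_px(dp: float, screen_dpi: float) -> float:
    """Convert density-independent pixels to physical pixels."""
    return dp * screen_dpi / BASELINE_DPI


def meets_touch_target(width_px: float, height_px: float, screen_dpi: float,
                       min_dp: float = ANDROID_MIN_DP) -> bool:
    """True if a target is at least min_dp x min_dp on this screen."""
    min_px = dp_to_px(min_dp, screen_dpi)
    return width_px >= min_px and height_px >= min_px
```

On a 480 dpi screen, `dp_to_px(48, 480)` is 144.0, so a 120 × 144 px button falls short of the guideline even though it may look large in a mockup.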

Touch should feel intuitive, not frustrating.

That’s the heart of intuitive UI design: giving users what they expect, when they expect it, with no guesswork. In a world of smart input, simple touch remains one of the most reliable modes.

Touch Meets AR, Vision, and Beyond

As apps become more immersive with AR UI and VR trends, touch gets even more interesting. In many AR Zone app experiences, users still rely on the screen to anchor content, rotate objects, or confirm actions. It’s the bridge between digital and physical.

Combine that with camera input, visual apps, and AI gestures, and you get something powerful: apps that feel alive.

We’re seeing this already in mobile UX design that blends touch input with vision tech, voice UX, and gesture app tools.

The result? More interactive UIs, more freedom, and more control.

Touch is no longer just “the default.”

It’s one of many inputs, and it still leads the way when it comes to mobile interactive experiences.

- Vision Input: When Your Camera Becomes the Controller

What if your app could see what you see?

That’s exactly what vision input brings to the table. It’s the third, and often most futuristic, pillar of multimodal apps, right alongside touch and voice.

With vision, your smart camera becomes more than a lens. It becomes an intelligent sensor. It reads gestures, tracks movement, recognizes faces, and even scans your surroundings. And this transforms how users experience your app.

We’re not just pointing and shooting anymore. We’re interacting with our eyes, faces, and full-body motion.

What Is Vision Input, Exactly?

Vision tech uses the device’s camera (and sometimes depth sensors) to understand the physical world. It turns camera input into meaningful actions, all without the need for buttons or voice.

You’ve probably seen it already:

- Face unlock? That’s facial input. And if you’ve ever used Samsung’s AR Zone on Android, you’ve already experienced a vision-based multimodal interface.

- Wave to dismiss a call? That’s gesture control.

- Move your phone to reveal 3D content? That’s motion input and AR UI in action.

In apps, this means you can:

- Scan a document or product with visual input.

- Use camera UX to navigate indoor spaces.

- Control a screen just by looking at it (visual control).

It sounds advanced, but it’s quickly becoming standard across UI trends, especially in interactive UI design.

Where Vision Input Shines

Vision input is incredibly useful when:

- Hands are busy.

- Environments are too noisy for voice input.

- Precision is key.

- You want a more immersive UI.

In AR Zone apps, users often use mobile gestures or facial expressions to interact. That’s vision-based app interaction. It’s playful, responsive, and feels almost magical.

This technology powers everything from object identifier app solutions to creative scratch multimedia projects, the kind of intuitive app experience that today’s users crave.

Vision also empowers screenless UI and natural UI systems. It allows devices to read subtle user cues, like direction of gaze or nods, and respond accordingly.

And when paired with AI input, the camera gets smarter, learning behavior and predicting needs.

Designing for Vision UX

If you’re thinking about adding vision UI to your app design, here’s what to consider:

- Keep it lightweight. Users shouldn’t need special hardware.

- Provide clear cues about what the app sees and what it’s doing.

- Always include fallback options (like touch input or voice control).

- Be transparent about data usage. Vision input deals with sensitive info.

Also, blend it with other modes. A user might start by scanning an object (vision), then say “add to cart” (voice), and finish with a tap to checkout (touch). That’s a perfect hybrid UI experience.
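A flow like “scan, say add to cart, tap to check out” can be modeled as a small state machine where each step accepts events from more than one input mode. In this Python sketch, the step names, event strings, and `ShoppingFlow` class are hypothetical; the point is that every step after scanning accepts both voice and touch, so no single mode is a dead end.

```python
from enum import Enum, auto


class Step(Enum):
    SCANNING = auto()
    ITEM_FOUND = auto()
    IN_CART = auto()
    CHECKED_OUT = auto()


# (current step, modality, event) -> next step. Event names are illustrative.
TRANSITIONS = {
    (Step.SCANNING, "vision", "object_recognized"): Step.ITEM_FOUND,
    (Step.ITEM_FOUND, "voice", "add to cart"): Step.IN_CART,
    (Step.ITEM_FOUND, "touch", "add_to_cart_button"): Step.IN_CART,
    (Step.IN_CART, "touch", "checkout_button"): Step.CHECKED_OUT,
    (Step.IN_CART, "voice", "check out"): Step.CHECKED_OUT,
}


class ShoppingFlow:
    def __init__(self) -> None:
        self.step = Step.SCANNING

    def handle(self, modality: str, event: str) -> bool:
        """Advance if the event is valid at this step; ignore it otherwise."""
        nxt = TRANSITIONS.get((self.step, modality, event))
        if nxt is None:
            return False
        self.step = nxt
        return True
```

Keeping the transitions in one table makes it easy to add another mode later (say, a gesture to add to cart) without touching the flow logic.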

Use Cases Already in Play

Vision input is already powering:

- Gesture apps that control media with hand waves.

- Visual apps that recognize objects, landmarks, or even moods. Object identification apps and celebrity look-alike apps show how vision tech makes everyday tasks more engaging.

- AI gestures that trigger commands automatically.

- Smart app interfaces that react to eye movement or facial expressions.

- Mobile interaction tools in AR learning or gaming apps.

It’s also crucial in inclusive design, helping users with limited mobility navigate apps with just a glance or head tilt. That’s real impact.

And it’s only growing. As more multimodal tools emerge, camera UX will become a key player in how people engage with apps, especially in mobile UX and next-gen UI systems.

Vision input isn’t about flashy features.

It’s about making apps more human, understanding users without needing a word or a tap.

In a true multimodal UX, your eyes are part of the interface. And your phone doesn’t just listen or respond; it sees.

Unified Inputs: Where Voice, Touch & Vision Meet

Here’s the real magic: it’s not voice or touch or vision. It’s all of them, working in harmony.

That’s what makes a truly intelligent, user-first multimodal app. It lets people interact however they feel most comfortable, with voice, gestures, glances, or taps. No restrictions. No learning curve.

Whether it’s anonymous messaging platforms or couple relationship app tools, multimodal design makes every interaction feel natural.

It’s not about showing off technology. It’s about creating smoother, more intuitive app experiences.

Why Combine Inputs?

Because users live dynamic lives. They’re cooking, walking, multitasking, rushing, and relaxing. Sometimes touch input makes sense. Other times, it’s voice control or gesture control.

In these real-world scenarios, apps that support multimodal UX feel less like tools and more like companions.

- Say “Start workout” while tying your shoes. (Voice)

- Swipe to skip a track. (Touch)

- Nod to pause. (Vision)

This kind of fluid app interaction is what defines the future of smart interfaces, powered by multimodal tools, grounded in natural UI principles.
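How might “nod to pause” actually work? One approach is to watch the head’s pitch angle over a short run of camera frames and look for a dip followed by a return. The sketch below assumes some face-tracking model already estimates pitch in degrees per frame (positive means looking up); it isn’t tied to any specific library, and the 10-degree threshold is an illustrative assumption.

```python
def detect_nod(pitch_deg: list[float], threshold: float = 10.0) -> bool:
    """Detect a down-then-up head movement in a window of pitch samples.

    A nod is counted when pitch drops at least `threshold` degrees below
    the first sample, then comes back within threshold / 2 of it.
    """
    if len(pitch_deg) < 3:
        return False
    baseline = pitch_deg[0]
    went_down = False
    for angle in pitch_deg[1:]:
        if not went_down and baseline - angle >= threshold:
            went_down = True  # head dipped far enough
        elif went_down and baseline - angle <= threshold / 2:
            return True  # and came back up: that's a nod
    return False
```

Requiring the return movement, not just the dip, helps avoid pausing playback every time the user simply looks down at their shoes.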

Designing for Hybrid Interactions

Building this kind of hybrid UI takes intention. It’s not just about adding inputs; it’s about making them work together.

For designers, this means:

- Mapping real-world use cases to input modes.

- Offering seamless transitions between voice app, mobile touch, and vision UI.

- Providing instant, helpful app feedback for every action.

- Using AI input to learn and adapt based on user behavior.
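Mapping real-world situations to input modes can start as a plain scoring rule long before any machine learning is involved. This Python sketch ranks touch, voice, and vision for a given context; the `Context` fields and all scoring weights are illustrative assumptions, not research-backed values.

```python
from dataclasses import dataclass


@dataclass
class Context:
    hands_busy: bool = False        # cooking, carrying groceries
    noisy: bool = False             # street, gym, crowded room
    eyes_busy: bool = False         # driving, jogging
    camera_available: bool = True   # permission granted, lens unobstructed


def rank_modes(ctx: Context) -> list[str]:
    """Order input modes from most to least suitable for the context."""
    scores = {"touch": 2, "voice": 1, "vision": 0}  # default: touch first
    if ctx.hands_busy:
        scores["touch"] -= 3
        scores["voice"] += 2
        scores["vision"] += 1
    if ctx.noisy:
        scores["voice"] -= 3
    if ctx.eyes_busy:
        scores["touch"] -= 2
        scores["vision"] -= 2
        scores["voice"] += 2
    ranked = sorted(scores, key=lambda m: scores[m], reverse=True)
    if not ctx.camera_available:
        ranked.remove("vision")
    return ranked
```

With busy hands in a noisy kitchen, for example, `rank_modes(Context(hands_busy=True, noisy=True))` puts vision first, then voice, then touch, which matches the idea that gestures or visuals can take over when sound won’t work.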

An immersive UI doesn’t overwhelm; it adapts. It feels alive, responsive, and tailored.

And when done right, it creates interactive UIs that users not only understand but also enjoy.

Real-World Examples

Multimodal design is already shaping the way we use mobile:

- Navigation apps that use visual UX, speech app commands, and screen taps in tandem.

- Shopping apps that let users search by image (vision) with the same technology behind an app that identifies items in a picture, confirm by tap (touch), and check out by voice.

- Utility apps, like a currency rate app for Android, that combine voice queries with visual displays.

- AR UI tools that blend camera scans, verbal instructions, and swipe gestures.

We’re seeing this across UI trends, especially in areas like:

- Mobile gestures

- Smart input systems

- Hands-free apps

- Screenless UI innovations

- And AI gestures that react before you even touch the screen.

And as visual control, audio input, and camera UX improve, we’ll only see richer combinations ahead.

The End Goal: Natural, Human-Centric Design

At its core, multimodal UX isn’t about cramming in features. It’s about designing mobile experiences that feel… human.

- Fast when you need speed.

- Accessible when you need ease.

- Adaptive when you need flexibility.

By embracing mobile interaction patterns and building next-gen UI systems that support every kind of input (touch, voice, and vision), we meet users where they are.

And that’s how you turn good apps into unforgettable ones.

Designing a Seamless Multimodal App Experience

Creating a great multimodal app isn’t only about adding features; it’s about designing interactions that feel smooth, natural, and responsive.

A seamless mobile UX lets users switch between touch input, voice control, and visual input without friction.

Each input should feel intuitive, backed by clear app feedback and thoughtful UX design.

To get there, developers are embracing composable architecture in mobile apps, allowing modular, flexible systems that adapt as user behavior changes.

This approach benefits everything from on-demand technologies to dynamic user interface design implementations.

This structure supports real-time context switching between inputs, improving performance and user satisfaction.
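In a composable setup, each input mode lives in its own module behind a shared interface, so adding or removing one doesn’t ripple through the app. Here’s a minimal Python sketch of that pattern; the `InputEvent` type, the `InputRegistry` class, and the lambda interpreters in the usage note are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class InputEvent:
    modality: str  # "voice", "touch", "vision", ...
    payload: str   # raw transcript, control ID, detected gesture, etc.


# Each module turns a raw event into an app-level intent, or None.
Interpreter = Callable[[InputEvent], Optional[str]]


class InputRegistry:
    """Routes events to pluggable per-modality interpreters."""

    def __init__(self) -> None:
        self._modules: dict[str, Interpreter] = {}

    def register(self, modality: str, interpreter: Interpreter) -> None:
        self._modules[modality] = interpreter

    def unregister(self, modality: str) -> None:
        self._modules.pop(modality, None)

    def dispatch(self, event: InputEvent) -> Optional[str]:
        interpreter = self._modules.get(event.modality)
        return interpreter(event) if interpreter else None
```

Usage: register `lambda e: "pause" if "pause" in e.payload.lower() else None` for `"voice"` and `lambda e: "pause" if e.payload == "pause_button" else None` for `"touch"`; both then produce the same `"pause"` intent, and the rest of the app never needs to know which mode the user chose.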

And with real-time edge AI, apps can process camera input, audio input, or gesture data locally, reducing lag and keeping interactions fluid, even offline.

Multimodal design also plays a key role in the rise of super apps: platforms that handle messaging, payments, shopping, and more. These apps demand smart, adaptive interfaces, whether they’re couples counseling app platforms that need sensitive interaction design or object detection app tools that require precision.

To succeed, designers must blend modes like mobile touch, AI gestures, and vision UI into one coherent, interactive UI, giving users control, comfort, and confidence.

Benefits of Multimodal UI in Mobile Apps

Why go multimodal?

Because users don’t interact with their phones in just one way.

They swipe, speak, glance, and gesture. A multimodal UI meets them wherever they are, creating smoother, smarter, and more enjoyable app experiences.

Here are some standout benefits:

- More Natural Interactions

Multimodal design aligns with how we naturally communicate: a combination of voice input, touch input, and even facial input or visual control. It makes an app feel like an extension of the user, not just a device.

- Better Accessibility

Whether it’s a hands-full situation or a user with mobility or vision challenges, multimodal tools open your mobile UI to more people. Voice, gestures, and camera-driven interactions enable everything from phone augmented reality experiences to smartwatch apps, including the best smartwatch apps with multimodal features.

- Increased Engagement

Users are more likely to stick around when your app feels easy and responsive. A multimodal approach increases engagement by offering flexible routes through the interface, from mobile gestures to voice search and camera input.

- Smarter, Context-Aware Experiences

With real-time input from multiple sources (such as audio input or vision tech) and AI UI to interpret it, your app can react to context. Say you’re jogging: your app might shift from touch to voice UX for better control. That’s smart design, and it’s why Bluetooth watch app developers and teams focused on wearable application development prioritize multimodal interfaces.

- Future-Proofing Your App

UI trends are moving fast toward screenless UI, immersive UI, and natural UI experiences. By embracing multimodal app strategies now, you’re building a foundation for next-gen UI and adaptable tech like AR UI and smart interfaces.

Multimodal design is all about making your app interface more human: responsive, versatile, and ready for anything.

Tools & Technologies for Building Multimodal Apps

Building a powerful multimodal app takes more than good ideas; it takes the right stack of tools, frameworks, and smart integrations.

To support rich app interaction across touch input, voice control, and visual input, developers are turning to a mix of native features, AI engines, and cross-platform kits.

Voice Input & Speech Tech

- Google Speech-to-Text, Apple SiriKit, and Amazon Alexa SDK have made it easy to add voice UX to mobile apps.

- Conversational frameworks such as Dialogflow and Rasa handle intent recognition and dialogue management, a key layer in voice app development for features similar to Evernote voice recognition.

- These platforms are great for building hands-free apps that work in noisy or busy environments.

Touch & Gesture Frameworks

- For mobile touch and gesture control, frameworks such as Flutter, React Native, and SwiftUI offer built-in gesture APIs.

- Libraries such as Hammer.js add touch gesture recognition (tap, swipe, pinch, rotate) to web-based app interfaces.

- Add UI animation to make the interactive UI feel smooth and alive.

Vision & Camera Tech

- Camera and vision frameworks support everything from facial input to object recognition, powering picture recognition features and object identifier app functionality.

- Combined with AI input, these tools bring intelligence to your visual UX.

AI & Edge Processing

- For real-time multimodal processing, TensorFlow Lite, MediaPipe, and Core ML enable AI gestures, smart prediction, and visual apps at the edge.

- Real-time edge AI helps reduce latency and preserve privacy, both critical in smart interface design.

Modular & Scalable Architecture

- A composable architecture in mobile apps allows you to add or swap input methods without breaking the entire system.

- Tools like Jetpack Compose and modular Flutter packages support scalable and flexible multimodal UX development.

Integration with Super Apps

- As super apps evolve, developers are building hybrid UI layers that combine all modalities (voice, touch, and vision) into seamless, contextual flows.

- These experiences define the future of next-gen UI.

Building a multimodal UI isn’t just about layering inputs; it’s about choosing the right tools to deliver a fluid, human-centered experience.

With the right software development stack, your app design becomes more than functional. It becomes intuitive, responsive, and ready for the future of mobile interaction.

Real-World Examples & Case Studies

Multimodal apps aren’t just theory; they’re already changing how we interact with mobile technology in everyday life.

From banking to fitness, navigation to entertainment, real apps are blending voice, touch, and vision tech to create smarter, more natural user experiences.

Let’s look at how some leading products are putting multimodal UX into action.

Google Maps

Google Maps is a textbook example of a multimodal app.

Similar innovations are seen in augmented reality travel and tourism, showcasing the benefits of virtual reality in business.

- You touch to explore the map.

- You search by voice.

- You follow directions visually, including AR UI with Live View, which blends camera input with location overlays.

It adapts to your context (walking, driving, or biking), creating an intuitive app that feels incredibly responsive.

We’re also seeing Uber alternatives integrate multimodal UX, allowing users to book rides via voice control, confirm pickups via touch screen, and track vehicles using visual input.

Alexa & Google Assistant

These voice apps were designed for hands-free app interaction, but over time, they’ve become more multimodal.

- You can now tap, swipe, or even gesture on smart displays. These platforms enable video chat functionality similar to FaceTime-type apps, but with added multimodal capabilities.

- Voice feedback is paired with visual UX to clarify what’s happening.

- This shift shows the rise of hybrid UI systems, built for different types of users and environments.

They reflect where AI UI and natural UI design are headed: flexible, contextual, and adaptive.

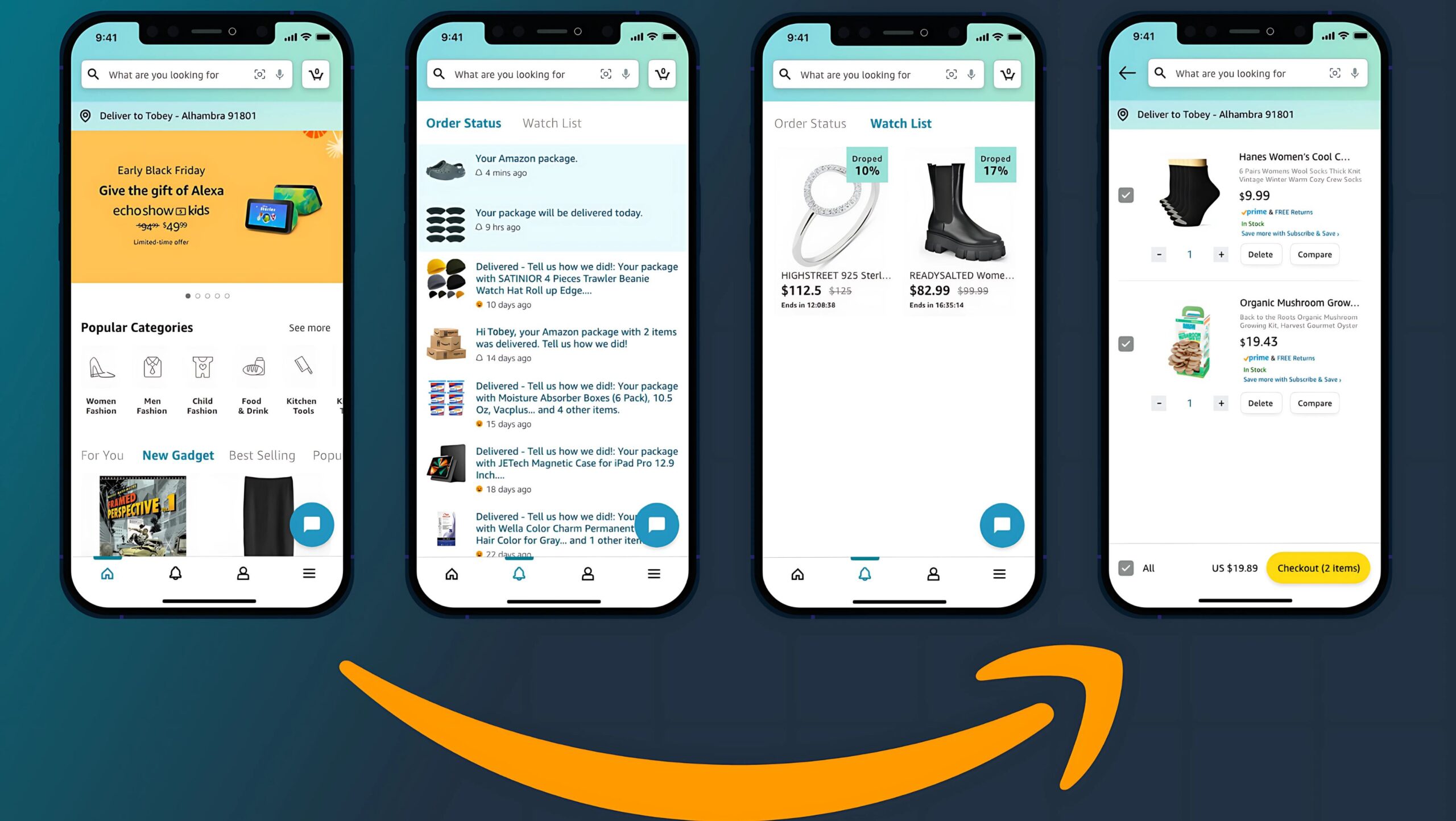

Amazon App

The Amazon shopping app uses:

- Touch screen for browsing and tapping.

- Voice UX for searching items on the go.

- A smart camera for barcode scanning, visual search, and even product matching via vision UI.

It’s not just convenient, it’s smart. The app supports real-time AI input that anticipates what the user needs next.

This kind of smart interface reduces friction and boosts app engagement.

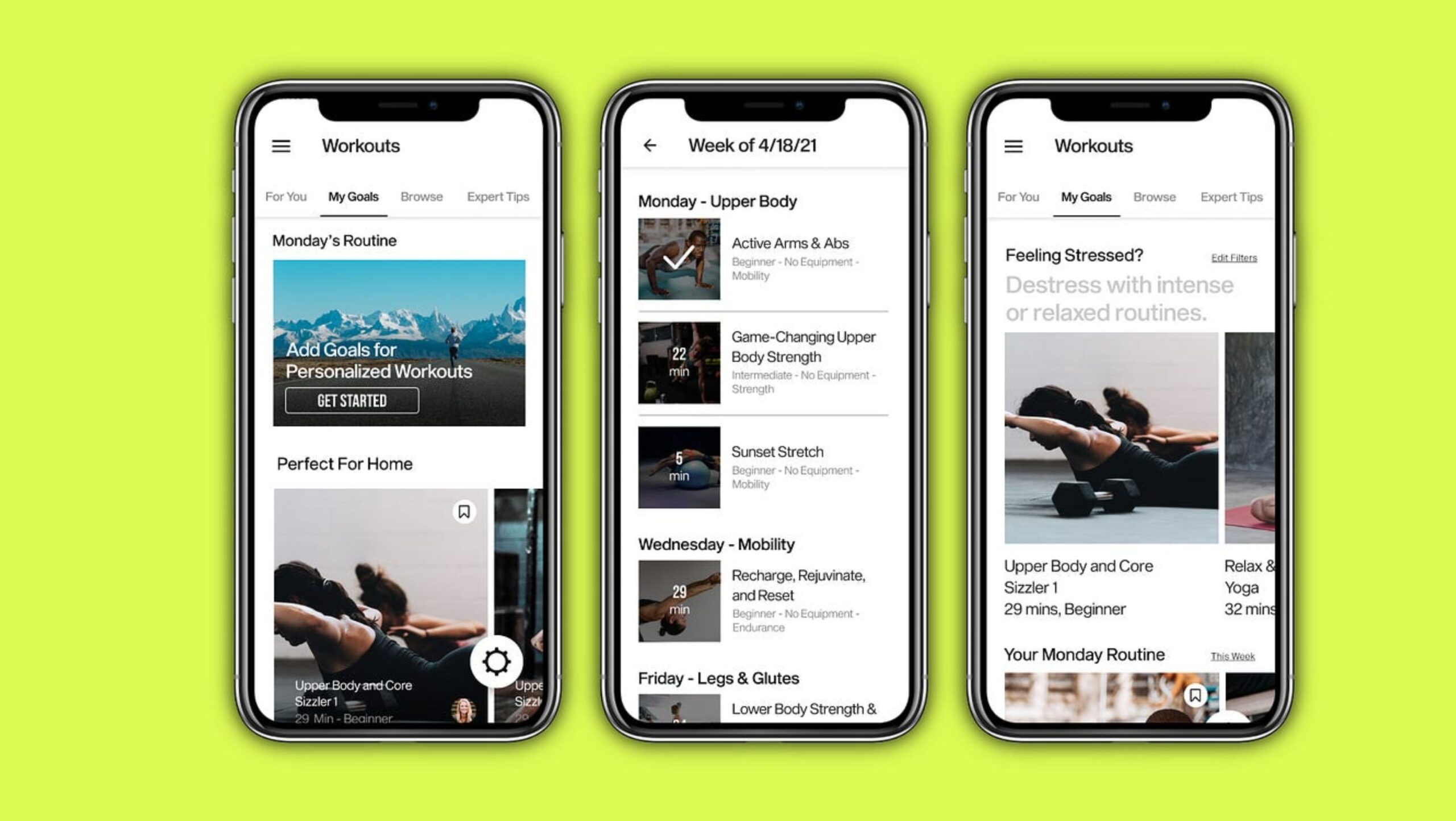

Nike Training Club

In the Nike Training Club app:

- You interact with workouts using touch input and voice control.

- The app provides visual guidance via instructional videos.

- It adapts to your flow, whether you’re hands-free mid-workout or scrolling through routines.

It’s a great example of an interactive UI that responds to your needs in real time, even using AI gestures to track progress.

Samsung AR Zone App

The AR Zone app by Samsung pushes the boundaries of vision tech:

- It uses facial input, camera UX, and gesture control to create playful, immersive experiences. If you’ve ever asked what the AR Doodle app on Android is, or what the AR Zone app on your phone does, this is the technology behind it.

- As AR and VR trends continue, this kind of visual app shows how far screenless UI and motion input can go.

It’s a fun, forward-looking example of how multimodal tools can build the next-gen UI.

Apps like Snapchat have made smart camera features and AR UI mainstream. Using camera input for facial recognition, filters, or gesture-based actions has inspired a wave of visual apps that combine vision tech with playful, intuitive interfaces.

The rise of multimodal AI examples in consumer apps is evident everywhere, from prank apps that use gesture recognition for comedic effect to more serious apps for couples’ questions that combine voice and touch for relationship building.

Understanding what speech recognition is in this context means seeing it as one part of a larger, more intuitive system. Even niche applications like anonymous messaging services and professional couples counseling app platforms benefit from multimodal design.

The same technology that powers fun pic celebrity look-alike apps also enables critical object detection app features in security and accessibility tools.

As mobile application programming for iOS evolves to support these features, and developers of currency rate apps for Android add voice queries to their visual interfaces, it’s clear that a speech recognition system today is far more sophisticated than simple voice-to-text conversion.

Partner with a Top App Development Company

Building a successful multimodal app is not just about having the right idea; it’s about executing it with the right team.

Every part of the app, from the intuitive interface to the advanced AI UI, needs to work together across voice, touch, and vision.

That’s where expert partners come in.

Collaborating with a top-tier app development company ensures your product is built with the latest tech, whether it’s gesture control, camera input, or real-time edge AI. They bring the experience, architecture, and insight to scale your vision from prototype to production.

Why Boolean Inc.?

Boolean Inc. is one such trusted name. Known for delivering high-performing apps with smart interface design and immersive UI, their team specializes in crafting apps that truly feel human.

From designing TikTok-type apps to powering visual apps with AR features, Boolean’s approach is grounded in research, innovation, and clean, user-first development.

They understand UI trends, cross-platform integration, and the demand for smooth app interaction in today’s competitive market.

If you’re looking to create a next-gen UI that combines AI input, touch input, and vision tech, a partner like Boolean Inc. can be the edge you need.

Conclusion

The way we interact with mobile apps is evolving fast. Users expect more than buttons and menus. They want to tap, talk, gesture, and be understood.

A well-designed multimodal app brings all of that together. It creates a fluid, human experience powered by voice control, touch input, and vision tech, and backed by smart design, real-time AI, and intuitive flow.

Whether you’re building apps like Snapchat or aiming to launch the next big super app, embracing multimodal UX isn’t just a trend; it’s the standard.

Start designing smarter. Start designing for real life.

FAQs

- What is a multimodal app?

A multimodal app allows users to interact using multiple input methods, like touch input, voice control, and vision tech, often in combination, for a more natural and intuitive experience.

- Why is multimodal UX important in mobile app design?

It enhances mobile UX by adapting to users’ context. Whether users are walking, driving, or multitasking, they can choose how to interact by touch, speech, or gesture.

- Which industries benefit most from multimodal UI?

E-commerce, health, fitness, travel, and apps like TikTok or Uber alternatives benefit greatly, offering more flexibility and smart interface options to diverse user bases.

- How does AI enhance multimodal experiences?

AI powers facial input, voice recognition, and gesture control, often through real-time edge AI, helping apps respond contextually and intelligently to multiple input modes.

- Can small startups build multimodal apps too?

Absolutely! With tools like Flutter, ARCore, and support from top partners like Boolean Inc., even startups can build powerful, intuitive apps with cutting-edge multimodal tools.