Introduction

AI used to feel distant, something reserved for labs, massive servers, or big tech companies. That’s not the case anymore.

Today, it’s in your pocket.

From face unlock to voice commands to real-time health tracking, AI is becoming part of everyday mobile experiences. It’s not flashy anymore. It’s expected.

And it’s growing fast.

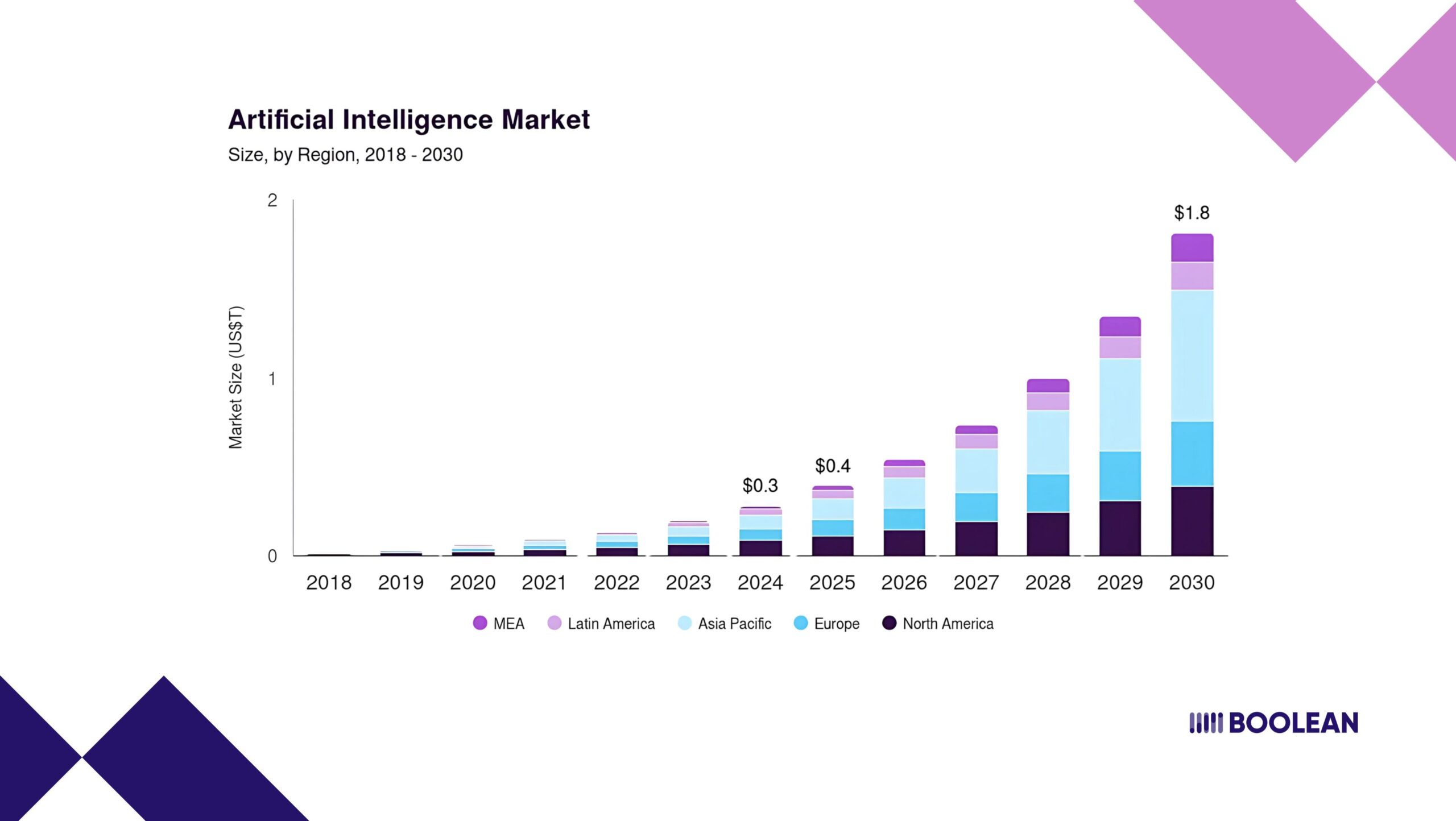

You may not realize just how fast AI is growing, or how many companies are already taking advantage of it.

The global artificial intelligence market was valued at $279.22 billion in 2024, and that number is projected to hit $1,811.75 billion by 2030, roughly a 6.5-fold increase in six years. That’s not just growth, that’s a full-on shift in how software gets built.

What’s driving it? A big part of the story is on-device machine learning.

Instead of sending everything to the cloud, apps are starting to run AI models directly on the phone or tablet. This means faster responses, better privacy, and apps that still work when there is no signal.

But here’s the thing: none of that works without the right tools.

As a mobile developer or product builder, you need something reliable. Something that fits your platform. Something that makes your life easier, not harder. That’s where AI frameworks come into play.

In 2025, some names stand out:

ONNX, TensorFlow Lite, and CoreML are leading the way. Each brings something different to the table, and depending on your goals, one may be a better fit than the others.

This guide walks you through the best options out there. Not in theory. In plain terms. With context, trade-offs, and real value.

Whether you’re building for Android, iOS, or both, if you care about performance, privacy, and future-proofing your app, this is for you.

Let’s get into the tools that actually make mobile AI work.

What Is an AI Framework (For Mobile)?

Think of an AI framework as your set of building blocks.

If you’re working on an app that needs to recognize faces, understand speech, suggest smart replies, or even filter photos, you’re probably using AI models.

But to actually get those models working inside a mobile app, especially on the device, you need something to handle the behind-the-scenes work.

That’s what an AI framework does.

It helps you run deep learning models, manage neural networks, and make everything efficient enough to run right on a phone. This is what people mean by on-device ML (short for on-device machine learning).

It means the intelligence lives inside the app, not in the cloud.

No server round trip. No network lag. No user data sent somewhere else.

Just on-device AI, working quietly in the background. Fast. Private. Reliable.

Whether you’re building for Android or iOS, there’s a framework for that. TensorFlow Lite works great for Android apps. CoreML is built right into the Apple ecosystem.

And if you’re aiming to stay flexible and support both platforms, ONNX is a strong, open option.

These aren’t just techy add-ons. They’re essential if you’re building anything in mobile AI today, especially with the rise of Edge AI, where smart decisions happen close to the user, not in some remote server farm.

And the best part? These AI tools save time. You don’t have to reinvent how mobile machine learning works.

The right ML SDK takes care of the hard stuff, like optimizing models for mobile hardware, reducing file sizes, and making sure your app doesn’t eat battery like crazy.

So yeah, at a glance, it might sound technical. But at the core, it’s pretty simple:

AI frameworks help you bring intelligent features to mobile apps faster, more safely, and without needing to code everything from scratch.

What Makes a Mobile AI Framework “Best”?

Not all AI frameworks are created equal, especially when you’re building for mobile.

What works well in a cloud server won’t always cut it on a phone. Mobile apps have different needs. You’ve got less power, tighter memory, battery limits, and users who expect everything to run instantly.

So, what actually makes a mobile AI framework stand out?

Here’s what developers usually look for:

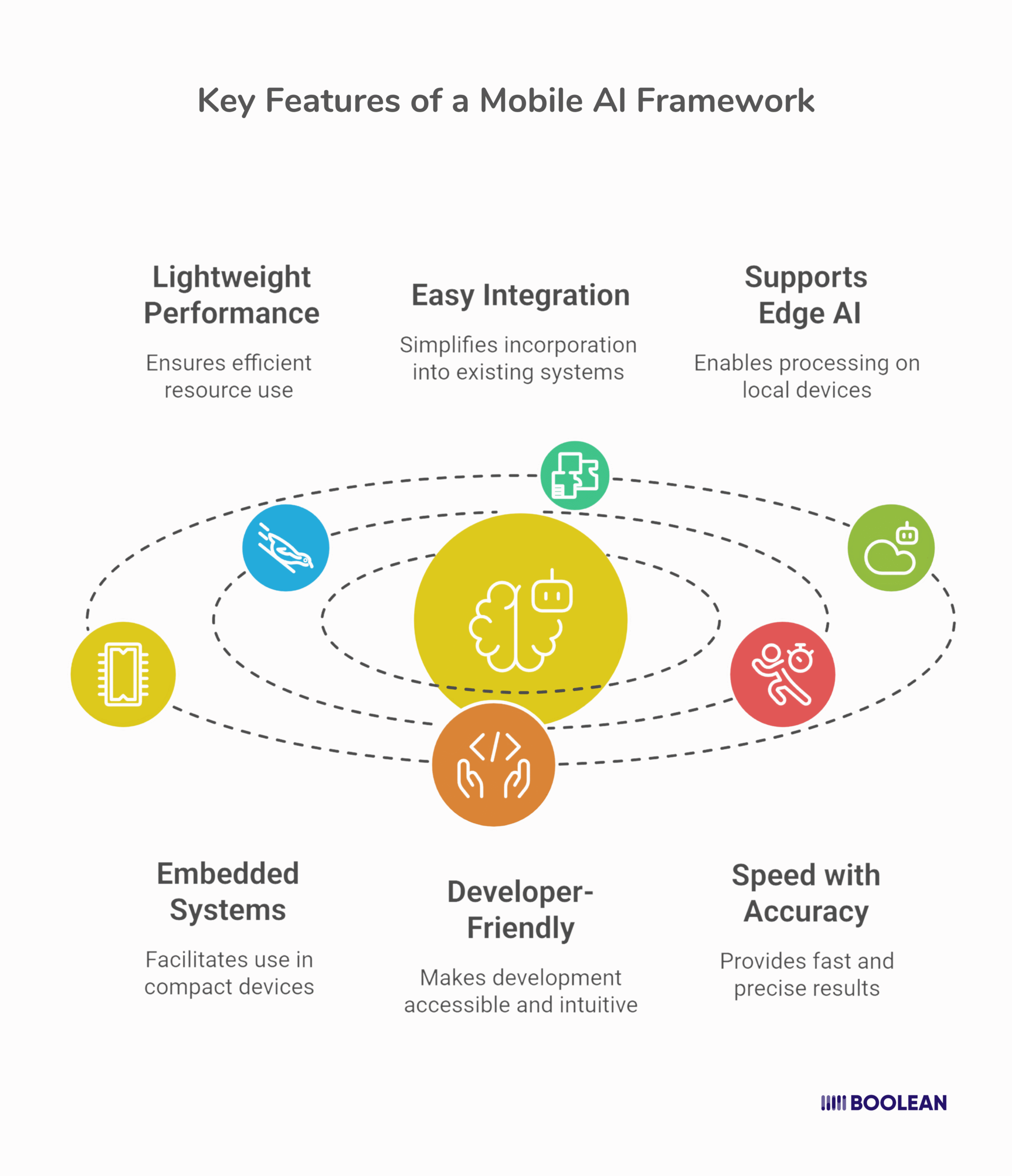

- Lightweight performance

You don’t want your app bloated with a massive library just to run a single AI model. The best tools are lightweight AI frameworks; they keep things lean, fast, and efficient.

Whether you’re dealing with image recognition, audio, or predictive tasks, smaller frameworks make for smoother apps. Less lag, less drain, better UX.

- Cross-platform support

Building for both iOS and Android? You probably don’t want to maintain two completely separate model pipelines. That’s why a cross-platform ML framework is a big win.

Tools like ONNX and other cross-platform on-device AI frameworks let you export a model once and deploy it on both platforms. That’s a real time-saver for teams trying to ship faster.

- Supports Edge AI (Not Just the Cloud)

Mobile apps are moving toward real-time Edge AI, which just means the smart stuff happens right on the device.

The best mobile ML frameworks for iOS and Android are built with that in mind. They support on-device inference, work offline, and prioritize speed and privacy. That’s what users expect today.

- It Plays Nice with Embedded Systems

For some apps, especially those used in wearables, IoT devices, or medical tools, full mobile OS support isn’t enough.

You need something that works in tighter environments. Embedded AI frameworks are made for those cases. They bring intelligence to devices with limited resources, without cutting corners.

- Developer-Friendly

This might sound obvious, but it matters: the best tools don’t fight you.

Good mobile ML frameworks come with clear docs, active communities, and easy integration. Whether you’re adding voice detection to a health app or using a camera feed for live classification, setup shouldn’t take weeks.

- It Balances Speed with Accuracy

Fast is great, but only if it still works well.

The top lightweight ML frameworks for mobile apps offer quantization, hardware acceleration, and tools to help you get the right balance of performance and precision. No one wants an AI that’s fast but wrong.
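To make the speed-versus-accuracy trade-off concrete, here is a minimal sketch of what quantization does to a handful of weights. It is plain Python for illustration only, not the API of any framework mentioned here; the function names `quantize_int8` and `dequantize_int8` and the sample weights are made up for this example.

```python
# Illustrative only: affine (asymmetric) int8 quantization in plain Python.
# The helper names and sample weights are hypothetical.

def quantize_int8(values):
    """Map floats to int8 codes using one shared scale and zero point."""
    lo = min(min(values), 0.0)  # keep 0.0 exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:            # all values are zero; any scale works
        scale = 1.0
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-0.82, -0.11, 0.0, 0.27, 0.64, 1.3]  # made-up sample weights
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 codes:", codes)
print("max round-trip error:", round(max_error, 4))
print("bytes as float32:", 4 * len(weights), "| bytes as int8:", len(codes))
```

Each weight shrinks from four bytes to one, and the price is a small rounding error. Real toolchains apply the same idea per tensor or per channel and then check accuracy on a validation set, which is where the "fast but wrong" risk gets caught.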

So, what’s “best” really depends on what you’re building.

But in general, the best AI frameworks for mobile are:

- Lightweight

- Fast

- Privacy-first

- Easy to use

- Compatible across platforms

- Optimized for real-world mobile constraints

Whether you’re shipping a personal assistant, a fitness tracker, or an educational tool, picking the right framework sets the tone for your entire app development process.

Choose the one that fits your app, not just the most popular one.

Top Mobile AI Frameworks in 2025

If you’re building AI-powered apps for phones or tablets, the framework you choose can make or break your project.

There are now more options than ever, each with its own strengths. Some are better for iOS. Some are built with Android in mind. Others aim for flexibility, giving you one setup that works across both.

Here’s the good news: mobile AI frameworks have come a long way. They’re faster, lighter, and easier to use than they were just a few years ago.

Whether you’re creating something for smart cameras, real-time translation, or fitness tracking, choosing the right tool matters.

Let’s look at some of the best mobile ML frameworks for iOS and Android that developers are using in 2025.

These frameworks stand out for performance, compatibility, and real-world results:

- TensorFlow Lite

If you’re developing for Android, TensorFlow Lite is probably the first name that comes up. And for good reason.

This lightweight version of TensorFlow (which Google began rebranding as LiteRT in 2024) is made for on-device ML, which means your models run directly on the phone, no server required.

Why developers use it:

- Built by Google and deeply integrated into Android development

- Optimized for running neural networks on phones and other edge devices

- Supports hardware acceleration (NNAPI, GPU, Hexagon DSP)

- Handles quantization easily, so your models stay small and fast

- Comes with pre-trained models and conversion tools for custom ones

Real-world fit:

Apps that rely on camera input, gesture recognition, speech processing, or predictive typing.

Bonus: It also works on iOS, so if you’re going cross-platform, you’re not out of luck.

If you’re focused on Android, you might also want to explore the top AI tools for Android app development that pair well with frameworks like TensorFlow Lite.

- CoreML

Building for iPhone or iPad? CoreML is Apple’s native answer for on-device AI, and it’s incredibly polished.

It’s tightly integrated into the Apple ecosystem, which makes it super smooth to use, especially if you’re already working with Swift, Xcode, and iOS design tools.

Why developers love it:

- Blazing-fast performance on Apple Silicon (A-series and M-series chips)

- Supports on-device machine learning across model types, from neural networks to tree ensembles

- Seamless integration with Vision, Natural Language, and Sound Analysis

- Automatically spreads work across the CPU, GPU, and Neural Engine

- Strong privacy story: inference runs on the device, so user data doesn’t have to leave it

Real-world fit:

Smart photo filtering, live text detection, language translation, and voice-based UI. If your user base is mainly iPhone or iPad, CoreML is hard to beat.

AI chatbots continue to evolve, and many now run smoothly right on mobile. Explore the best AI chatbots in 2025 to see what’s possible.

- ONNX Runtime Mobile

Need a cross-platform ML framework that gives you flexibility? ONNX Runtime Mobile is for developers who don’t want to be tied to one ecosystem.

It lets you train your model in PyTorch or TensorFlow and then convert it to the ONNX format, giving you a single, unified format to deploy anywhere.

Why developers pick it:

- One model format for every target, so there is a single artifact to version and test

- Works across Android, iOS, and even embedded systems

- Great for teams using multiple tools in their ML pipeline

- Supports quantization and mobile acceleration

- Fast, portable, and not locked to any one vendor

Real-world fit:

Apps that are deployed across devices, especially in enterprise or B2B settings, or when you’re shipping to both stores.

Looking to integrate AI conversations into your app? Here’s how you can build ChatGPT-powered apps for business use, with the help of the right mobile AI framework.

- MediaPipe

If you’re building something that uses the camera, like filters, pose estimation, or gesture control, MediaPipe is worth a serious look.

It’s a framework developed by Google that’s focused on real-time perception, blending traditional computer vision with AI tools in a lightweight package.

Why developers love it:

- Pre-built pipelines for face detection, hand tracking, and object detection

- Supports on-device ML with low latency

- Works with TensorFlow Lite and can be extended

- Ideal for real-time, low-power scenarios

Real-world fit:

AR filters, fitness coaching, live visual effects, virtual makeup apps.

MediaPipe is often the go-to for AI-powered AR apps. You can see where this tech is headed in our look at the latest AR and VR trends in mobile apps.

- MNN (Mobile Neural Network)

Developed by Alibaba, MNN is known for being lean and super fast, especially when you’re running models on the edge.

It focuses heavily on embedded deployments, which makes it a go-to for IoT and smart devices beyond just phones.

Why developers use it:

- Highly optimized for ARM architecture

- Ultra-small binary size

- Low power consumption

- Works across Android, iOS, and Linux

- Supports multiple backends (OpenCL, Vulkan, Metal)

Real-world fit:

Wearables, smart cameras, embedded medical tools, anything where hardware is limited, but performance still matters.

- NCNN & TNN (by Tencent)

Tencent’s NCNN and TNN are great for developers building for Chinese markets or looking for something open-source and battle-tested.

Both frameworks are focused on speed, portability, and low overhead, and are built specifically for on-device inference on phones and other edge hardware.

Why developers choose these:

- Fast inference with small model sizes

- Easy to integrate into Android apps

- Optimized for the ARM CPUs found in Snapdragon and Kirin chips

- Lightweight and perfect for on-device AI tasks

Real-world fit:

Streaming apps, mobile games with AI elements, and real-time communication tools.

From coaching apps to motion tracking, AI is reshaping sports tech. Check out these real-life AI applications in sports to see how mobile frameworks are powering next-gen training tools.

These are some of the best mobile ML frameworks for iOS and Android in 2025, not because they check every box, but because they let developers build apps that feel fast, useful, and modern.

Whether you need lightweight ML frameworks for mobile apps, a powerful SDK, or an embedded AI framework that fits tight hardware constraints, there’s something here for your stack.

ONNX for Mobile: Flexibility Without Lock-In

Switching between tools, formats, and platforms can feel like a maze. Especially in mobile development, where what works on Android might need redoing for iOS. That’s where ONNX shines.

ONNX (short for Open Neural Network Exchange) gives developers something simple and rare: freedom.

With ONNX, you’re not tied to one tool or locked into a specific mobile ecosystem. You can train your model however you like (PyTorch, TensorFlow, even scikit-learn), export it to ONNX format, and run it on mobile with ONNX Runtime Mobile. Clean and straightforward.

Built for Cross-Platform Mobile AI

ONNX is made for flexibility. That’s why developers building cross-platform apps are turning to it more and more. It helps you take your trained models and run them across Android, iOS, and even embedded systems, all without rebuilding everything from scratch.

So if your app needs to work across devices or if your team uses different tools in the pipeline, ONNX cuts out a lot of the mess.

Some real strengths:

- Works with many training frameworks: PyTorch, TensorFlow, and more

- Outputs models that are optimized for mobile and on-device ML

- Keeps performance fast and memory use low

- Plays nicely with Edge AI setups, especially where low latency is key

ONNX Runtime Mobile: Lightweight and Fast

ONNX Runtime Mobile is the reduced-size build of ONNX Runtime, made for phones and smaller devices. It runs AI models directly on the device, with no cloud round trip.

Why does this matter?

- It keeps things private (no need to send data elsewhere)

- It keeps things fast (no waiting on server responses)

- It works offline, helpful in areas with poor connectivity
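One way to reason about the "fast" point is a per-frame time budget: a camera feature running at 30 frames per second has about 33 ms to produce each result. The sketch below compares two paths against that budget. Every timing in it is a made-up placeholder, and `frame_budget_ms` and `fits_budget` are hypothetical helper names, so treat it as a way of thinking rather than a benchmark.

```python
# Illustrative only: does an inference path fit a real-time frame budget?
# All timings are placeholders; measure on real devices and networks.

def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def fits_budget(latency_ms, fps):
    """True if a single inference finishes within one frame."""
    return latency_ms <= frame_budget_ms(fps)


on_device_ms = 18.0      # hypothetical: local inference only
cloud_ms = 80.0 + 12.0   # hypothetical: network round trip + server inference

for label, latency in [("on-device", on_device_ms), ("cloud", cloud_ms)]:
    verdict = "fits" if fits_budget(latency, fps=30) else "misses"
    print(f"{label}: {latency:.0f} ms {verdict} the "
          f"{frame_budget_ms(30):.1f} ms budget at 30 fps")
```

The cloud path also has no answer at all when the device is offline, which is the third bullet in the list above.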

If you’re working on mobile ML features that need to stay lightweight, this is a great option.

Why ONNX Stands Out

Here’s why many mobile devs are leaning into ONNX in 2025:

- It’s not platform-specific; the same model runs on Android, iOS, and beyond

- It helps avoid vendor lock-in

- It supports embedded and edge deployments, not just phones

- It lets you focus on building your model, not fighting with formats

- And it’s actively maintained with help from Microsoft and the open-source community

It’s a relief, honestly, especially if you’re working across multiple platforms or managing a team with varied ML workflows.

Real-Life Use Cases

ONNX is especially useful if:

- You’re building both Android and iOS apps and want one model for both

- You’re working with multiple AI tools (like PyTorch and TensorFlow)

- You’re shipping models to embedded systems or edge devices

- You care about performance but don’t want to constantly convert file formats

Whether you’re developing a health tracker, a voice assistant, or a camera-based app, ONNX can help keep your workflow smooth and your AI models portable.

CoreML for iOS: Optimized, Fast, and Private

If you’re building apps for iPhones or iPads, CoreML is likely your best bet. It’s Apple’s native AI framework built specifically for on-device machine learning.

What makes CoreML stand out? It’s fast, private, and deeply integrated with iOS. That means tasks like image recognition, text prediction, or voice analysis run smoothly, all right on the device. No cloud needed, which also makes it ideal for Edge AI scenarios.

CoreML works well with models from TensorFlow or PyTorch (using Apple’s conversion tools) and supports tight integration with things like the camera, Siri, and even ARKit.

What You Can Build with CoreML

CoreML is a natural fit for features like:

- Face detection and recognition

- Smart photo categorization

- On-device voice recognition

- Handwriting prediction

- AR object detection

And the list keeps growing.

For developers building iOS AI apps that feel smooth, secure, and responsive, CoreML is the clear go-to.

Best Use Cases

CoreML fits especially well in:

- iOS AI apps (voice assistants, smart keyboards, health tracking)

- Mobile ML apps where privacy is key

- Apps that use on-device inference for fast, offline performance

It’s a top pick for mobile machine learning on iPhones, and continues to improve with every iOS release.

TensorFlow Lite: Flexible and Built for Scale

If your app needs to run AI models directly on mobile devices, fast, reliably, and across platforms, TensorFlow Lite is one of the top choices.

It’s the lighter version of TensorFlow, specifically made for on-device machine learning on phones and tablets.

And in 2025, it is more powerful, efficient, and flexible than ever.

Here’s what developers love:

- It supports Android and iOS

- It works well with CPUs, GPUs, and even Edge AI hardware

- It can run quantized models to reduce size and improve speed

- It runs models converted from TensorFlow and Keras to its compact .tflite format

And best of all, it’s easy to drop into your project thanks to official Android and iOS libraries.

Designed for On-Device Intelligence

Need to run a voice recognition model locally? Want to add real-time object detection from the camera feed?

TensorFlow Lite is built for those use cases where on-device machine learning matters. It’s optimized to:

- Run fast, even on low-power hardware

- Avoid sending data to the cloud (great for privacy and offline use)

- Deliver low-latency results that feel instant to users

That’s why it’s widely used in health apps, smart keyboards, AR tools, and anything that relies on Mobile ML.

Use Cases in the Real World

TensorFlow Lite powers everything from real-time language translation to personalized app experiences. It’s behind many Android features, but it works just as well on iOS when needed.

Perfect for:

- Android-first mobile apps

- Cross-platform apps that run inference on the device

- Apps with limited storage that need a small runtime

- Projects where inference must happen offline or privately

And since it’s backed by Google, the tools and support keep getting better every year.

ONNX vs CoreML vs TensorFlow Lite Comparison

Choosing the right AI framework for your mobile app is not always straightforward.

Each tool brings something different to the table. Some are great for flexibility, others are built for raw speed, and some are deeply tied to specific platforms.

Here is a clear, simple comparison to help you decide.

| Feature | ONNX | CoreML | TensorFlow Lite |
|---|---|---|---|
| Best for | Cross-platform flexibility | iOS-first, privacy-focused apps | Android-first and scale-ready apps |
| Platform support | Android, iOS, Windows, embedded | iOS, iPadOS, macOS | Android, iOS, Raspberry Pi, edge |
| Performance | Fast with ONNX Runtime Mobile | Highly optimized for Apple devices | Smooth on mobile CPUs and GPUs |
| Privacy (on-device) | Yes – runs offline | Yes – Apple is strict on privacy | Yes – great for offline performance |
| Ease of use | Moderate (needs some setup) | Very easy inside Apple’s ecosystem | Friendly and well-documented |
| Model size tools | Quantization supported | Auto-optimized by Apple | Supports quantization for small sizes |
| Integration tools | ONNX Runtime, ONNX Runtime Web | Vision, Create ML, Sound Analysis | Model Maker, ML Kit |

All three are strong mobile AI frameworks, and they all support on-device machine learning. It really comes down to where your users are and how much flexibility or platform integration you need.

Lightweight & Embedded Options for Niche Use Cases

Not every app needs a huge AI engine running in the background. Some apps just need to be smart, without being bulky.

Think fitness bands, budget phones, or apps running where the internet comes and goes. In these cases, lightweight AI frameworks and embedded AI frameworks are the better fit.

Why Lighter AI Makes Sense

If you’re working with limited memory, slower processors, or tight battery budgets, going lightweight matters.

These frameworks are designed to make on-device ML possible without draining resources or slowing things down.

Here’s what you get:

- Faster startup times

- Smaller app size

- Lower power usage

- Smooth performance even on older devices

This is especially helpful for Edge AI mobile applications and other real-time features where speed and efficiency are non-negotiable.

Some Frameworks Built for “Small but Smart”

Here are a few go-to options when you’re building compact, efficient apps using mobile ML frameworks:

- TensorFlow Lite Micro

Designed for microcontrollers and very low-power devices. Ideal for embedded AI use like smart wearables, appliances, or IoT sensors.

- TinyML

Not a single tool, but a growing movement focused on running ML models on the tiniest devices. Great for hardware-constrained environments where full-size frameworks won’t fit.

- ONNX Runtime Mobile (with quantized models)

You can slim down ONNX models for use in mobile or embedded settings. This gives you flexibility if you’re building cross-platform apps with on-device AI.

- MediaPipe by Google

A lightweight, real-time ML framework optimized for visual tasks like face detection, hand tracking, or gesture recognition. Works well for mobile and AR apps that need a small footprint.

When to Use These Tools

These tools are a great match when you’re working on:

- Smartwatches and fitness wearables

- Battery-sensitive Android apps

- Remote health-monitoring devices

- Mobile machine learning on budget phones

- Smart security or utility apps that need to work offline

These kinds of mobile ML SDKs help you build fast, focused AI features, even under tight constraints.

Picking the Right AI Framework for Your App

There’s no perfect AI framework that works for every app, and honestly, that’s kind of the point. What’s “best” depends entirely on what you’re building, who you’re building it for, and what kind of performance or flexibility you need.

Here’s a no-fluff way to figure it out.

Start With Where Your App Lives

- Building for iOS?

Go with CoreML. It’s smooth, quick, and plays really well with Apple’s hardware. If privacy matters or you want your AI to run fully offline, this is a great pick.

- Focusing on Android?

TensorFlow Lite is your friend. It’s well-supported, optimized for Android phones, and gives you access to tools like ML Kit. Great if your users are mostly on Android.

- Doing both?

Check out ONNX or even TensorFlow Lite if you want to use the same model across platforms. They’re more flexible and keep you from having to build two separate versions of everything.

Consider the Stuff That Matters to You

Here are some things worth thinking about:

- Is speed a big deal?

You’ll want something optimized for the device, like CoreML on iPhones or TensorFlow Lite with GPU acceleration.

- Worried about battery or data usage?

Choose lightweight AI frameworks or ones that support on-device ML to avoid constant server calls.

- Got tight storage limits?

Use quantized models and tools that shrink your AI footprint. TensorFlow Lite Micro or a trimmed-down ONNX model can help.

- Already have a model trained?

Stick with whatever framework plays nicely with your current setup. No need to reinvent the wheel.
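For the storage question in particular, a back-of-the-envelope estimate goes a long way. The sketch below multiplies a parameter count by the bytes each weight takes at different precisions. The 4-million-parameter figure and the helper name `model_size_mb` are assumptions for illustration, and real model files carry some extra metadata on top.

```python
# Illustrative only: rough on-disk size of a model's weights by precision.
# The parameter count is a hypothetical example, not a measured model.

BYTES_PER_WEIGHT = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_parameters, dtype):
    """Approximate weight storage in megabytes (ignores file metadata)."""
    return num_parameters * BYTES_PER_WEIGHT[dtype] / 1_000_000


params = 4_000_000  # hypothetical small image classifier
for dtype in BYTES_PER_WEIGHT:
    print(f"{dtype:>7}: {model_size_mb(params, dtype):.1f} MB")
```

If the int8 figure fits your app-size budget and the float32 figure doesn’t, quantization support becomes a hard requirement when you compare frameworks.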

There’s no trophy for picking the most powerful framework. The best one is the one that works reliably, quickly, and without blowing up your app size or draining the user’s battery.

Here’s a cheat sheet:

- CoreML = iOS native, private, fast

- TensorFlow Lite = Android-first, flexible, scalable

- ONNX = Platform-agnostic, open, works with multiple backends

- TinyML / Embedded AI frameworks = Great for wearables, IoT, or edge use
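If it helps to see the cheat sheet as logic, here is the same starting-point rule written as a small function. `suggest_framework` is a hypothetical helper that only encodes the list above; it is not a tool shipped by any of these projects, and a real decision would also weigh your existing models and team skills.

```python
# Illustrative only: the cheat sheet above expressed as a lookup.
# suggest_framework is a hypothetical helper, not part of any SDK.

def suggest_framework(platforms, constrained_hardware=False):
    """Return a first framework to evaluate for the given targets."""
    targets = {p.lower() for p in platforms}
    if constrained_hardware:        # wearables, IoT, microcontrollers
        return "TinyML / embedded AI frameworks"
    if targets == {"ios"}:
        return "CoreML"
    if targets == {"android"}:
        return "TensorFlow Lite"
    return "ONNX"                   # both platforms, or anything else


print(suggest_framework(["iOS"]))
print(suggest_framework(["Android"]))
print(suggest_framework(["iOS", "Android"]))
print(suggest_framework(["Android"], constrained_hardware=True))
```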

Real-World Use Cases with Examples

Let’s be honest.

AI can sound abstract until you see it doing something useful. But the truth is, many of the apps we use every day are already powered by on-device ML, whether we realize it or not.

From fitness tracking to smart shopping to real-time translation, mobile AI frameworks like CoreML, TensorFlow Lite, and ONNX are making our apps smarter and way more helpful.

Here’s what that looks like in real life:

- Smart Cameras & Face Filters

Ever used a filter that tracks your face perfectly, even as you move? That’s on-device AI at work.

- Example: Face filters in apps like Instagram and Snapchat

- What they use: Lightweight mobile ML frameworks like MediaPipe or TensorFlow Lite

- Why it works: It all happens on your phone, fast, private, and without needing the internet.

- Fitness & Wellness Apps

Your running app knows when you’re jogging. Your smartwatch tracks your heartbeat. And none of that data needs to leave your device.

- Example: Apple Health, Strava, Calm

- Frameworks: CoreML (iOS), TensorFlow Lite (Android)

- Key benefit: Keeps personal data private and works offline, great for real-world workouts or low-signal areas.

- Instant Translation & AR Text Reading

Point your camera at a menu in another language and get instant translation. Feels like magic, right?

- Example: Google Translate’s camera mode

- Framework used: TensorFlow Lite

- How it helps: Translation happens on the device, even if you’re offline while traveling.

- Mood & Emotion Tracking

Some mental health apps can sense your mood from how you type, speak, or breathe, without sending anything to a server.

- Example: Mindfulness apps, medical diagnosis apps, journaling tools

- Built with: Small, efficient models using ONNX or CoreML

- Why it matters: It’s private and works in real time.

- Try-On & Visual Search in Shopping Apps

Ever tried virtual lipstick on your face or placed a virtual couch in your room with your phone?

- Example: Sephora, IKEA Place

- Frameworks that fit: ONNX Runtime Mobile, CoreML, or TensorFlow Lite

- Why it’s smart: You don’t need cloud servers to do this; it all happens on-device.

- AR & Gaming Experiences

In gaming, AI isn’t just about beating your opponent; it’s about making the experience responsive and personal.

- Example: Mobile AR games that track your movement

- Powered by: On-device runtimes like an optimized TFLite build, plus TinyML-style models on more constrained hardware

- Upside: Smooth gameplay and cool real-world interactions, no lag, no loading.

- Scanning & Organizing Receipts or Docs

Apps that scan receipts or read business cards use AI to pull data instantly.

- Example: CamScanner, Microsoft Lens

- Frameworks: ONNX, TFLite, OCR tools

- Why it’s efficient: It works even when offline and doesn’t send private data to the cloud.

This isn’t science fiction; it’s what on-device ML is doing right now, thanks to the rise of smart, flexible mobile ML SDKs.

Choosing the Right Framework? Let Boolean Inc. Guide You

Not sure which AI framework fits your app? That’s exactly where we come in.

At Boolean Inc., we don’t throw buzzwords around; we dig into your goals, your tech stack, and your users to help you choose the mobile ML framework that actually makes sense.

Whether it’s CoreML for iOS, TensorFlow Lite for Android, or a cross-platform on-device AI framework like ONNX, we help you move forward with clarity.

Need something lightweight? Need privacy? Need speed?

We’ll help you get there, without wasting time.

Let’s make your app smarter, together.

Conclusion

There’s a lot to consider when picking the right AI framework for your mobile app, and that’s okay.

Whether you’re building for iOS, Android, or both, the goal isn’t just to find the best tool on paper. It’s to find what works best for your app, your users, and your goals.

If you’re leaning toward fast and private AI on iPhones, CoreML is a solid choice. For Android, TensorFlow Lite gets the job done with flexibility and speed. And if you’re building cross-platform, ONNX gives you options without tying you down.

There’s no one-size-fits-all. And honestly, that’s the beauty of it: you can pick the right tool for the job.

And hey, if you’re still unsure? No pressure.

Boolean Inc. is here to help. We’ve helped teams figure this stuff out, build smarter apps, and get their AI features running smoothly, without the guesswork.

Let us know what you’re building. We’ll help you choose wisely and get moving.

You bring the idea. We’ll help make it smart.

FAQs

- Do I really need an AI framework for my mobile app?

If your app uses features like image recognition, voice commands, or personalization, then yes, an AI framework can make those features faster, smarter, and more efficient. And if privacy or offline access matters? Even more reason to go with on-device ML.

- What’s the difference between CoreML, TensorFlow Lite, and ONNX?

Think of it like this:

- CoreML is Apple’s go-to for iOS apps.

- TensorFlow Lite is flexible and Android-friendly.

- ONNX gives you the freedom to go cross-platform without being locked into one ecosystem.

Each one has its strengths; it depends on where your app is headed.

- Is on-device AI better than using cloud-based AI?

Both have their place. But if you want faster response, better privacy, or offline functionality, on-device AI is the way to go. Plus, it saves users from needing a constant internet connection.

- Can small apps use AI frameworks too?

Absolutely. You don’t need to build the next TikTok to use mobile ML frameworks. Even lightweight apps like document scanners or mood trackers benefit from smart features that run on small, on-device frameworks.

- What if I pick the wrong AI framework?

You’re not stuck forever, but switching can be a hassle. That’s why it helps to get advice early on. If you’re not sure which direction to go, a quick chat with a dev team (like us at Boolean Inc.) can save you a lot of time and budget down the road.