Introduction

People tap an icon, ask a question, and expect an answer right away.

That instant feel rests on two pillars: smart on-device models and a rock-solid release pipeline. Put bluntly, “good enough” pipelines are no longer good enough for mobile AI apps.

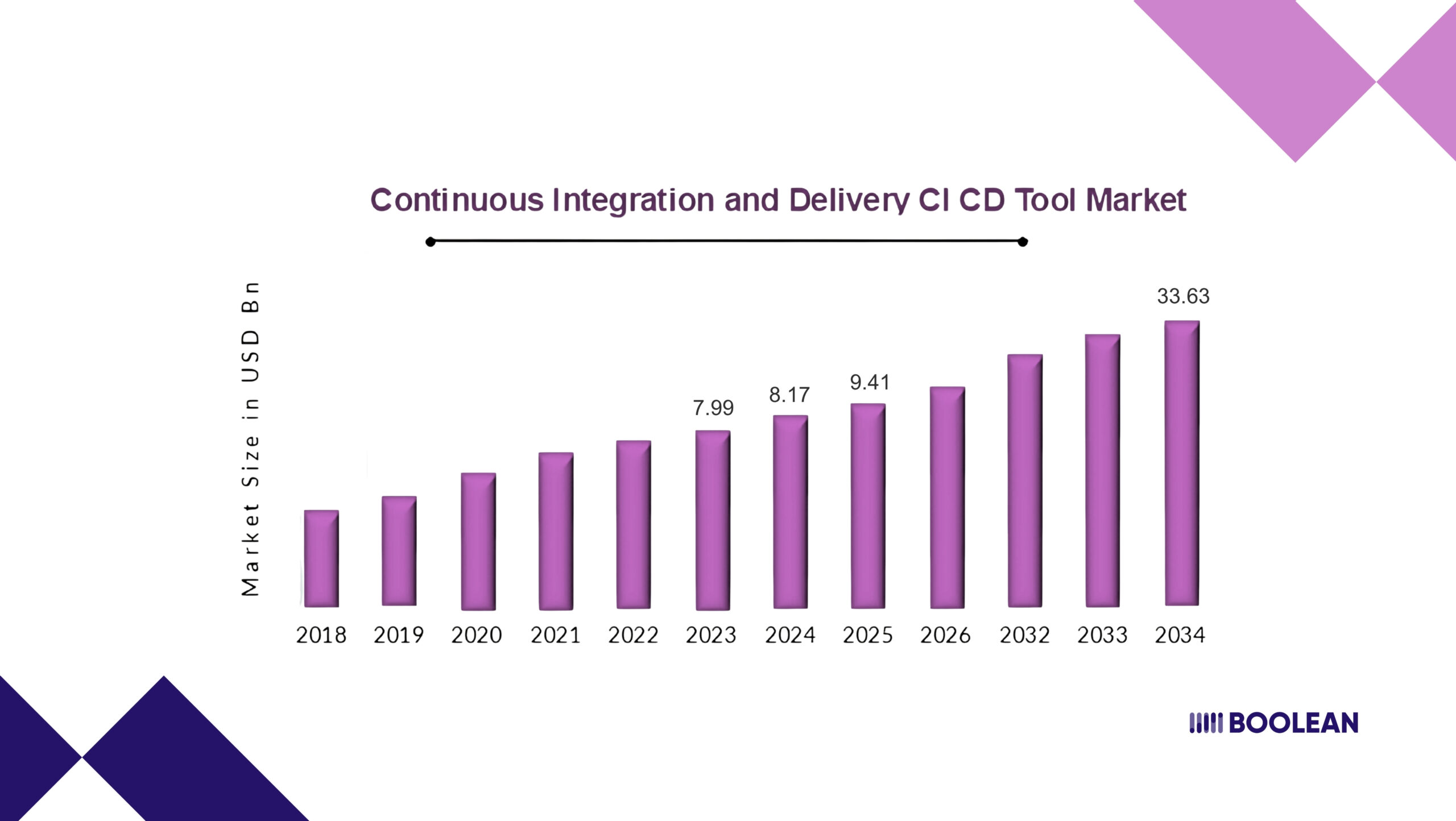

Money flows where speed and safety meet. The Continuous Integration and Delivery (CI / CD) Tool Market sat at USD 8.17 billion in 2024.

Analysts now forecast a jump to USD 9.41 billion by 2025 and a leap to USD 33.63 billion by 2034. That’s a 15.19 % CAGR.

A bigger pie means more vendors, more features, and higher expectations for teams shipping code and models.

So why drill down on CI/CD for mobile AI apps?

Traditional mobile pipelines test UI flows and network calls. Add on-device inference, and the rules change. Models must be versioned, benchmarked, and rolled back as fast as code.

A flaky build can stall an entire user base. A slow model can drain a battery in minutes.

Throughout this guide, we’ll walk through a practical flow that keeps code, models, and testers in sync.

We’ll see how “CI/CD for mobile apps” stretches once AI steps in. By the end, you’ll have a blueprint for real-time inference tests inside your own pipeline, without the sleepless nights.

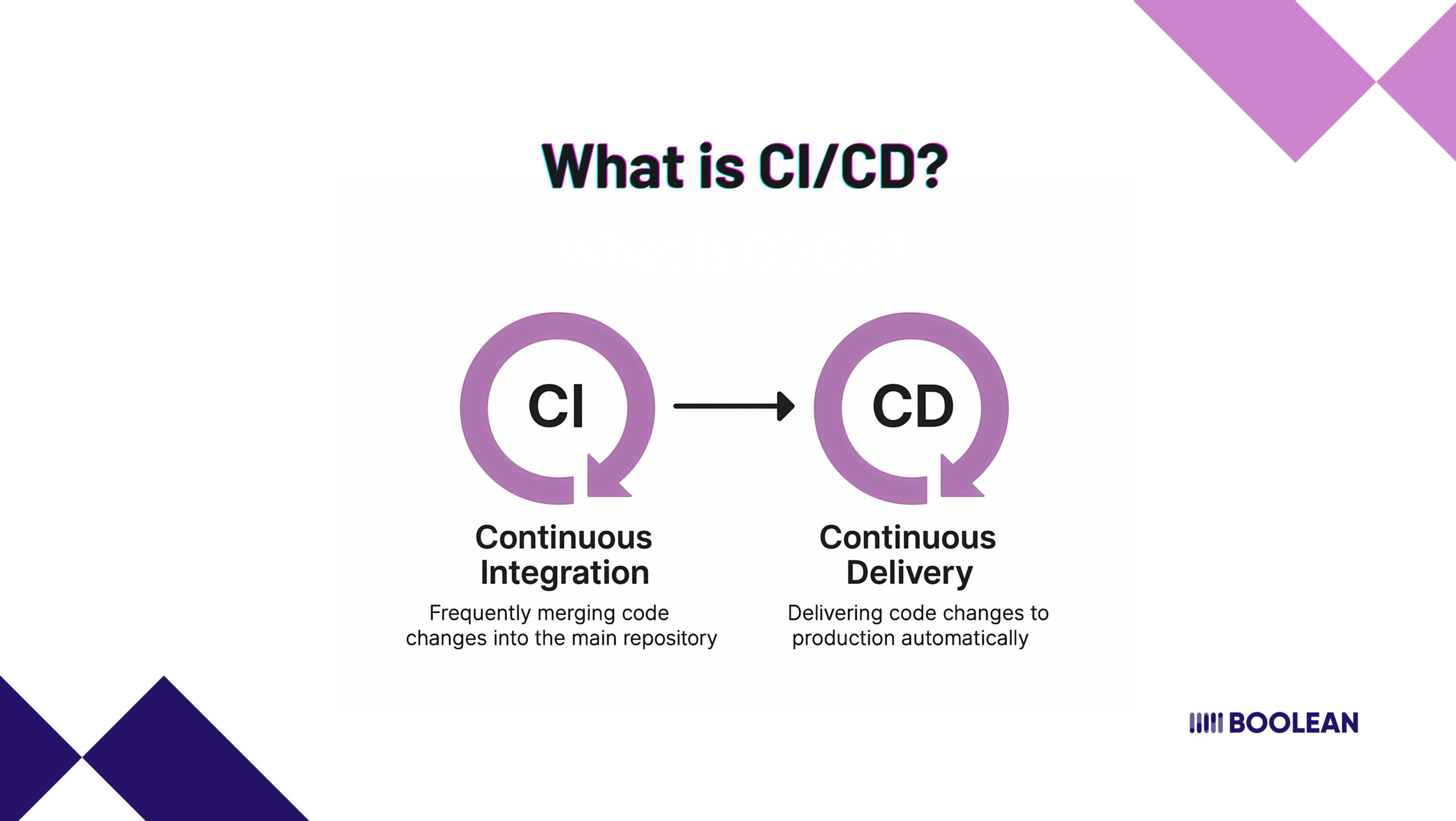

What is CI/CD?

You have probably heard CI/CD come up in technical conversations. It sounds technical, but at its core, it is about making life easier for development teams and keeping apps reliable for users.

Let’s talk about what it really means.

Continuous Integration (CI)

Imagine that your entire team is working on a mobile app. Everyone is pushing code changes, tweaking AI models, and perhaps updating the UI.

Now, picture the chaos if all these changes were tested only right before a release. It would be overwhelming, wouldn’t it? Errors would pile up and take forever to fix.

This is where continuous integration steps in.

With CI, every code change, big or small, is tested as soon as it is added to the codebase. There is no waiting for a “test phase” later.

The test takes place immediately. It checks whether the app still builds, whether the automated tests pass, and whether everything remains stable.

For mobile AI apps, it also means checking whether a new AI model version works with the current app code, because AI is not static. Models evolve, and every small update can break something unexpected.

CI helps you catch those issues early, when they are still easy to fix.

Continuous Delivery (CD)

Continuous Delivery comes into play once everything is integrated and tested, and you want to deliver it.

With CD, your app is always in a “ready-to-ship” state. It doesn’t mean you’re pushing updates to users every hour. But it does mean that if you wanted to release, you could, with zero panic.

No scrambling to create builds. No last-minute fixes because someone forgot to update a version number. The pipeline handles it for you.

For mobile apps that use AI, CD also ensures that the latest model versions are bundled correctly with the app, or even downloaded dynamically if your app supports that. It keeps your workflow clean, predictable, and safe.

Why CI/CD Matters So Much for Mobile AI Apps

Mobile AI apps are a different beast. You’re not just pushing app updates. You’re also dealing with AI models that need to perform in real-time, on devices with varying specs, screen sizes, and hardware.

Every model tweak could affect:

- Performance (Is the app slowing down?)

- Inference speed (Does the AI respond instantly?)

- Accuracy (Does AI make the right decisions?)

Without CI/CD, testing all these variables manually would be exhausting and unreliable. You’d spend more time firefighting than innovating.

CI/CD gives you a safety net. It automates the repetitive checks. It keeps you informed. It ensures that every update, whether it’s code or an AI model, goes through the same reliable process, every single time.

More importantly, it frees you up to focus on improving your app instead of worrying about breaking it.

What is a CI/CD Pipeline?

Think of a CI/CD pipeline as a roadmap to get your software into the hands of users.

It is a step-by-step process that takes every change, whether it is a new feature or a bug fix, and moves it through a series of checks and tasks until it is ready for release.

The best part? Once you define this process, all this can happen automatically.

You do not need to trigger or build anything manually. The pipeline handles it for you. You write the script once, and from then on it is a click of a button, or even fully automated, with a new code push acting as the trigger.

Keeping Everyone in the Loop

Communication matters. Most CI/CD tools let you set up notifications for key moments in the pipeline. For example:

- A build succeeded.

- The tests failed.

- A deployment was completed.

You can get these updates through email, Slack, or whatever your team likes. It keeps everyone aligned and avoids late surprises in the project schedule.

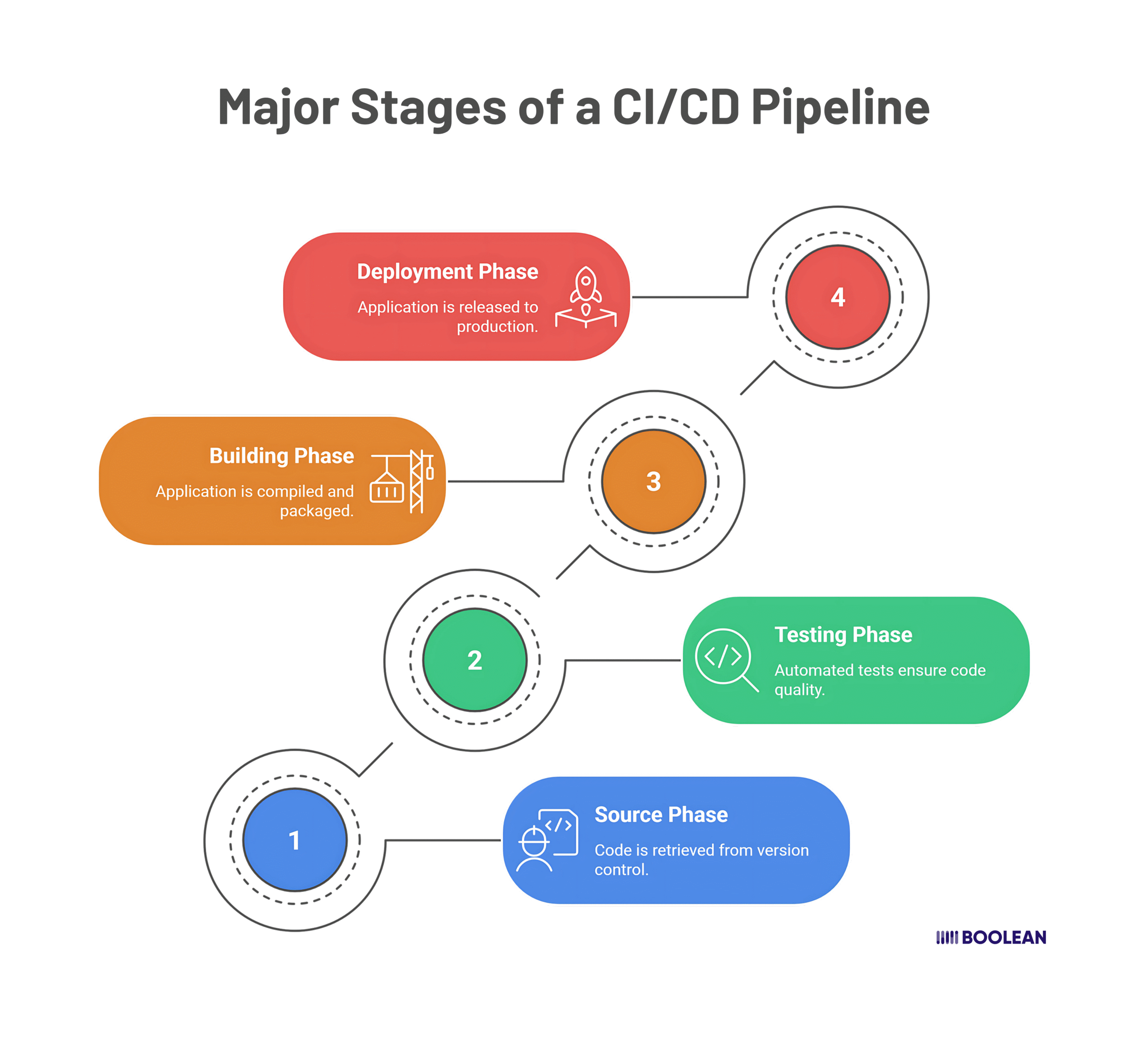

Major Stages of a CI/CD Pipeline

While pipelines can be customized, most of them follow a common structure with these main stages:

- Source Phase

Everything starts here. This is the starting point of the pipeline. Typically, it is a source code repository such as GitHub, GitLab, or Bitbucket.

Whenever someone changes the repository, say, a new AI model is uploaded or a piece of app code is updated, the pipeline automatically triggers.

There is no need for a manual command or reminder.

- Testing Phase

This is the stage that saves you from nasty surprises later. The code that was just pushed goes through a series of automated checks:

- Unit Tests: Check whether individual components of the code work correctly.

- UI Tests: Ensure the app’s user interface behaves as expected.

- Integration Tests: Validate how different parts of the app work together.

For AI apps, this phase can also include checks that the AI models respond correctly and perform within acceptable speed limits.

If something breaks here, the pipeline stops. It’s a red flag telling the team that the problem needs attention before moving forward.

- Building Phase

Now comes the part where the app starts taking shape. The code, AI models, and dependencies are packaged together to create a runnable build of the app.

If there are problems with missing files, broken configurations, or incompatible dependencies, this phase will catch them.

For mobile apps, this is also where code signing happens, especially if you’re preparing a production release on platforms like the Google Play Store or Apple App Store. This ensures the app is verified and safe for distribution.

- Deployment Phase

Once the build passes all tests and is assembled successfully, it’s time to deliver it. This is where the app is pushed to different environments such as:

- Alpha (internal testing)

- Beta (external testers)

- Production (live users)

CI/CD pipelines can help you automate deployments, too.

Whether it’s uploading the app to TestFlight, rolling it out to beta testers, or publishing to app stores, the pipeline can handle it with minimal manual intervention.

Automating this whole flow reduces human error, saves time, and ensures that every change, whether it’s a code update or an AI model update, goes through a consistent, repeatable process.

Challenges of Testing Real-Time Inference on Mobile

Testing a mobile app isn’t easy. Add AI to the mix, especially AI that needs to respond in real-time, and things get even messier.

Let’s face it, AI isn’t just running on cloud servers anymore. Apps are now using real-time edge AI to process data directly on devices.

This means the AI models need to perform live, without depending on internet speed. Sounds great for user experience, but it also adds a new level of testing headaches.

Here are the big challenges teams face when testing real-time AI features in mobile app development:

So Many Devices, So Many Unknowns

Mobile apps don’t run on one perfect device in a lab. They run on thousands of different phones and tablets out in the wild. Each has its own specs, chipsets, and quirks.

An AI model can perform beautifully on a high-end device but lag or misbehave on a budget phone.

Mobile AI frameworks such as CoreML and TensorFlow Lite help you optimize for various devices, but you still need to test to be sure.

Testing across this sea of devices manually is just not practical. You need automation. But even automated device farms have limits.

Performance and Latency Issues Are Hard to Predict

Real-time inference isn’t forgiving. The AI needs to “think” and respond instantly. If it takes even a second too long, users will notice.

When you’re trying to implement on-device RAG (Retrieval-Augmented Generation), performance matters even more.

You’re not just running a model, you’re fetching and processing data right on the device.

Testing for this involves measuring how long it takes for AI outputs to appear during live interactions. And that’s hard to automate in a meaningful way across different devices and usage scenarios.

Constant AI Model Updates Bring Constant Risks

AI is never a “set-it-and-forget-it” feature. Models are always being retrained or fine-tuned. You fix one issue, and suddenly, a small tweak causes unexpected side effects elsewhere in the app.

Imagine you’re pushing updates to LLMs in mobile apps. Every time you tweak the model, you need to test:

- Does it integrate with the app correctly?

- Does it respond as fast as before?

- Is the accuracy still reliable on-device?


Without a solid CI/CD pipeline, you’ll spend more time chasing bugs than improving features.

Real-World Usage is Hard to Simulate

Your app might work perfectly in a quiet office, but what about in a noisy cafe? Or in poor lighting? Or on a shaky mobile network?

These are real-world conditions that impact how AI behaves. Testing AI in software development isn’t just about checking if it functions; it’s about checking if it functions well under messy, unpredictable conditions.

Automating tests for these scenarios is challenging, but necessary. Otherwise, you risk shipping features that fall apart in actual usage.

Testing Can’t Slow You Down

Here’s the tough part. AI testing is complex, but your pipeline still needs to stay fast. Long, heavy test cycles slow down the app development process. Teams get frustrated. Releases get delayed.

You need a CI/CD setup that balances depth (catching significant AI issues) with speed (not turning your pipeline into a bottleneck). It is a delicate balance, but an achievable one with the right structure.

These challenges are real. That is why testing real-time AI inference should be built directly into the CI/CD process for your mobile app.

This is not an additional step; if you want to deliver a reliable, AI-powered mobile experience, it is part of the core workflow.

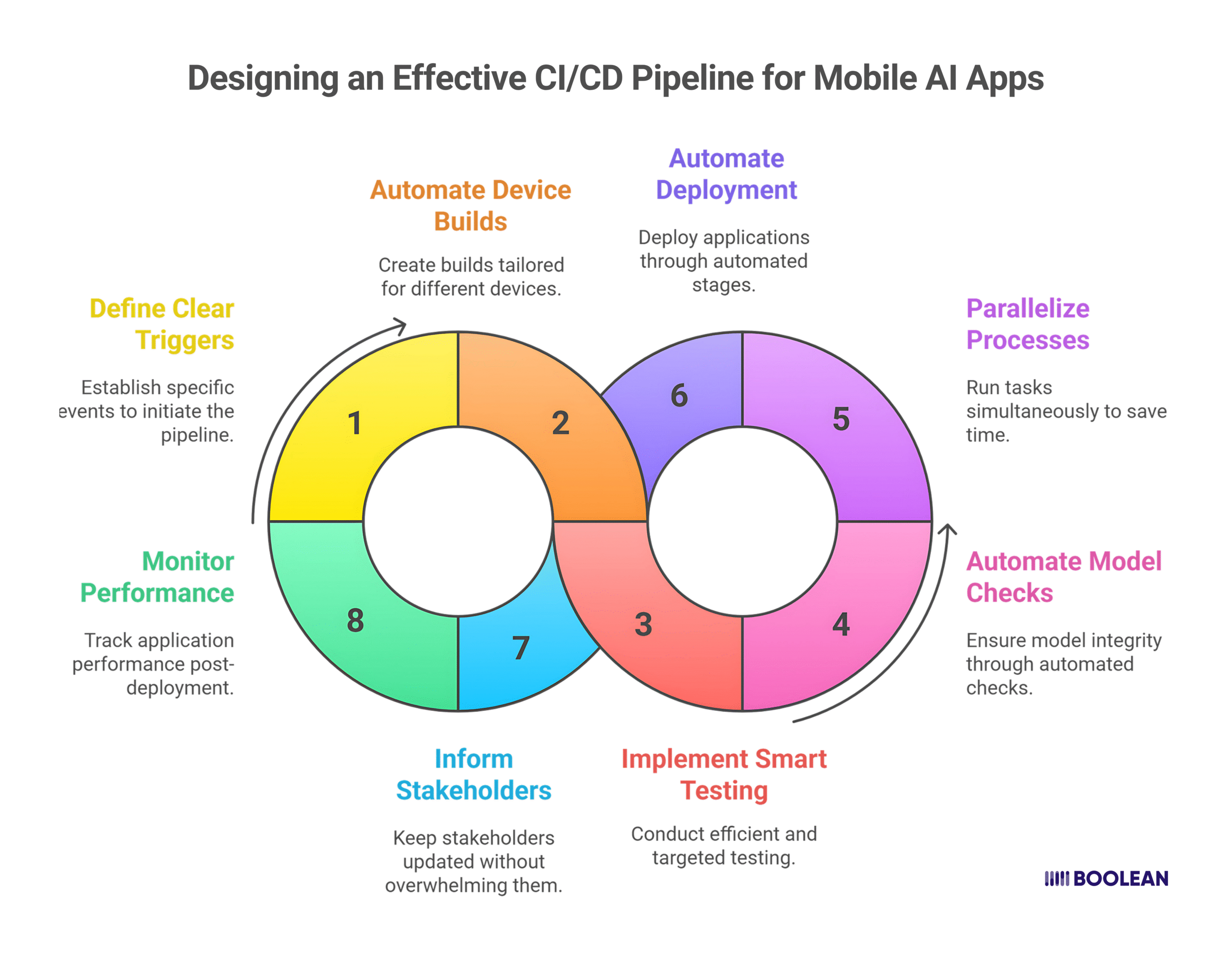

Designing an Effective CI/CD Pipeline for Mobile AI Apps

Building a CI/CD pipeline for mobile apps is already a challenge. But once you throw AI into the mix, things get trickier. You’re no longer just dealing with code updates. You’re also managing AI models, real-time inference tests, and device performance checks.

So, how do you design a pipeline that can handle all this without slowing down your team?

Let’s break it down into simple, practical steps.

- Start with Clear Triggers

First things first, decide what should trigger the pipeline:

- A new code push?

- An AI model update?

- A pull request?

For mobile AI apps, it’s a good idea to trigger the pipeline not only when code changes but also when new AI models are committed to the repository. This keeps both developers and AI engineers in sync.
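As a rough illustration of the trigger rule above, the sketch below maps changed file paths to the jobs that should run. The directory layout, file extensions, and job names (`app_build`, `model_validation`) are assumptions made for this example, not part of any particular CI tool.

```python
from fnmatch import fnmatchcase

# Hypothetical repository layout; adjust the patterns to your own project.
TRIGGER_RULES = {
    "app_build": ["app/*", "ios/*", "android/*"],
    "model_validation": ["models/*.tflite", "models/*.mlmodel"],
}


def jobs_for_changes(changed_files):
    """Return the sorted job names whose patterns match any changed file."""
    jobs = set()
    for job, patterns in TRIGGER_RULES.items():
        if any(fnmatchcase(path, pat) for path in changed_files for pat in patterns):
            jobs.add(job)
    return sorted(jobs)


# A commit that only touches a model file triggers model validation alone.
print(jobs_for_changes(["models/classifier_v3.tflite"]))
```

Most CI services let you express the same idea declaratively with path filters; a script like this is mainly useful when the rules grow beyond what the service supports.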


- Automate Device-Specific Builds Early

Unlike web apps, mobile apps require builds for different platforms (iOS and Android).

If you’re using mobile AI frameworks like CoreML (iOS) or TensorFlow Lite (Android), you want to automate this build right from the start.

Set up your CI/CD pipeline to generate platform-specific builds immediately after the code passes initial tests. This saves time later and helps catch device-specific build problems early.

- Make Testing Smart, Not Heavy

You can’t run every possible test for every small change. It will slow down your pipeline and frustrate your team. Instead:

- Run unit tests for every small code update.

- Schedule full integration tests for significant merges.

- For AI inference tests, create a dedicated testing suite that focuses on speed and accuracy on real devices or emulators.

Use real-time edge AI testing tools that simulate real-world conditions (like poor lighting or background noise). But keep it selective, only run deep AI tests when necessary.
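One way to picture this tiering is a small function that decides which suites to run for a given pipeline event. The event names (`push`, `merge_to_main`) and suite names are hypothetical and only stand in for whatever your own pipeline uses.

```python
def select_test_suites(event, model_changed):
    """Pick the test suites for one pipeline run.

    event: "push" or "merge_to_main" (hypothetical event names).
    model_changed: True when a model file is part of the change.
    """
    suites = ["unit"]                  # cheap, so run on every change
    if event == "merge_to_main":
        suites.append("integration")   # full integration for significant merges
    if model_changed:
        suites.append("ai_inference")  # deep AI tests only when a model changed
    return suites


print(select_test_suites("push", model_changed=False))
print(select_test_suites("merge_to_main", model_changed=True))
```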

- Automate Model Integration Checks

Every time a new AI model is added, your pipeline should automatically:

- Validate the model’s compatibility with the current app code.

- Run basic inference tests to ensure it responds within acceptable latency.

- Bundle it into the mobile app build.

You don’t want to discover at the last moment that a model update broke the app.
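The compatibility check in the list above could look something like the following sketch. It assumes the app declares the tensor shapes and data type it expects, and that the same fields can be read from the new model; the `ModelSignature` type and its fields are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSignature:
    """Hypothetical description of what a model consumes and produces."""
    input_shape: tuple
    output_shape: tuple
    dtype: str


def compatibility_errors(expected, actual):
    """Compare the signature the app expects with the new model's signature."""
    errors = []
    for field in ("input_shape", "output_shape", "dtype"):
        want, got = getattr(expected, field), getattr(actual, field)
        if want != got:
            errors.append(f"{field}: app expects {want}, model provides {got}")
    return errors


app_contract = ModelSignature((1, 224, 224, 3), (1, 1000), "float32")
new_model = ModelSignature((1, 256, 256, 3), (1, 1000), "float32")
for problem in compatibility_errors(app_contract, new_model):
    print(problem)
```

An empty list means the model can be bundled; anything else should stop the pipeline before the build step.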

- Parallelize What You Can

One of the smartest ways to keep your pipeline fast is to run processes in parallel:

- Build iOS and Android apps at the same time.

- Run unit tests while builds are happening.

- Execute AI model validation alongside UI tests.

Parallel execution saves hours in long pipelines and keeps the team’s feedback loop short.
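CI services usually express parallel jobs in their own configuration, but the idea can be sketched in a plain script as well. In the example below, the step names and the placeholder lambdas are assumptions; in a real pipeline each callable would wrap an actual build or test command.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel(tasks):
    """Run independent pipeline steps concurrently.

    tasks: dict mapping a step name to a zero-argument callable.
    Returns a dict mapping each step name to its result. An exception
    raised inside a step is re-raised here when its result is collected.
    """
    with ThreadPoolExecutor(max_workers=len(tasks) or 1) as pool:
        futures = {name: pool.submit(fn) for name, fn in tasks.items()}
        return {name: future.result() for name, future in futures.items()}


# Placeholder steps standing in for real build and test commands.
results = run_parallel({
    "build_ios": lambda: "ios ok",
    "build_android": lambda: "android ok",
    "unit_tests": lambda: "tests ok",
})
print(results)
```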

- Automate Deployment Stages Carefully

Set up different deployment stages:

- Alpha builds for internal testing.

- Beta releases for early adopters.

- Production for public release.

You can automate these steps, but always keep manual approvals before pushing to production.

This adds a final checkpoint for human review, especially important when dealing with AI in software development, where model behavior might need a last-minute sanity check.
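The promotion rule described above, automated up to beta with a manual gate before production, can be sketched as a small function. The stage names come from the list above; the `approved_by` parameter is a hypothetical way to record who signed off.

```python
STAGES = ["alpha", "beta", "production"]


def next_stage(current, tests_passed, approved_by=None):
    """Return the stage a build may move to, or None if it must stay put."""
    if not tests_passed:
        return None
    index = STAGES.index(current)
    if index + 1 >= len(STAGES):
        return None                      # already in production
    target = STAGES[index + 1]
    if target == "production" and not approved_by:
        return None                      # manual approval gate
    return target


print(next_stage("alpha", tests_passed=True))
print(next_stage("beta", tests_passed=True))
print(next_stage("beta", tests_passed=True, approved_by="release-manager"))
```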

- Keep Everyone Informed (Without Spamming Them)

Your CI/CD pipeline should send notifications at critical points:

- Build failures.

- Test failures.

- Successful deployments.

But don’t flood the team with minor alerts. Set up smart notifications in apps like Discord, Slack, or email, so the right people get the right updates at the right time.
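A minimal sketch of “smart” notifications might route each event type to a fixed set of channels and suppress repeats. The channel names and the routing table below are made up for the example; the actual delivery to Slack, Discord, or email is left out.

```python
# Hypothetical routing table: event type -> channels to notify.
ROUTES = {
    "build_failed": ["slack:#mobile-builds", "email:oncall"],
    "test_failed": ["slack:#mobile-builds"],
    "deploy_succeeded": ["slack:#releases"],
}


def route_notification(event_type, key, already_sent):
    """Return the channels to notify, skipping unknown events and repeats.

    key identifies one occurrence (for example event type plus commit id);
    already_sent is a set that this function updates in place.
    """
    if event_type not in ROUTES or key in already_sent:
        return []
    already_sent.add(key)
    return ROUTES[event_type]


sent = set()
print(route_notification("build_failed", "build_failed:abc123", sent))
print(route_notification("build_failed", "build_failed:abc123", sent))  # repeat, suppressed
```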

- Monitor After Deployment

CI/CD doesn’t stop after deployment. Use monitoring tools to track AI inference performance in real user environments.

This feedback can be invaluable for teams working on LLMs in mobile apps or apps with complex AI features.
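As one possible shape for that feedback, the sketch below summarizes inference latencies reported from the field using the nearest-rank percentile. How the samples are collected, and the latency budget itself, are assumptions you would replace with your own telemetry and targets.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct between 0 and 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(samples_ms, budget_ms):
    """Summarize on-device inference latencies against a budget."""
    p95 = percentile(samples_ms, 95)
    return {
        "p50_ms": percentile(samples_ms, 50),
        "p95_ms": p95,
        "over_budget": p95 > budget_ms,
    }


samples = [40, 42, 45, 50, 48, 41, 43, 44, 47, 120]
print(latency_report(samples, budget_ms=100))
```

Tracking a high percentile rather than the average makes slow outliers, such as the 120 ms sample here, visible instead of hiding them.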

The Goal: Smooth, Reliable, Fast Releases

An effective CI/CD pipeline for mobile AI apps should feel like a smooth flow:

- Developers push changes.

- AI engineers update models.

- The pipeline handles the heavy lifting.

- The team gets quick feedback.

- Releases happen with confidence.

It’s not about making it overly complex. It’s about making it repeatable, reliable, and fast.

Best Practices for Testing Real-Time AI in CI/CD

Testing real-time AI is different.

You’re not just checking if a button works or a screen loads. You’re testing how fast and how accurately an app can “think” on the spot.

And when you’re running these tests inside a CI/CD pipeline, things need to be smart, automated, and efficient.

Here are some best practices that will actually make a difference:

- Test on Real Devices (Not Just Emulators)

Emulators are great for quick checks. But they cannot fully replicate the quirks of real devices.

Performance bottlenecks, thermal throttling, and hardware-specific issues often do not show up on an emulator.

Run the tests on a small pool of real devices representing various performance levels: high-end, mid-range, and budget devices.

This gives you a clear picture of how your AI features perform in the real world.

- Keep AI Inference Tests Lightweight and Targeted

You can’t afford to run heavy AI tests on every code change. Instead:

- Identify key AI functions that are critical to the user experience.

- Write fast, focused tests that validate inference speed and accuracy for these functions.

For example, if your app does live image recognition, test how quickly it returns results under normal lighting conditions. Don’t run full dataset evaluations in the pipeline; that’s best left for offline testing.

- Simulate Real-World Scenarios Early

Don’t wait for production to discover that your AI features fail in noisy environments or poor lighting. Simulate these scenarios during automated tests:

- Add background noise for voice recognition tests.

- Alter brightness and contrast for image-based AI.

- Introduce network delays if the AI falls back to cloud inference.

It doesn’t need to be exhaustive. Even a basic simulation can catch obvious failures before they reach users.
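Two of the perturbations listed above can be approximated with very little code. The sketch below works on plain Python lists (8-bit grayscale pixels and floating-point audio samples) purely for illustration; a real suite would more likely apply the same idea through an image or audio library before feeding inputs to the model.

```python
import random


def adjust_brightness(pixels, factor):
    """Scale 8-bit grayscale pixel values, clamping to the 0..255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in pixels]


def add_noise(samples, noise_level, seed=0):
    """Add uniform noise in [-noise_level, +noise_level] to audio samples."""
    rng = random.Random(seed)  # seeded so test runs are reproducible
    return [s + rng.uniform(-noise_level, noise_level) for s in samples]


dim_image = adjust_brightness([[100, 200], [50, 255]], factor=0.5)
noisy_audio = add_noise([0.0, 0.5, -0.5], noise_level=0.1)
print(dim_image)
```

Seeding the noise generator keeps the perturbed inputs identical between runs, so a failure can be reproduced rather than chased.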

- Measure Latency in Every Test Run

Speed is everything with real-time AI. Your tests should always measure latency, not just “does it work”, but “how fast does it respond”.

Set thresholds:

- If inference takes longer than X milliseconds, fail the test.

- If the frame rate drops below a certain point during AI processing, flag it.

Keep it simple, but consistent. This ensures performance regressions are caught early.
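A latency threshold check along these lines can be sketched as follows. The `infer` callable is a stand-in for whatever invokes your model, and the budget is a parameter you would choose per feature and device tier; neither is prescribed here.

```python
import statistics
import time


def measure_latency_ms(infer, sample, runs=20, warmup=3):
    """Return the median wall-clock latency of infer(sample) in milliseconds."""
    for _ in range(warmup):          # warm-up runs are not timed
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings)


def assert_within_budget(latency_ms, budget_ms):
    """Fail the test when the measured latency exceeds the budget."""
    if latency_ms > budget_ms:
        raise AssertionError(
            f"inference took {latency_ms:.1f} ms, budget is {budget_ms} ms"
        )


def fake_infer(sample):
    """Placeholder workload standing in for a real model call."""
    return sum(range(1000))


latency = measure_latency_ms(fake_infer, sample=None)
print(f"median latency: {latency:.3f} ms")
```

Using the median of several timed runs, after a few untimed warm-up runs, makes the check less sensitive to one-off spikes than a single measurement would be.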

- Use Versioned AI Models in Tests

Always test against specific versions of your AI models. Don’t rely on “latest” by default. Versioning ensures you know exactly which model is being tested and deployed.

This also makes it easier to roll back if a new model introduces unexpected issues.
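One way to enforce pinning is to record each model's version and a content hash in a manifest, then refuse anything that does not match. The manifest structure and the version string below are hypothetical; only the SHA-256 comparison itself is standard.

```python
import hashlib


def sha256_hex(data):
    """Hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_pinned_model(name, model_bytes, manifest):
    """Check that the model bytes match the entry pinned in the manifest."""
    entry = manifest.get(name)
    if entry is None:
        raise KeyError(f"model {name!r} is not pinned in the manifest")
    if entry["version"] == "latest":
        raise ValueError("pin an explicit version instead of 'latest'")
    return sha256_hex(model_bytes) == entry["sha256"]


# Stand-in bytes; in practice these would be read from the model file.
model_bytes = b"example model contents"
manifest = {"classifier": {"version": "1.4.2", "sha256": sha256_hex(model_bytes)}}
print(verify_pinned_model("classifier", model_bytes, manifest))
```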

- Automate Model Validation Before Integration

Before a new AI model becomes part of the app build, run automated validations:

- Check model size to ensure it doesn’t bloat the app.

- Run basic inference tests to verify output consistency.

- Ensure compatibility with the current app code.

This step acts as a safety net that catches problems before they reach the build phase.
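The size and output-consistency checks from the list above might be sketched like this. The size limit, the tolerance, and the idea of comparing against previously recorded “golden” outputs are assumptions for the example rather than fixed rules.

```python
def within_size_budget(model_size_bytes, max_bytes):
    """True when the model file fits the size budget for the app bundle."""
    return model_size_bytes <= max_bytes


def outputs_consistent(new_outputs, golden_outputs, tolerance=1e-3):
    """True when every new output is within tolerance of its golden value."""
    if len(new_outputs) != len(golden_outputs):
        return False
    return all(
        abs(new - golden) <= tolerance
        for new, golden in zip(new_outputs, golden_outputs)
    )


# Hypothetical numbers: a 12 MB model against a 20 MB budget.
print(within_size_budget(12_000_000, max_bytes=20_000_000))
print(outputs_consistent([0.9001, 0.0999], [0.9, 0.1]))
```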

- Parallel Testing for Speed

Speed matters, even in testing. Design your CI/CD pipeline to run AI tests in parallel with other test suites.

For example, while UI tests are running, you can simultaneously validate AI inference on real devices.

This saves valuable time and keeps your development workflow smooth.

- Analyze Failures Fast with Clear Logs

AI test failures can be tricky to debug. Make sure your test scripts generate detailed, but readable logs:

- Capture inference times.

- Record device resource usage.

- Save input/output samples when tests fail.

Good logging reduces time spent figuring out what went wrong and speeds up fixing the issue.
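A failure log covering the three items above could be emitted as one JSON line per failed test, which keeps it both readable and easy to search. The field names and the example values are illustrative only.

```python
import json


def failure_record(test_name, device, latency_ms, memory_mb, sample_input, sample_output):
    """Build one JSON log line describing a failed AI test."""
    record = {
        "test": test_name,
        "device": device,
        "latency_ms": latency_ms,        # inference time
        "memory_mb": memory_mb,          # device resource usage
        "input": sample_input,           # input sample that failed
        "output": sample_output,         # what the model returned
    }
    return json.dumps(record, sort_keys=True)


line = failure_record(
    "image_recognition_latency",
    "budget-phone-01",                  # hypothetical device label
    latency_ms=182.4,
    memory_mb=311,
    sample_input="samples/cat_low_light.jpg",
    sample_output={"label": "dog", "score": 0.41},
)
print(line)
```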

- Keep Feedback Loops Short

Developers and AI engineers need quick feedback. If a model fails in tests, they should know immediately, along with clear reasons.

Integrate notifications into Slack or email, but avoid spamming. Focus on important failures that need attention.

- Continuously Improve Test Coverage (But Stay Practical)

AI evolves fast. Your tests need to evolve, too. Regularly review test cases to cover new features or edge cases, but avoid overcomplicating.

Focus on high-impact tests that catch real issues without dragging down the speed of the pipeline.

Testing real-time AI in CI/CD isn’t about making it perfect. It’s about making it practical, reliable, and fast enough to keep up with your team’s workflow.

Conclusion

Building mobile apps that run AI smoothly isn’t easy.

It’s one thing to have cool AI features, but making sure they actually perform well, update without headaches, and reach users quickly? That takes a solid CI/CD pipeline.

At Boolean Inc., we get it. You want your team to focus on building amazing features, not getting stuck fixing broken builds or slow tests.

This is why building smart, automated pipelines is not just a technical process for us; it is about keeping your team stress-free and your app always ready for the next release.

Let’s talk if you want to simplify your CI/CD for mobile AI apps, or to test real-time inference without slowing down your growth.

👉 Get a CI/CD strategy that works for your AI-powered apps.

👉 Need expert guidance? Contact us for a personal consultation.

FAQs

- Why is CI/CD important for mobile AI apps?

Because AI features update often, and mobile apps need frequent releases. CI/CD helps automate testing and deployment so you’re not stuck doing everything manually each time.

- Can I test AI inference speed in a CI/CD pipeline?

Yes, you can! You just need to set up automated tests that measure how fast your AI model responds on actual devices or emulators.

- Do I need different pipelines for iOS and Android AI apps?

Not necessarily. You can design one pipeline that builds both versions in parallel. But you’ll need to handle platform-specific build steps inside that flow.

- How often should I run full AI tests in CI/CD?

Run lightweight AI tests on every change, but save full, deep AI testing for major updates or pre-release builds. This keeps things efficient.

- Can Boolean Inc. help set up my CI/CD pipeline for AI apps?

Absolutely! We help teams automate their CI/CD workflows so you can focus on building great apps while the pipeline takes care of the rest.