Introduction

LLMs in mobile apps are changing how we interact with technology every day.

Just a few years ago, running these advanced models on a phone seemed out of reach.

Now, it’s becoming a reality.

With more users pushing the limits of LLMs in mobile apps, the experience of using AI is getting faster, more private, and easier to personalize.

From writing tools to voice assistants, these models are reshaping what mobile apps can actually do, without needing a constant internet connection.

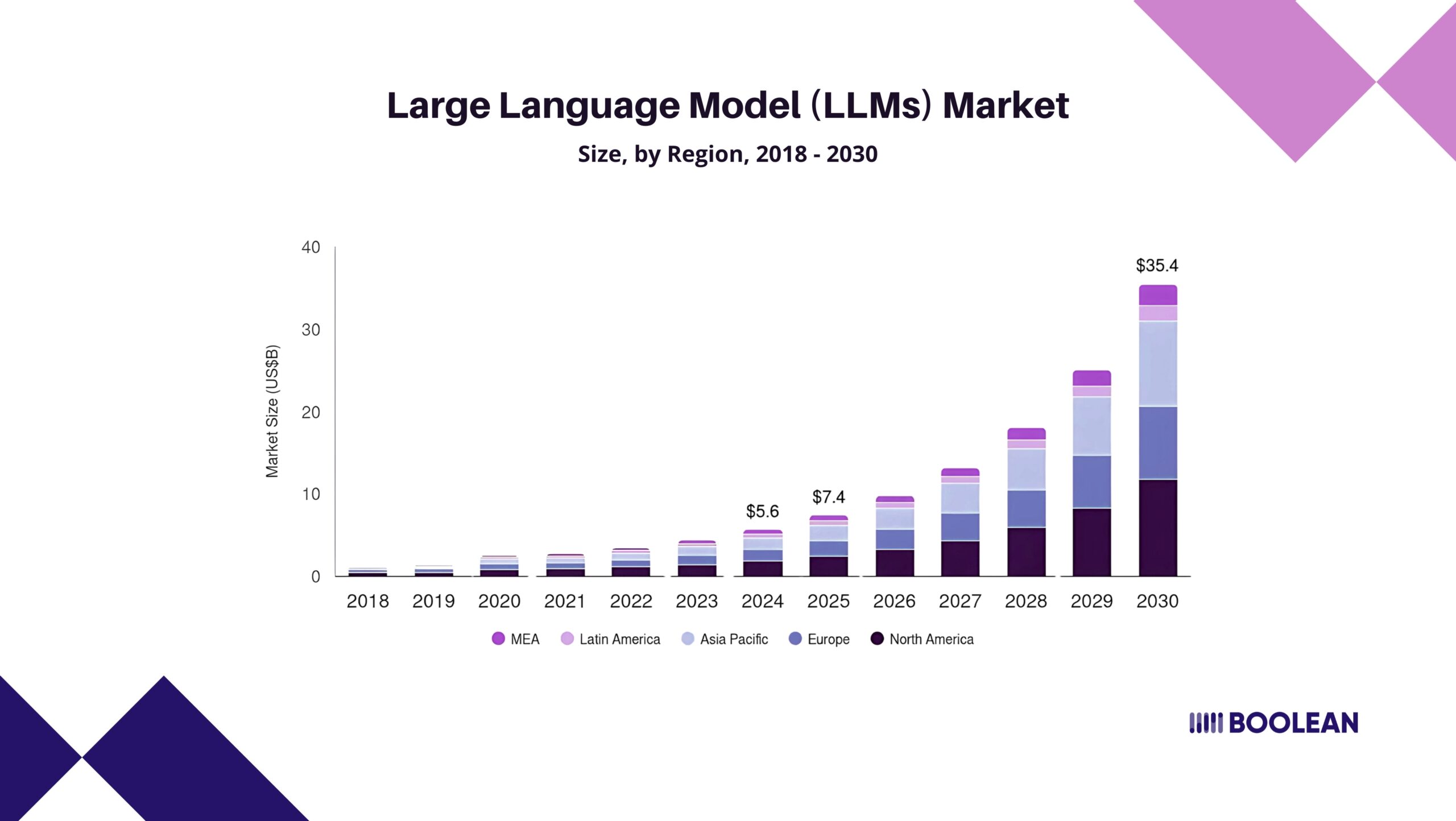

The numbers tell a clear story: the global large language models market was valued at over $5.6 billion in 2024.

Projections indicate it will reach over $35 billion by 2030, maintaining an annual growth rate of approximately 37%.

So what’s driving this change?

People want speed. They want privacy. And they don’t always want to rely on a cloud server sitting halfway across the globe.

Local LLM deployment offers a way to bring more control and better performance to apps, especially when you’re offline or on a slow connection.

In this post, we’ll walk through some of the most promising open source mobile LLM options like Phi-3 and Gemma.

We’ll also explore how tiny LLMs are being embedded into everyday apps, and what you should think about if you’re planning to build or integrate one yourself.

Why Run LLMs on Mobile?

Let’s be honest, cloud-based AI isn’t always the answer. It’s powerful, sure. But it comes with trade-offs: latency, data privacy concerns, and the constant need for a stable connection.

Running models directly on your device, what many call on-device LLMs, brings everything closer to the user.

Responses feel instant. Data doesn’t need to leave the phone. And yes, even when you’re offline, your app can still function like it’s connected to something much bigger.

This is why local LLMs are getting serious attention. You get the benefits of AI without depending on remote infrastructure.

Plus, you save on server costs and reduce app complexity. For startups and indie developers, that’s huge.

It also opens the door for smarter app experiences, like real-time edge AI in mobile apps. Think voice-to-text, language translation, summarization, or code suggestions, all happening on the spot. No round-trip to the cloud. No delay.

With more open source LLM options popping up, it’s getting easier to build these experiences without starting from scratch.

Tools like Phi-3 and Gemma are making it possible to embed compact, efficient models into mobile environments without overwhelming your app.

This shift is also aligning well with trends like composable architecture in mobile apps. Developers are thinking in smaller, flexible building blocks, and embedding LLMs in apps fits that mindset perfectly.

Of course, performance still matters. You want an efficient LLM for mobile, not one that drains the battery or crashes older devices. Compact models fit the bill: they’re smaller, optimized, and surprisingly capable for tasks like summarizing, answering questions, or personalizing content.

Security also becomes easier to manage when you run models locally.

You’re not shipping sensitive data off to a server. It fits naturally with ideas like zero-trust mobile security, where you assume every part of the system needs to be verified.

And let’s not forget about user experience. Paired with subtle UI animation, fast on-device responses feel more native and responsive. No awkward loading spinners. Just fast feedback that makes your app feel smarter.

So whether you’re building something new or improving an existing app, LLMs on mobile open up serious possibilities.

And with better tools for LLM deployment, more developers are exploring this path. The demand for LLM mobile apps is real, and growing.

From running LLMs locally to picking the best LLMs for mobile, it’s no longer about asking if this is possible. It’s about figuring out how to make it work for your use case.

Key Considerations for Mobile Integration

Before you start plugging a language model into your app, take a breath. There’s more to think about than just model size.

Running LLMs in mobile apps brings a new set of questions. Some are technical. Some are about trade-offs. Others are about what actually makes sense for your users.

- Device Constraints Are Real

Mobile devices aren’t mini-servers. RAM is limited, battery life matters, and performance varies a lot between devices. A model that works well on a flagship phone might crawl on older hardware.

That’s why choosing a lightweight LLM for apps is key.

Start small. Optimize aggressively. Efficient LLMs for mobile are those that deliver just enough performance for your use case, without overwhelming the system.

- Local vs Cloud: Choose What Fits

Should you run the model locally or lean on the cloud? It depends.

Local LLMs offer better speed, offline access, and improved privacy. But they’re limited by what the device can handle. Cloud-based models give you more horsepower, but add latency and ongoing server costs.

The right move might be somewhere in between. Hybrid setups are growing: LLM deployment that starts local but falls back to the cloud when needed.
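To make the hybrid idea concrete, here is a minimal sketch of local-first routing with a cloud fallback. Everything in it is an assumption for illustration: the `answer` function, the `max_local_chars` budget, and the stand-in backends are hypothetical, and a real app would call its own runtime and API client instead.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Reply:
    text: str
    source: str  # "local" or "cloud"


def answer(
    prompt: str,
    run_local: Callable[[str], str],
    run_cloud: Optional[Callable[[str], str]] = None,
    max_local_chars: int = 2000,  # hypothetical budget for the on-device model
) -> Reply:
    """Try the on-device model first; use the cloud only when needed."""
    if len(prompt) <= max_local_chars:
        try:
            return Reply(run_local(prompt), "local")
        except (MemoryError, RuntimeError, TimeoutError):
            pass  # the local model could not handle it; try the fallback below
    if run_cloud is None:
        raise RuntimeError("local model unavailable and no cloud fallback configured")
    return Reply(run_cloud(prompt), "cloud")


# Stand-in backends, for illustration only.
def fake_local(prompt: str) -> str:
    return "local: " + prompt.upper()


def fake_cloud(prompt: str) -> str:
    return "cloud: " + prompt.upper()


def broken_local(prompt: str) -> str:
    raise MemoryError("model does not fit in RAM")


print(answer("hi", fake_local, fake_cloud).source)
print(answer("hi", broken_local, fake_cloud).source)
```

Keeping the cloud callable optional means an offline-only build can reuse the same code path and simply surface an error state in the UI.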

- Frameworks and Format Compatibility

Your deployment toolkit matters. Whether you’re using ONNX Runtime, TensorFlow Lite, or something like llama.cpp, you’ll want to make sure your model actually runs, and runs well, on your chosen stack.

Not all open source LLM projects are equally ready for mobile. Some provide clean ONNX exports. Others need more tweaking. And some don’t support quantization out of the box.

The smoother your toolchain, the faster your LLM mobile app gets to market.

- Quantization and Optimization

If you want real-world performance, you’ll need to shrink your model. Quantization reduces model size and makes it easier to run on-device. Go for 8-bit or even 4-bit when possible.

Many on-device LLMs now support these formats. It’s one of the reasons we’re seeing a jump in adoption.

Running LLMs locally becomes much more practical when the model doesn’t consume half of your phone’s memory.
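A quick back-of-the-envelope calculation shows why bit width matters so much. The sketch below estimates the size of the weights alone; the helper name is made up for this post, and the 3.8B figure is used as an example parameter count (it matches Phi-3 mini). It ignores runtime overhead such as the KV cache, activations, and quantization metadata, so treat the numbers as a lower bound.

```python
def weight_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Example: a 3.8B-parameter model at three common precisions.
for bits in (16, 8, 4):
    print(f"3.8B params at {bits}-bit: ~{weight_size_gb(3.8e9, bits):.1f} GB")
```

At 16-bit that works out to roughly 7.6 GB, at 8-bit about 3.8 GB, and at 4-bit about 1.9 GB, which is the difference between "won’t load" and "fits comfortably" on many phones.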

- Choose Models with Long-Term Support

Working with an open source LLM? That’s great, but do your homework. Look for active repos, a helpful community, and frequent updates. Dead projects waste time.

If you’re going to embed an LLM in your app, you want confidence that it will keep improving, and that you won’t be the only one fixing bugs.

- Match the Model to the Use Case

Not every app needs a model with billions of parameters. If your use case is narrow, such as summarization, text classification, or smart autofill, you may achieve better results with smaller models specifically tuned for the task.

Keep it focused. That’s what makes embedding LLMs in apps successful. It’s not just about adding AI, it’s about adding the right AI.

- Don’t Forget Privacy and UX

Mobile LLMs give you more control. You’re not shipping user data to a server. You’re processing it locally. That can be a huge trust boost for users.

But it also means you own the full experience. If the model fails, there’s no cloud to catch you, so testing, fallback plans, and good UX matter, especially when you’re promising AI features that feel seamless.

Choosing the best LLMs for mobile isn’t just about squeezing a model into an app. It’s about knowing your platform, your users, and your goals. Make decisions with all three in mind.

Curious how to actually build with these models? Check out our guide on Building AI-Powered Apps with On-Device LLMs for hands-on steps and practical tips.

Overview of Popular Lightweight LLMs

Not all models are built for mobile use, and that’s okay. What matters is knowing which ones are small, efficient, and actually work on real devices.

Let’s start with one of the most talked-about compact models right now.

Phi-3 (by Microsoft)

If you are looking for a small, sharp, and capable language model that actually works on mobile, then Phi-3 is worth a close look.

Microsoft built Phi-3 with a clear target: make it light enough to run on everyday devices, while still capable enough to be useful for real-world features.

This makes it a natural fit for LLMs in mobile apps, especially those that require speed, privacy, and offline access.

What Makes Phi-3 Mobile-Friendly?

- Compact Size: Phi-3 comes in small variants, such as Phi-3 mini (3.8B parameters) and Phi-3 small (7B), which are well suited to running directly on smartphones, tablets, and embedded devices. There is no need for huge storage or RAM.

- Fast, Local Performance: As an on-device LLM, it responds quickly without sending data over the Internet. This is ideal for tasks such as summarization, rephrasing, and chat features inside your app.

- Privacy by Design: Because you are running LLMs locally, user data stays on the device. No cloud round-trips. This is a big deal for apps handling sensitive information like health, finance, or personal notes.

Tech Details That Help Developers

- Supports Quantization: Phi-3 works well in quantized formats (like 8-bit), which means it uses less memory and runs faster. That’s key for efficient LLM for mobile usage.

- Easy Integration: Microsoft provides tools and formats compatible with mobile stacks, such as ONNX. This helps streamline LLM deployment, especially for Android and iOS apps.

- Open Source Access: You’re not locked into a proprietary setup. As an open source LLM, Phi-3 can be adapted, fine-tuned, or integrated however you need. The code and model weights are freely available.

Practical Use Cases for Phi-3 Mobile Apps

- Smart Text Input: Predictive typing, autocomplete, or grammar suggestions, all possible with Phi-3 mobile apps.

- Offline Chatbots: Want a chatbot that works even without Wi-Fi? Phi-3 enables that, using LLMs on mobile for fast, private responses.

- Note Summarization: Great for journaling or productivity apps that summarize content on the spot.

- Lightweight Code Helpers: For dev tools, Phi-3 can offer basic code suggestions or error explanations, right on the device.

Why Choose Phi-3?

- It balances size and capability better than many other models.

- It’s easy to experiment with, thanks to open tools and good documentation.

- It supports the trend toward embedding LLMs in apps where latency, privacy, and control really matter.

Phi-3 shows that the best LLMs for mobile don’t need to be huge. They just need to work, efficiently and reliably, inside your app’s environment.

Gemma (by Google DeepMind)

If you’ve ever wanted a smart language model that doesn’t rely on a constant internet connection, Gemma LLM might be what you’re looking for.

This model is part of Google’s push to bring local LLMs to more devices, without making you sacrifice speed or accuracy. It’s small, efficient, and meant for LLMs in mobile apps that just need to work.

Why Does Gemma Work So Well on Mobile?

Let’s keep it simple. Mobile devices have limits: battery, memory, and processing power. Gemma for mobile is built with that in mind.

It runs locally, responds quickly, and keeps user data on the device. So your app can be smart and private at the same time.

Whether you are building a note-taking app, an AI chatbot, or even a voice assistant, Gemma helps you offer features without pushing everything to the cloud.

Key Points Developers Like

- It’s Open: No paywalls. No hidden costs. Open models like Gemma give you freedom to experiment, adjust, and deploy as you need, within Google’s Gemma terms of use.

- Easy to Work With: Gemma plays well with mobile-friendly tools like ONNX and TensorFlow Lite. That makes LLM deployment much less painful. Whether you’re targeting Android or iOS, the setup won’t make you tear your hair out.

- Light on Resources: This is a lightweight LLM for apps. It’s meant to run on everyday devices, not just the latest flagship phones. You don’t need top-tier specs to make it work.

Real Ways to Use Gemma in Your App

- Smart Replies in Messaging Apps: Think context-aware suggestions that actually make sense.

- Offline Text Summarizers: Perfect for users who want to keep things local, especially in travel apps or research apps.

- Voice Input Features: Real-time handling of transcribed speech and voice commands, paired with an on-device speech recognizer, with no server involved.

- Personal AI Tools: Apps that act like a smart companion, summarizing, organizing, helping, all without sending data to the cloud.

Why Gemma Might Be Right for You

- It’s one of the most practical LLMs on mobile today.

- You can keep user data safe by running LLMs locally.

- It helps you build responsive, helpful AI features that users can trust.

- It fits well in apps that need quick responses without big infrastructure.

In short, Gemma LLM is small enough to run easily, capable enough to be useful, and open enough to build real features without extra obstacles for developers.

If you are thinking about embedding LLMs in apps, it can be one of the best LLMs for mobile.

Other Open Source LLMs Worth Considering

Not every project needs Phi-3 or Gemma. Sometimes, the right choice comes down to size, compatibility, or just personal preference.

Luckily, there are other open source LLM options that are small enough to work well on mobile, and still flexible enough to build real features.

Here are a few models people are using today in LLM mobile apps.

Mistral (Small but Powerful)

Mistral is known for compact LLMs that pack a punch. Its smaller versions, like Mistral 7B, can be trimmed down further using quantization to run on devices.

- Works well for running LLMs locally with decent speed.

- Flexible for LLM deployment in both mobile and edge environments.

- Not as small as Phi-3, but still workable on higher-end devices or with good optimization.

For apps that need more power but still want on-device LLM support, Mistral is a solid option.

LLaMA (Lightweight When Tuned Right)

Meta’s LLaMA models are popular, and while the standard versions are too large for phones, people have created open source mobile LLM variants.

- Models like LLaMA 2 7B (quantized) can be squeezed onto certain mobile devices.

- Ideal for experimenting with embedding LLMs in apps that need a bit more brainpower.

- Great for developers who enjoy tweaking and customizing their models.

It takes more effort, but if you want to explore efficient LLM for mobile, some LLaMA variants can get the job done.

TinyLlama (Made for Mobile)

As the name suggests, TinyLlama is built for tight environments.

- Super small size (about 1.1B parameters), a good fit for mobile AI.

- Fast startup, low memory use, and minimal impact on battery life.

- Excellent for simple tasks in mobile LLMs, like keyword tagging, basic chat, or content suggestions.

This model is especially useful if your app just needs a lightweight LLM for apps, not something big and resource-heavy.

LLaVA (For Vision + Language Apps)

If your app mixes text and images, like scanning, captioning, or AR support, LLaVA is a model family to know.

- Combines local LLMs with visual input processing.

- Great for on-device features like object detection plus natural language responses.

- Smaller builds are available for mobile AI models, especially with quantized versions.

Apps using LLaVA often fall under creative tools, smart cameras, or even real-time guides.

GPTQ Models (Highly Optimized Variants)

GPTQ isn’t a model itself. It’s a post-training quantization method that compresses large models like LLaMA or Mistral into smaller, usable versions. Think of it as shrinking a model without losing too much capability.

- Ideal for running LLMs locally where space and memory are limited.

- Useful for developers comfortable with tinkering and trying different LLM deployment setups.

- Helps make big models practical as tiny LLMs for mobile use.

If you’re aiming for efficient LLM for mobile and need more flexibility, GPTQ-optimized models can be a smart move.

FasterTransformer (Built for Speed)

Not a model, but worth mentioning: FasterTransformer is NVIDIA’s library for accelerating transformer inference on NVIDIA GPUs.

It works with GPT-style models and helps improve response times. Because it targets GPUs rather than phone chipsets, it fits the server side of a hybrid setup more than pure on-device execution.

- Useful when your app falls back to a backend for features that need real-time interaction.

- Often used for demanding tasks like translation or code suggestion.

Why These Models Matter

All these options support LLMs in mobile apps without depending on a server or the cloud. That means more control, better privacy, and quicker response times for users.

Whether you are creating a tool for personal productivity, language learning, or AI chat, these models help you create smart features without overcomplicating the tech side.

And since they are open source LLMs, you are free to modify, scale, or improve them based on the needs of your specific app.

Deployment Strategies: Getting LLMs Running in Mobile Apps

Once you choose the right model, the next question is: how do you actually run it inside your app? Good news: you have options, and you do not need a data center or supercomputer.

Here’s how to think about LLM deployment for real-world mobile use.

- Choose the Right Format for the Model

Most open source LLMs need a little conversion before they’re mobile-ready.

- ONNX: Works across platforms and is supported by many mobile AI models. Great for flexibility.

- TensorFlow Lite: Optimized for Android, and now increasingly iOS. Ideal for efficient LLM for mobile deployment.

- CoreML: Best for iOS apps. Converts models into a format that runs fast on Apple devices.

Curious about frameworks? Check out Best Mobile AI Frameworks in 2025: From ONNX to CoreML and TensorFlow Lite for a detailed comparison.

- Optimize for Size and Speed

Nobody wants their app to eat battery or lag. Here’s how to avoid that:

- Quantization: Reduces the size of your local LLMs without losing too much accuracy.

- Pruning: Cuts out unused parts of the model to make it lighter.

- Edge Compilers and Kernel Libraries: Tools like TVM (a model compiler) or XNNPACK (a library of optimized operators) help improve performance for on-device LLM use.

Pro tip: Small tweaks can make a big difference, especially for tiny LLMs or lightweight LLMs for apps.
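To show what quantization and pruning actually do to the numbers, here is a toy sketch in plain Python. It uses symmetric per-tensor int8 quantization and simple magnitude pruning, which are only two of several possible schemes; real toolchains typically work per channel or per group and operate on tensors, not lists. The function names and sample weights are made up for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8 codes plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float weights."""
    return [c * scale for c in codes]


def prune_by_magnitude(weights, fraction):
    """Zero out the smallest-magnitude `fraction` of the weights."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    smallest = set(order[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


weights = [0.42, -1.27, 0.03, 0.88, -0.05, 0.61]  # made-up sample values

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
pruned = prune_by_magnitude(weights, 0.5)

print(codes)
print(f"largest round-trip error: {max_error:.5f}")
print(pruned)
```

Each weight now needs one byte instead of four, and the round-trip error stays within half a quantization step. That bounded loss is the "without losing too much accuracy" trade-off in practice.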

- Pick a Runtime Engine

This is the part that actually runs the model on the phone.

- ONNX Runtime Mobile: Works on Android and iOS, and supports several LLMs in mobile apps.

- TensorFlow Lite Interpreter: Slim and fast, especially on Android.

- CoreML Runtime: Built into iOS and optimized for mobile LLMs on iPhones and iPads.

Choosing the right engine ensures your LLM mobile apps run smoothly and don’t drain the battery.

- Keep Data Local Whenever Possible

One reason many developers use local LLMs is privacy. Running everything on the device means user data stays put.

- No internet needed for processing.

- Faster response times.

- Easier compliance with privacy laws.

Running LLMs locally also helps apps work in places with weak or no network: travel, fieldwork, rural areas, and so on.

- Test on Multiple Devices

Not every phone is created equal. A mobile AI model might run fine on a new device but struggle on older hardware.

- Test for performance, memory use, and battery impact.

- Optimize settings or fallback behaviors based on device capabilities.

- Offer options in-app for users to enable or disable certain LLM features.
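One way to act on device differences is to pick a model variant at startup. The sketch below is a hedged illustration, not a recommendation: the variant names, the RAM thresholds, and the `pick_model` function are all hypothetical, and your app would read RAM and battery state from the platform’s own APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    min_ram_gb: float  # hypothetical minimum total RAM for this variant


# Hypothetical catalogue, ordered from most to least capable.
OPTIONS = [
    ModelOption("large-4bit", 8.0),
    ModelOption("small-4bit", 4.0),
]


def pick_model(total_ram_gb, battery_saver, user_enabled=True):
    """Return the best variant the device can handle, or None to disable the feature."""
    if not user_enabled:
        return None  # the user switched the LLM feature off in settings
    # In battery-saver mode, skip the heaviest variant.
    candidates = OPTIONS[1:] if battery_saver else OPTIONS
    for option in candidates:
        if total_ram_gb >= option.min_ram_gb:
            return option
    return None  # too little RAM: hide the feature or use a non-LLM fallback


print(pick_model(12, battery_saver=False))
print(pick_model(12, battery_saver=True))
print(pick_model(3, battery_saver=False))
```

Returning `None` instead of raising keeps the decision in the UI layer, which can hide the feature or offer a simpler alternative.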

Real-World Examples & Use Cases

So, what do LLMs in mobile apps really look like in the wild?

It’s not all chatbots and gimmicks.

Developers are finding smart, practical ways to add on-device LLM features that users really benefit from, without needing to send data to the cloud.

Here are some examples that show how powerful and flexible local LLMs can be.

- Smart Note-Taking and Summarization

Apps such as voice journals, meeting assistants, and to-do managers are now using local LLMs to clean up messy input, summarize it, or even auto-generate titles and tags.

- Tiny LLMs make it possible to do this right on the phone.

- Embedding LLMs in apps like this helps people stay organized, even offline.

- Developers often use models like Phi-3 or TinyLlama for these tasks due to their compact size and speed.

- Offline Chatbots and Assistants

Need quick answers, reminders, or helpful replies, without relying on Wi-Fi or mobile data?

- Running LLMs locally makes this possible, especially in areas with poor connectivity.

- Language learning apps, travel tools, and wellness apps are using this approach to offer AI chat features without cloud costs.

- With the right open source LLM, even basic devices can offer fast, useful conversations.

- Code Help for Developers (Yes, on Mobile!)

Some mobile IDEs and developer tools now offer on-device LLM support for:

- Code completions

- Syntax suggestions

- Error explanations

This is especially helpful for students or devs working on the go. Using a lightweight LLM for apps like this keeps things responsive while preserving privacy.

- Real-Time Text Suggestions in Messaging Apps

From grammar fixes to tone suggestions, mobile LLMs are making typing easier and smarter.

- Apps are using efficient LLMs for mobile to help users express themselves more clearly.

- Since everything happens locally, suggestions pop up instantly, no awkward delays.

- Voice Assistants with Zero Cloud Dependency

Many users are uncomfortable sharing voice data with cloud services. Developers are solving that by:

- Using open source mobile LLM tools to process voice commands on the device.

- Translating speech to text, generating replies, and triggering actions, locally.

- This setup also supports mobile AI models focused on accessibility and personal productivity.

- Context-Aware UI Features

Some modern apps are using on-device LLMs not just for content, but for adapting the interface based on how users interact.

- Dynamic text suggestions

- Smart autofill

- Adaptive prompts or action buttons

These features use LLMs on mobile to read patterns and respond in helpful, natural ways.

From clever messaging to offline voice assistants, use cases for LLMs in mobile apps continue to grow.

With the rise of open source LLMs and better tools for LLM deployment, even small teams can build apps that feel fast, personal, and intelligent, without massive infrastructure requirements.

Challenges and How to Overcome Them

| Challenge | What It Means | How to Overcome It |
|---|---|---|
| Memory & Storage Limits | Large models use too much RAM or device space. | Use quantized or compressed models (e.g., 4-bit); choose lightweight LLMs for apps; clean up model files after use |
| Slow Load Times | Models take time to initialize, causing delays. | Use lazy loading; pre-load in the background; choose optimized runtimes like ONNX or TensorFlow Lite |
| Battery Drain | Heavy computation reduces battery life quickly. | Optimize prompts and reduce processing cycles; benchmark energy usage; stick to efficient LLMs for mobile |
| Device Performance Variability | Not all devices handle LLMs equally well. | Detect device specs and adapt; offer feature toggles for low-end users; test across a variety of phones and tablets |
| UX and Trust Issues | If LLM responses are slow or inconsistent, users may lose trust. | Provide loading indicators; cache common results; seamlessly embed LLMs in apps with consistent tone and speed |

Don’t try to do too much. The best LLM mobile apps do one or two things really well. Start small, keep it fast, and build from there.
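Two of the fixes in the table, lazy loading with background pre-loading and caching common results, are easy to sketch. The example below is a minimal illustration under stated assumptions: `LazyModel`, `fake_loader`, and `cached_reply` are hypothetical names, and the loader is a stand-in for whatever actually reads your model from disk.

```python
import threading
from functools import lru_cache


class LazyModel:
    """Defers an expensive load until first use; can be warmed up in the background."""

    def __init__(self, loader):
        self._loader = loader
        self._model = None
        self._lock = threading.Lock()
        self.load_count = 0  # exposed only to show that loading happens once

    def get(self):
        # The lock guarantees a single load even if several threads ask at once.
        with self._lock:
            if self._model is None:
                self._model = self._loader()
                self.load_count += 1
            return self._model

    def warm_up(self):
        """Start loading on a background thread so the UI is not blocked."""
        thread = threading.Thread(target=self.get, daemon=True)
        thread.start()
        return thread


def fake_loader():
    # Stand-in for reading model weights from disk and building a session.
    return lambda prompt: f"summary of: {prompt}"


model = LazyModel(fake_loader)


@lru_cache(maxsize=128)
def cached_reply(prompt: str) -> str:
    """Serve repeated prompts from memory instead of re-running the model."""
    return model.get()(prompt)
```

A typical flow would call `model.warm_up()` right after app launch and `cached_reply(...)` when the user triggers the feature; the first call then finds the model already loaded, and repeats are served from the cache.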

How Boolean Inc. Helps Us Build Better Mobile LLM Apps

It is not easy to create smooth, responsive, and privacy-friendly LLMs in mobile apps.

From managing memory and latency to tuning the model for real-world devices, there is a lot to handle, especially if you are aiming to run without relying on the cloud.

That’s where Boolean Inc. comes in.

They don’t just offer tools, they offer real, practical support for getting LLMs in mobile apps working the way they should.

Whether it’s on-device LLM deployment, model compression, or running open source LLMs efficiently on phones and tablets, their focus is always on usability and speed.

Here’s what they bring to the table:

- Optimized model runtimes built for edge hardware

- Support for tiny LLMs and efficient LLMs for mobile

- Simple tooling to help developers handle local LLMs without headaches

- Deep integration support for embedding LLMs directly into existing mobile workflows

Thanks to Boolean, we can focus more on product and UX, and less on wrangling deployment details.

If your team is thinking about running LLMs locally, especially for real-time features or low-connectivity environments, Boolean Inc. is a partner worth knowing.

Conclusion

Running LLMs in mobile apps is not just a trend; it is quickly becoming the new normal.

Users are looking for greater privacy, lower latency, and less dependence on the cloud. With advancements in local LLMs, tools like Phi-3 and Gemma, and support from frameworks and teams like Boolean Inc., the pieces are finally coming together.

From LLM deployment to real-world use cases, we’re seeing lightweight models perform surprisingly well, even on mid-range phones.

And as more open source LLMs and tiny LLMs hit the scene, the bar for building smart, efficient, and responsive mobile AI apps keeps rising.

What matters most? Start small. Focus on one problem your app can solve well with AI. Use the right tools, measure on real devices, and keep the experience smooth for users.

And if you’re still exploring your options, we’ve got you covered.

Mobile AI is no longer “coming”; it’s already here. Now it’s just about building wisely.

FAQs

- Can LLMs really run on mobile devices?

Yes, especially the newer lightweight LLMs for apps like Phi-3 and Gemma. With the right tools, even mid-range phones can handle them.

- What’s the benefit of running LLMs locally instead of in the cloud?

You get faster response times, better privacy, and fewer connectivity issues. Running LLMs locally also means lower long-term costs.

- Which models are best for mobile AI apps?

Models like Phi-3, Gemma, and other open source LLMs are great starting points. They’re small, fast, and built with mobile in mind.

- What tools can help with mobile LLM deployment?

Look into TensorFlow Lite, ONNX Runtime Mobile, and support from companies like Boolean Inc. for smooth LLM deployment.

- Do I need a powerful phone to use LLMs on-device?

Not necessarily. Many mobile LLMs are optimized for performance. You can scale features based on device capability.