Introduction

On-device RAG is changing how mobile apps work.

Imagine asking your phone a question and getting a smart, helpful answer, even without internet.

That’s the promise of on-device RAG (Retrieval-Augmented Generation) running right on your smartphone. It brings together on-device AI, local LLMs, and fast mobile vector search to make your apps smarter, faster, and more private.

Instead of relying on the cloud for every single query, apps can now retrieve and generate responses right from the device.

Yes, no internet required, no data sent off to some distant server. It’s faster, private, and more reliable in places where connectivity is spotty.

And this isn’t just a passing trend.

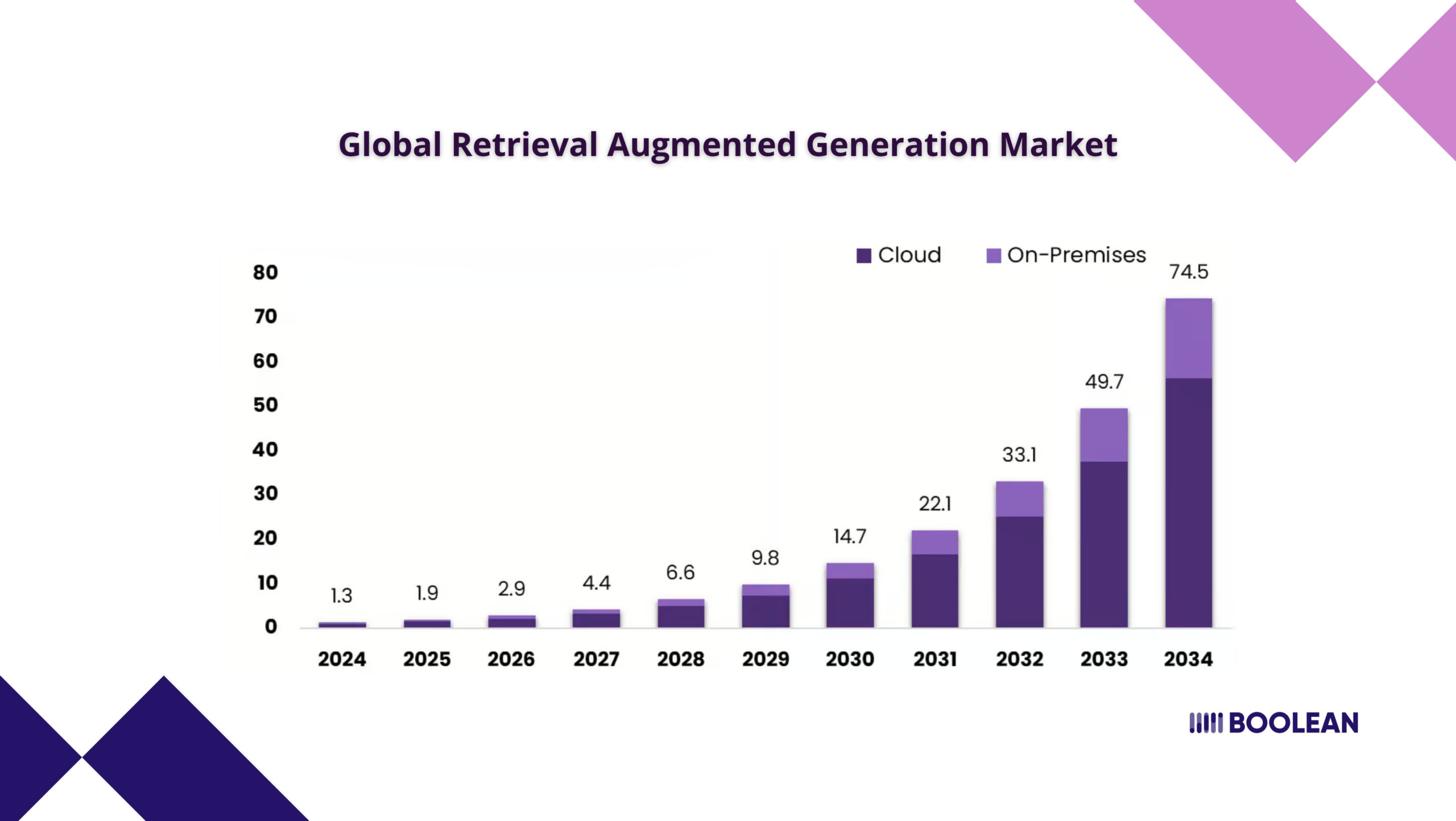

The Global Retrieval Augmented Generation Market is projected to grow from $1.3 billion in 2024 to about $74.5 billion by 2034, a compound annual growth rate of roughly 49.9%. That’s massive. (Market.us)

Users and teams everywhere are already exploring mobile RAG, edge RAG, and on-device AI to stay ahead.

If you’re building mobile apps and want to keep things local, fast, and secure, it’s time to look at on-device LLMs, mobile vector search, and offline RAG implementations.

This guide will walk you through it, step by step.

Whether you’re building a mobile RAG chatbot, a field assistant, or just experimenting with on-device vector search, you’ll find helpful ideas to get started and make it work.

Let’s make mobile smarter, without depending on the cloud for everything.

What is On Device RAG?

RAG stands for Retrieval Augmented Generation.

In simple terms, it is a method where a language model first retrieves relevant information from a knowledge base, then uses that information to generate a response.

On-device RAG means that your mobile app can answer questions or generate content using information stored on the device.

It combines on-device AI, an on-device LLM, and local data to create a smart, responsive experience. Instead of sending your data to the cloud, everything happens on your phone or tablet.

Now, imagine doing all of that directly on a mobile device. That’s on device RAG.

Instead of sending queries to cloud servers, an app can perform local retrieval and generation right on the phone. That means lower latency, stronger data privacy, and offline capability.

Whether you’re building a Mobile RAG chatbot, a field-support RAG app, or a Mobile app RAG tool for internal use, the process happens without needing a live connection.

To make this possible, you combine three things:

- A lightweight On-device LLM (language model)

- A Mobile vector database to store embeddings

- An On-device retrieval system for fetching relevant chunks of data

It’s not just for AI chatbots either.

Use it for Offline RAG in educational tools, Edge RAG in smart cameras, or Local RAG in healthcare apps.

All of this helps create more responsive and privacy-aware RAG implementations.

If you’re wondering how this ties in with AI infrastructure on phones, you might want to check out our post on Building AI-Powered Apps with On-Device LLMs.

As On-device AI becomes more practical, we’re also seeing growth in Mobile vector search solutions and custom Mobile RAG architectures tailored for smartphones and tablets.

Whether it’s Android or iOS, on-device LLMs are opening new doors for responsive and secure AI applications.

Why Implement RAG On Device?

Most AI apps still depend on the cloud. But that’s changing, and for good reason.

If your app needs to answer questions, summarize content, or generate helpful responses from custom data, relying on external servers may not cut it anymore. Privacy, speed, cost, and offline functionality all start to matter more.

Running Retrieval Augmented Generation directly on mobile means users don’t need to be constantly online. It works in tunnels, hospitals, remote locations, or anywhere a signal drops.

For critical tools like a RAG mobile assistant or a RAG app for frontline workers, that can make a real difference.

Speed is another big reason. On-device retrieval and mobile vector search mean answers come fast, without waiting for a server.

Edge RAG and a mobile-first RAG architecture reduce delays, making your app feel smooth and responsive. Even in places with poor or no internet, offline RAG keeps your app working.

Let’s break it down. To implement vector search and generation locally, you combine:

- A Mobile vector database.

- A lightweight on-device LLM.

- An On-device retrieval setup using compact embeddings.

- A simple but efficient RAG architecture for mobile apps.

This combo gives you a mobile RAG implementation that’s fast, reliable, and functional even without internet.

Developers are also turning to edge RAG frameworks to optimize for battery life and performance across different mobile chips.

Whether you’re building a RAG mobile architecture for document search, offline chat, or guided support apps, local AI makes things simpler and safer.

Here are some real-world examples:

- A Mobile app RAG for medical protocols in remote clinics.

- A Secure on device RAG implementation for legal reference apps.

- A Lightweight RAG for smartphones that provides offline product support.

- A student study tool built with offline RAG patterns.

Need a place to start? Build a simple on-device RAG prototype step by step, using a small model and a local document store. Tools are already available to make on-device vector search easier to implement.

This isn’t just a performance upgrade. It’s a shift in how we think about privacy-first, intelligent applications, built for real-world use.

With the right setup, on-device retrieval and local generation become not only possible but practical.

Whether you’re looking for an on-device RAG guide, building your first local RAG mobile app, or planning a production-level offline RAG experience, the tech is ready, and getting better fast.

So if you’re wondering how to implement on device RAG, you’re in the right place.

Core Components of On Device RAG

Building on device RAG may seem technical at first, but the parts themselves are quite simple.

Once you understand how they work together, they become much easier to put into practice.

Whether you are building a mobile RAG assistant or a smart offline help tool, these are the main pieces you will work with.

- On Device LLM

You’ll need a compact yet capable on-device LLM: a small language model that can run smoothly on mobile hardware.

These models generate responses based on the retrieved information.

Developers often use 4-bit or 8-bit quantized models to save storage and memory without sacrificing too much accuracy.
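To see why quantization matters, a back-of-the-envelope estimate helps: weight memory is roughly parameter count × bits per weight ÷ 8 bytes. The sketch below applies that formula to a 2.7B-parameter model such as Phi-2. It is a rough assumption, not a measurement: it counts weights only and ignores the KV cache, activations, runtime overhead, and the per-block scale factors that real 4-bit and 8-bit formats store.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Rough estimate only: ignores the KV cache, activations, runtime overhead,
    and the extra scale/metadata that real quantized formats add.
    """
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 2.7e9  # e.g. a 2.7B-parameter model such as Phi-2
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: ~{weight_memory_gb(params, bits):.2f} GB")
```

For 2.7B parameters this works out to about 5.4 GB at 16-bit, 2.7 GB at 8-bit, and 1.35 GB at 4-bit, which is why 4-bit models are often the only realistic option on phones with limited RAM.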

- Mobile Vector Database

Before the model can generate answers, it needs relevant context. That’s where a Mobile vector database comes in.

You store preprocessed data (like documents, FAQs, or user guides) as embeddings. When a user asks something, the app compares their query to those stored vectors to find the most relevant snippets.
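A minimal sketch of that comparison, assuming cosine similarity as the metric (a common choice, though your embedding model's documentation may recommend another). This brute-force version scores every stored vector; it is simple and exact, and larger collections typically switch to an approximate index such as HNSW.

```python
from __future__ import annotations

import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float], stored: Sequence[Sequence[float]], k: int = 3) -> list[tuple[int, float]]:
    """Brute-force search: score every stored vector and return the k best (index, score) pairs."""
    scored = [(i, cosine_similarity(query, vec)) for i, vec in enumerate(stored)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    stored = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]  # toy 2-D "embeddings"
    print([i for i, _ in top_k([1.0, 0.1], stored, k=2)])  # [0, 2]
```

Real embeddings have hundreds of dimensions rather than two, but the logic is the same: the indices returned point back to the text chunks you pass to the model.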

- On-Device Retrieval

The next step is on-device retrieval. It fetches the right pieces of information from your local database.

This keeps everything fast and private. You can use tools like Faiss (mobile build), Qdrant (lightweight mode), or even SQLite combined with ANN search techniques.
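The SQLite route can start as simply as storing each vector as a BLOB next to its text. The sketch below uses Python's built-in `sqlite3` and `array` modules to show the idea; on a phone you would use the platform's SQLite bindings instead. Note that it performs an exact, brute-force scan rather than ANN search, and the table and function names (`chunks`, `add_chunk`, `search`) are hypothetical.

```python
from __future__ import annotations

import math
import sqlite3
from array import array


def to_blob(vec: list[float]) -> bytes:
    # Store each vector as packed 32-bit floats (4 bytes per dimension).
    return array("f", vec).tobytes()


def from_blob(blob: bytes) -> array:
    vec = array("f")
    vec.frombytes(blob)
    return vec


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS chunks ("
        "id INTEGER PRIMARY KEY, text TEXT NOT NULL, embedding BLOB NOT NULL)"
    )
    return conn


def add_chunk(conn: sqlite3.Connection, text: str, vec: list[float]) -> None:
    conn.execute("INSERT INTO chunks (text, embedding) VALUES (?, ?)", (text, to_blob(vec)))
    conn.commit()


def search(conn: sqlite3.Connection, query: list[float], k: int = 3) -> list[tuple[str, float]]:
    """Exact (brute-force) cosine search over every stored row; not an ANN index."""
    q_norm = math.sqrt(sum(x * x for x in query)) or 1.0
    scored = []
    for text, blob in conn.execute("SELECT text, embedding FROM chunks"):
        vec = from_blob(blob)
        v_norm = math.sqrt(sum(x * x for x in vec)) or 1.0
        score = sum(a * b for a, b in zip(query, vec)) / (q_norm * v_norm)
        scored.append((text, score))
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```

A full scan like this keeps the code tiny and portable; once the table grows, an ANN extension or a dedicated engine such as a mobile Faiss build becomes worth the extra setup.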

- Local Retrieval + Generation Flow

This is the heart of local RAG. The query is embedded on-device, relevant context is pulled from the vector store, and the on-device LLM uses that context to generate a helpful response.

This setup enables RAG app functionality even when the device is offline.

- Lightweight and Flexible Architecture

To handle different devices and performance levels, many developers lean toward a composable architecture.

This makes it easier to swap components (like embedding models or vector engines) without breaking the entire system. It also makes debugging less of a headache.
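One way to express that composability, sketched with Python `Protocol` classes. The interface and class names (`Embedder`, `VectorStore`, `Generator`, `RagPipeline`) are hypothetical; real implementations would wrap your ONNX/TFLite embedder, your vector store, and your LLM runtime. Because the pipeline depends only on these three interfaces, swapping one vector engine or model for another means writing a new class rather than rewriting the flow.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol, Sequence


class Embedder(Protocol):
    def embed(self, text: str) -> list[float]: ...


class VectorStore(Protocol):
    def search(self, query: Sequence[float], k: int) -> list[str]: ...


class Generator(Protocol):
    def generate(self, prompt: str) -> str: ...


@dataclass
class RagPipeline:
    """Local retrieve-then-generate flow that depends only on the three interfaces above."""

    embedder: Embedder
    store: VectorStore
    generator: Generator
    k: int = 3

    def answer(self, question: str) -> str:
        query_vec = self.embedder.embed(question)      # 1. embed the query on-device
        chunks = self.store.search(query_vec, self.k)  # 2. retrieve the top-k chunks
        context = "\n".join(f"- {c}" for c in chunks)
        prompt = f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
        return self.generator.generate(prompt)         # 3. generate with the local LLM


if __name__ == "__main__":
    # Trivial stand-ins, just to show the wiring; swap in real components later.
    class LengthEmbedder:
        def embed(self, text: str) -> list[float]:
            return [float(len(text))]

    class FixedStore:
        def search(self, query: Sequence[float], k: int) -> list[str]:
            return ["chunk A", "chunk B", "chunk C"][:k]

    class EchoGenerator:
        def generate(self, prompt: str) -> str:
            return prompt  # a real generator would call the on-device LLM here

    print(RagPipeline(LengthEmbedder(), FixedStore(), EchoGenerator(), k=2).answer("Why?"))
```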

- Optional: Embedding Generation

Some apps create embeddings on the device using tiny transformer models. Others precompute them and bundle them with the app.

Both approaches work; the right choice depends on the use case. Precomputed embeddings save battery, while real-time generation offers more flexibility.
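The two approaches can also be combined. A minimal sketch, assuming document chunks ship with precomputed vectors while any other text (such as queries or chat history) is embedded on demand and cached. `EmbeddingProvider` is a hypothetical name, and `encode` stands in for whatever on-device encoder you use.

```python
from __future__ import annotations

from typing import Callable


class EmbeddingProvider:
    """Serve bundled (precomputed) embeddings first; embed anything else on-device and cache it."""

    def __init__(self, precomputed: dict[str, list[float]], encode: Callable[[str], list[float]]) -> None:
        self._precomputed = precomputed  # e.g. loaded from a file shipped with the app
        self._encode = encode            # hypothetical wrapper around an on-device encoder
        self._cache: dict[str, list[float]] = {}
        self.encoder_calls = 0           # how often the (expensive) encoder actually ran

    def get(self, text: str) -> list[float]:
        if text in self._precomputed:
            return self._precomputed[text]
        if text not in self._cache:
            self.encoder_calls += 1
            self._cache[text] = self._encode(text)
        return self._cache[text]
```

This cache grows without limit; in a real app you would cap its size or clear it when memory is tight.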

- UI Integration

All this tech works best when it’s invisible.

Build interfaces that feel natural and intuitive, especially if you’re aiming for a multimodal UI in mobile apps. That could mean voice, text, or even document upload inputs.

Simple front-ends can dramatically improve how users engage with your mobile app RAG tool.

- Real-Time Responsiveness

Keeping things fast is crucial. That’s why many developers follow real-time edge AI design principles: limiting memory use, preloading data smartly, and keeping vector searches under a few hundred milliseconds.

So if you are building a mobile RAG system or starting your first mobile RAG implementation, keep these components in mind.

They’re the backbone of everything, from Offline RAG chat tools to complex RAG architecture for mobile apps.

Once these pieces are in place, the rest, like model fine-tuning, UI design, or usage limits, becomes much easier to handle.

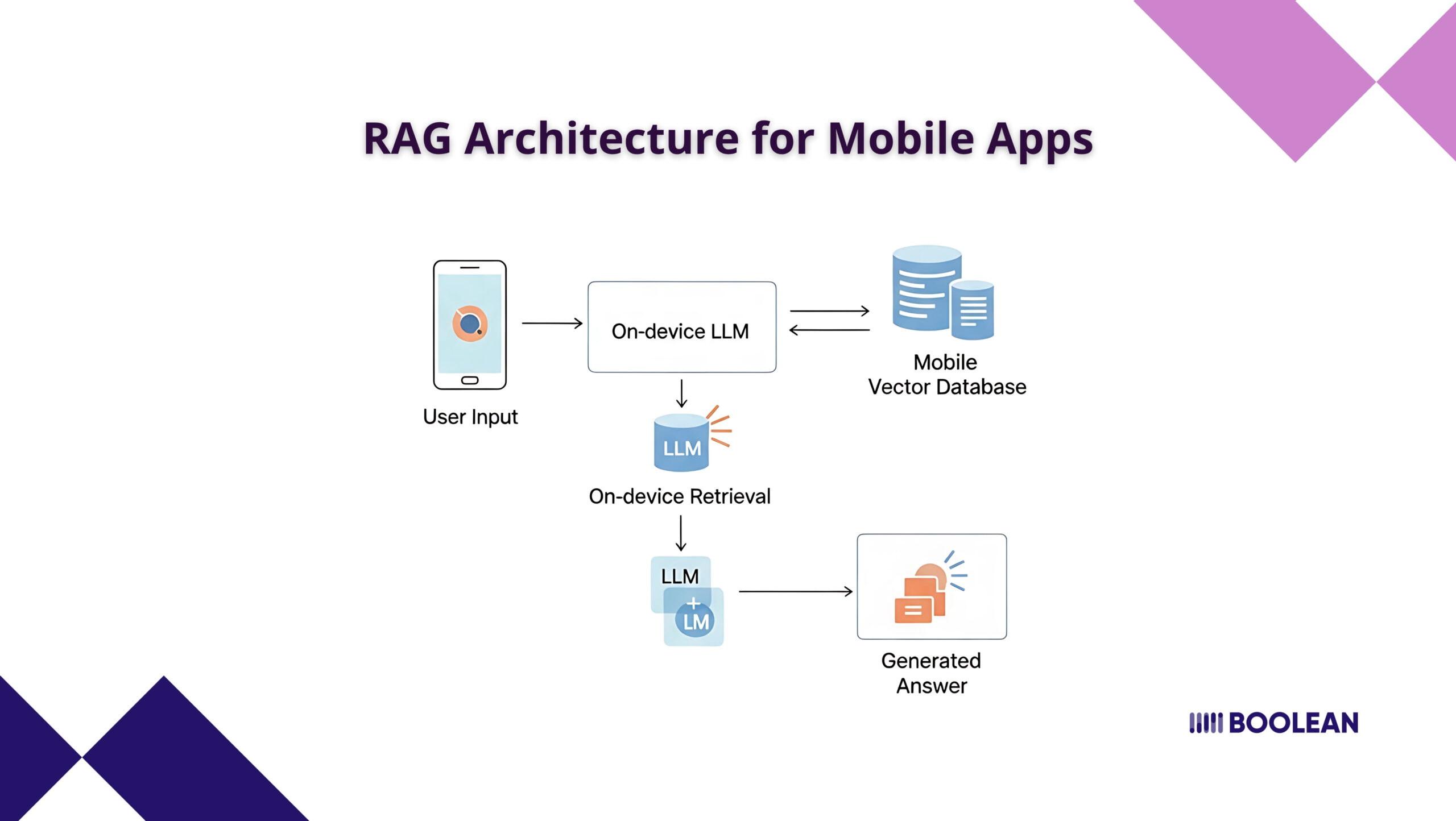

RAG Architecture for Mobile Apps

Getting the architecture right is key for a smooth on device RAG experience.

Let’s break down how the main parts work together in a mobile app.

At the center, you have the on-device LLM. This is your app’s brain, ready to generate answers and understand user questions.

Next, you need a mobile vector database. This stores your data as vectors, making it easy to search and retrieve relevant information quickly.

When a user asks something, the app uses on-device retrieval and mobile vector search to find the best matches from the local database.

The app then passes this retrieved information, together with the question, to the on-device LLM, which generates a helpful response. This is the core of the RAG mobile architecture and the edge RAG framework.

Here’s a simple way to picture it:

- User Input: The user asks a question.

- Mobile Vector Database: Stores and organizes your data as vectors.

- On-device Retrieval: Embeds the question and finds the most relevant data in the database.

- On-device LLM: Reads the question together with the retrieved data and generates the answer.

- Generated Answer: The app delivers a helpful response, all on the device.

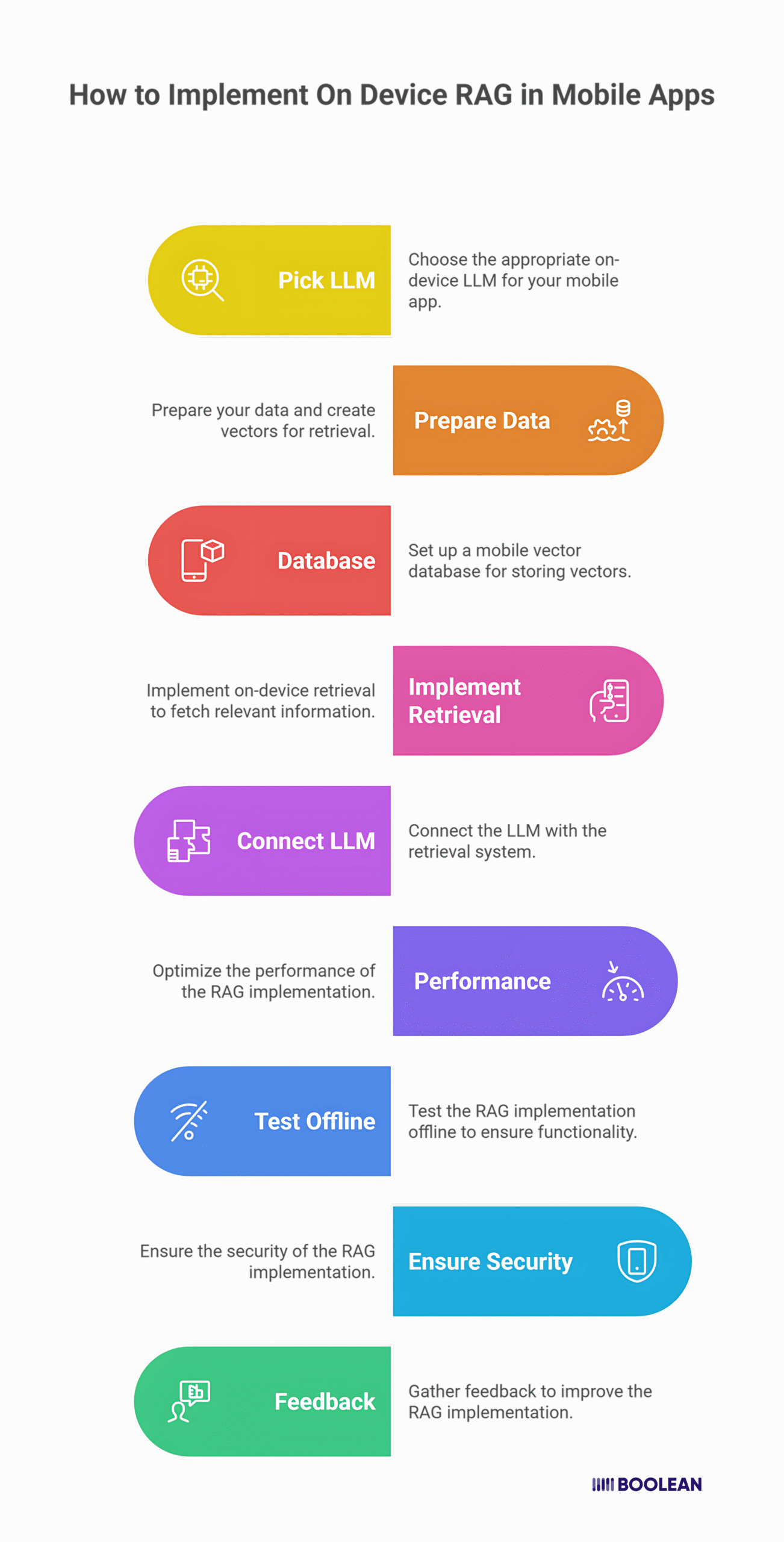

Step-by-Step Guide: How to Implement On Device RAG in Mobile Apps

Building a RAG app on mobile isn’t trivial, but breaking it down into small steps makes it much more manageable.

Here’s how you can do it, even if you’re new to on device AI or RAG implementation.

Step 1: Pick the Right On-Device LLM

Start by thinking about your app’s needs. What do you really want?

Do you want quick answers, or do you need more detailed responses?

Choose an on-device LLM that matches your goals. Smaller models are faster and easier on the battery, while larger ones can handle more complex questions.

Make sure the model you pick is supported on your target devices. If you’re unsure, try a few options and see which one works best for your RAG app.

Step 2: Prepare Your Data and Create Vectors

Think about what information your users will need. This could be product manuals, help articles, or even personal notes. Clean up your data so it’s easy to work with.

Next, split your content into small chunks and use an embedding model to turn each chunk into a vector. These vectors are like digital fingerprints that help your app find the right answers fast.

Don’t worry if this sounds new; many tools make this step simple, even for beginners.
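Chunking is usually the first part of this step. A minimal sketch, assuming a naive regex sentence splitter (real documents may need smarter handling of abbreviations, lists, and tables) and chunks of a few sentences with a one-sentence overlap, so that context is not cut off at a chunk boundary. The function names are hypothetical.

```python
from __future__ import annotations

import re


def split_sentences(text: str) -> list[str]:
    # Naive split on ".", "!" or "?" followed by whitespace; good enough for a sketch.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def chunk_text(text: str, sentences_per_chunk: int = 3, overlap: int = 1) -> list[str]:
    """Group sentences into chunks; consecutive chunks share `overlap` sentences."""
    if not 0 <= overlap < sentences_per_chunk:
        raise ValueError("overlap must be >= 0 and smaller than sentences_per_chunk")
    sentences = split_sentences(text)
    step = sentences_per_chunk - overlap
    chunks = []
    for start in range(0, len(sentences), step):
        chunks.append(" ".join(sentences[start:start + sentences_per_chunk]))
        if start + sentences_per_chunk >= len(sentences):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Each chunk is then passed to your embedding model (MiniLM, E5-small, or similar) to produce its vector.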

Step 3: Set Up a Mobile Vector Database

Now, you need a place to store your vectors. A mobile vector database is designed for this job. It keeps your data organized and easy to search. Look for a database that’s lightweight and works well on smartphones.

Some options are open-source and easy to set up. This step is the backbone of local RAG and mobile RAG implementation.

Step 4: Implement On-device Retrieval

This is where your app starts to feel smart. On-device retrieval lets your app search the vector database and find the most relevant information in well under a second. You can use existing libraries or write your own simple search function.

The goal is to make sure users get helpful results, even if they’re offline. Test this step with real questions to see how well it works.
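One lightweight way to test with real questions is to write down a handful of them, note which chunk should answer each, and measure how often that chunk appears in the top-k results (often called hit rate or recall@k). A sketch, where `retrieve` is a hypothetical function that returns chunk IDs for a question:

```python
from __future__ import annotations

from typing import Callable, Sequence


def hit_rate_at_k(
    labeled: Sequence[tuple[str, str]],
    retrieve: Callable[[str, int], list[str]],
    k: int = 3,
) -> float:
    """Fraction of (question, expected_chunk_id) pairs whose expected chunk appears in the top-k."""
    if not labeled:
        return 0.0
    hits = sum(1 for question, expected in labeled if expected in retrieve(question, k))
    return hits / len(labeled)
```

Re-running the same labeled set after changing your chunking, embedding model, or vector engine gives a quick, repeatable signal of whether retrieval got better or worse.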

Step 5: Connect the LLM with Retrieval for Generation

Now, bring everything together. When a user asks a question, your app should first use on-device retrieval to find the best data.

Then, pass this data to the on-device LLM. The LLM will use the information to generate a clear, helpful answer. This is the heart of RAG mobile architecture. It’s what makes your app feel personal and responsive.

Step 6: Optimize for Performance and Battery

Mobile users expect apps to be fast and not to drain their battery, so test your app on a variety of devices, including older phones.

Look for ways to make your app lighter, such as using small models or reducing how much data you process at once.

Lightweight RAG for smartphones is all about making sure everyone can use your app comfortably.

Step 7: Test Offline and Edge Scenarios

One of the best things about on device RAG is that it works without internet. Try using your app in airplane mode or places with no signal.

Make sure all features still work. This is especially important for users who travel or work in remote areas, where offline RAG features can be a real lifesaver.

Step 8: Keep Security and Privacy in Mind

Your users trust you with their data. Always use secure on device RAG implementation practices. Store sensitive information safely and never share it without permission.

Keeping retrieval and generation local helps keep everything private. If you’re handling personal or confidential data, double-check your security settings.

Step 9: Gather Feedback and Improve

After your app is live, listen to your users. Ask them what works and what can be better. Use their feedback to improve.

Mobile RAG implementation is a continuous process, and small tweaks can make a big difference.

By following these steps, you will create a mobile RAG app that is fast, private, and always available. Don’t be afraid to experiment and learn as you go.

Popular Tools & Frameworks for On Device RAG

You’ve got the idea. Now it’s time to actually build.

Choosing the right tools can make your RAG app project much smoother. There are now more options than ever for on device AI, on device LLM, and mobile vector search.

Let’s look at some popular choices and how they fit into your workflow.

- Lightweight LLMs You Can Run on Phones

At the heart of every RAG app is a model that can generate responses based on the retrieved content. Here’s what’s actually usable on mobile:

- Phi-2 (2.7B) – Strong performance for its size, with a modest memory footprint.

- Mistral 7B (4-bit quantized) – A bit heavier, but manageable on newer phones.

- Gemma 2B – Good middle-ground between quality and size.

- TinyLlama (1.1B) – Built for edge devices and ultra-low memory environments.

Depending on the model and platform, you can run them with:

- GGUF format via GGML-based runtimes.

- ONNX Runtime for Android or cross-platform setups.

- CoreML for native iOS performance.

- TensorFlow Lite, which supports quantized transformer models.

For more guidance on deployment methods, take a look at Mobile SDKs vs APIs. It explains where SDKs shine and where APIs might make more sense in hybrid setups.

- Mobile Vector Database Options

You need a fast way to search embeddings locally, and this is where Mobile vector databases come into play:

| Tool | Ideal For | Pros | Notes |
|---|---|---|---|
| Faiss (Mobile) | General mobile use | Fast, widely used | Needs a mobile-friendly build |
| Qdrant (Edge) | Rust/Edge-native apps | Lightweight, fast | Supports WASM, embedded mode |
| SQLite + HNSW | Simpler use cases | Easy to integrate | Lower performance but portable |

These engines let you implement vector search without any internet dependency. Perfect for Offline RAG, especially where latency matters.

- Embedding Models & Inference Engines

You can’t skip this. Embeddings are the bridge between input and retrieval.

- Use MiniLM, E5-small, or BGE-small for high-quality, compact embeddings

- Use ONNX Runtime Mobile or TensorFlow Lite to run these on-device

- Precompute and store vectors if the data doesn’t change often

You’ll need this part in almost every RAG architecture for mobile apps. Even with a precomputed pipeline, user queries usually still have to be embedded on-device at runtime.

- RAG-Friendly SDKs and ML Runtimes

Frameworks help you keep things clean and maintainable:

- ML Kit (Android) – Great for integrating image/text/voice alongside RAG.

- CoreML – Seamless integration on iOS.

- ONNX Runtime – Hardware acceleration on Android and cross-platform.

- TensorFlow Lite – Best balance between performance and portability.

- Hugging Face Transformers + Optimum – For model export and quantization.

If your app is chat-focused or needs GPT-style flow, don’t miss Build ChatGPT-Powered Apps. It breaks down how to manage both user interaction and model serving, locally or in hybrid mode.

- Helpful Tools for Deployment & Optimization

Let’s make it easier to ship:

- BentoML – Package and serve models, useful for the server side of a hybrid setup

- GGML / GGUF – Lightweight runtime formats for quantized models

- LoRA / QLoRA – Fine-tune on your private data with minimal cost

- Transformers.js / Transformers Swift – Front-end focused deployment helpers

Before you pick your tools, test your target devices. A newer iPhone can easily handle a quantized 2B model, but older Android devices may struggle.

For help picking the right tools, check out our deep dive on Best Mobile AI Frameworks in 2025: From ONNX to CoreML and TensorFlow Lite.

Choose a flexible stack that can scale down when needed.

With the right setup, on-device RAG becomes more than a single feature: it becomes the foundation of a responsive, private, and flexible mobile experience.

Whether you are targeting healthcare, education, finance, or personal productivity, these tools help you get there without compromise.

Want help matching tools to your use case? Just ask, and we can recommend a sample stack based on your app type.

Best Practices for Mobile RAG Implementation

Getting on device RAG to work is one thing. Making it smooth, responsive, and usable in the real world? That takes some careful choices.

Here are some best practices to keep in mind as you work on your mobile RAG implementation.

- Keep Models Lightweight

Use quantized LLMs whenever possible (e.g., 4-bit or int8). These models drastically reduce memory usage without hurting quality too much.

It’s the easiest way to make mobile RAG usable on most smartphones.

Smaller isn’t always worse. For many apps, a 2B model performs just fine if your retrieval quality is solid.

- Precompute When You Can

If your knowledge base is static, generate embeddings ahead of time. Don’t make the phone do that work unless it really has to. This saves power and shortens response time.

For dynamic data (like chat history), keep an on-device embedding model handy, but cache aggressively.

- Limit Vector Search Scope

You don’t need thousands of chunks for every question. Keep your Mobile vector database tight, well-chunked, relevant, and no larger than necessary.

Limit top-k retrievals to around 3–5. More chunks make the prompt longer, which slows down generation on the phone, and usually don’t improve answers much.

- Stay Local, Stay Private

Use On-device AI retrieval wherever possible. No network calls, no servers, no data leaving the phone. Users appreciate privacy, especially for sensitive or enterprise-grade apps.

If you must go hybrid, make sure users know when and why.

- Test on Low-End Devices Too

A blazing-fast prototype on your flagship phone might crawl on a mid-range device. Always test your Mobile RAG implementation on a range of hardware, especially older Androids and budget iPhones.

Use performance monitors to track RAM use, battery drain, and inference time.

- Use Smart Caching

Cache embedding results, previous queries, and even full answers when you can. It’s a huge speed boost and saves compute cycles. Most users won’t ask the same thing twice in five minutes, but some will.
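A minimal sketch of answer caching, assuming a small in-memory LRU (least recently used) cache is enough; the class and parameter names are hypothetical. Normalizing the question means trivial variations such as "Reset unit?" and "reset  unit?" share one entry.

```python
from __future__ import annotations

from collections import OrderedDict
from typing import Callable


class AnswerCache:
    """Small LRU cache of answers, keyed by a normalized question string."""

    def __init__(self, max_entries: int = 64) -> None:
        self.max_entries = max_entries
        self._entries: OrderedDict[str, str] = OrderedDict()

    @staticmethod
    def _key(question: str) -> str:
        # Lowercase and collapse whitespace so trivial variations hit the same entry.
        return " ".join(question.lower().split())

    def get_or_compute(self, question: str, compute: Callable[[str], str]) -> str:
        key = self._key(question)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        answer = compute(question)  # e.g. run the full retrieve + generate pipeline
        self._entries[key] = answer
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return answer
```

Cached answers go stale when the local knowledge base changes, so clearing the cache after a data update is a sensible default.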

- Offline First, Cloud Optional

Design your RAG app to work offline first. Then (if needed), offer cloud fallback for larger tasks. This way, users still get answers even without Wi-Fi or data.

This hybrid setup also makes RAG apps that must work without internet far more robust.
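An offline-first flow can be as simple as trying the local pipeline first and only falling back to the cloud when the user has opted in and a connection exists, which also keeps users informed about when data leaves the device. A sketch, with hypothetical callables standing in for your local pipeline, cloud client, and connectivity check:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class OfflineFirstRouter:
    local: Callable[[str], Optional[str]]  # returns None if the local model can't handle the request
    cloud: Callable[[str], str]            # hypothetical remote call, used only as a fallback
    is_online: Callable[[], bool]          # hypothetical connectivity check
    user_allows_cloud: bool = False        # explicit opt-in before any data leaves the device

    def answer(self, question: str) -> tuple[str, str]:
        """Return (answer, source), where source is 'local', 'cloud', or 'unavailable'."""
        local_answer = self.local(question)
        if local_answer is not None:
            return local_answer, "local"
        if self.user_allows_cloud and self.is_online():
            return self.cloud(question), "cloud"
        return "This request can't be handled offline right now.", "unavailable"
```

Returning the source alongside the answer lets the UI show a small indicator whenever a response came from the cloud.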

- Modular Design

Follow a composable architecture style. Keep your embedding model, vector search, and LLM separate. That way, you can swap pieces without rewriting your entire flow.

Makes testing and debugging easier, too.

- Fine-Tune Retrieval Before Generation

A weak search leads to bad answers, even with a good model. Spend time improving your chunking strategy and vector quality. Local retrieval generation is only as good as the context it pulls in.

- Profile Everything

Benchmark on-device performance early. Measure:

- Time to embed

- Time to retrieve

- Time to generate

- Memory usage

- Heat generation (especially during generation)

It’s better to adjust before your users hit a wall.
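For the timing measurements, a small per-stage timer is often enough to start with. A sketch using only Python's standard library (the `StageTimer` name is hypothetical); memory, battery, and heat are better measured with platform tools such as the Android Studio profiler or Xcode Instruments.

```python
from __future__ import annotations

import time
from contextlib import contextmanager
from typing import Iterator


class StageTimer:
    """Record wall-clock time per pipeline stage (embed, retrieve, generate, ...)."""

    def __init__(self) -> None:
        self.timings_ms: dict[str, float] = {}

    @contextmanager
    def stage(self, name: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the stage raises, so failures still show up in timings.
            self.timings_ms[name] = (time.perf_counter() - start) * 1000.0

    def report(self) -> str:
        return ", ".join(f"{name}: {ms:.1f} ms" for name, ms in self.timings_ms.items())
```

Wrap each step of the pipeline in `with timer.stage("embed"):` and so on, then log `timer.report()` on each test device to see which stage dominates.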

Done right, your Mobile app RAG can feel instant, intuitive, and helpful, even offline.

Whether you’re building a secure on-device RAG implementation for enterprise or a lightweight RAG app for students, these small details will make a big difference.

Real-World Example: Simple Offline RAG Chatbot

Let’s say you want to build a lightweight, offline Q&A chatbot. It doesn’t need the internet. It doesn’t send anything to the cloud.

And it answers questions based on a local set of documents, right on the phone.

Here’s what that can look like in action.

Use Case

Imagine a field technician who needs instant help while servicing equipment in a rural area.

They’ve got no signal. But your app has all the manuals and instructions loaded locally. The chatbot answers questions like:

- “What’s the error code 306 fix?”

- “How to reset the hydraulic unit?”

This is the perfect fit for Mobile RAG and Offline RAG setups.

What You’ll Need

- 1 quantized On-device LLM (e.g., Phi-2 4-bit)

- 1 Mobile vector database (Faiss or Qdrant Lite)

- A few technical PDFs, chunked and preprocessed

- Precomputed embeddings using MiniLM

- A mobile device (Android or iOS)

Implementation Steps

- Preprocess Docs:
  - Chunk the PDFs into small, readable sections (2–3 sentences each).
  - Generate embeddings for each chunk using MiniLM (off-device).

- Store Embeddings Locally:
  - Save these vectors into a mobile-compatible vector store like Faiss or Qdrant.

- Embed the User Query (On-Device):
  - Use a small encoder (e.g., MiniLM converted to ONNX or TFLite) to convert the user’s question into a vector.

- Run Vector Search:
  - Fetch the top 3–5 most similar chunks using your on-device retrieval engine.

- Feed Context to the LLM:
  - Pass both the user’s question and the retrieved chunks into your on-device LLM.

- Display Answer:
  - Return the response to the user through a clean UI: text, voice, or even haptic feedback.
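To make the preprocessing, query-embedding, and vector-search steps concrete without any model files, here is a toy walk-through in plain Python. It is only a sketch: `ToyEmbedder` is a bag-of-words stand-in for MiniLM (it matches exact words, not meaning), the manual chunks are placeholder text, and the generation step is left as a comment.

```python
from __future__ import annotations

import math
import re

# Placeholder chunks standing in for text extracted from the technical PDFs.
MANUAL_CHUNKS = [
    "Placeholder text: steps to fix hydraulic error 306.",
    "Placeholder text: how to reset the hydraulic unit.",
    "Placeholder text: air filter replacement schedule.",
]


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyEmbedder:
    """Bag-of-words stand-in for a real encoder such as MiniLM.

    It only matches exact words, not meaning, but it returns unit-length
    vectors so that a dot product equals cosine similarity.
    """

    def __init__(self, corpus: list[str]) -> None:
        vocab = sorted({tok for doc in corpus for tok in tokenize(doc)})
        self.index = {tok: i for i, tok in enumerate(vocab)}

    def embed(self, text: str) -> list[float]:
        vec = [0.0] * len(self.index)
        for tok in tokenize(text):
            if tok in self.index:  # words outside the vocabulary are ignored
                vec[self.index[tok]] += 1.0
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0
        return [x / norm for x in vec]


def build_index(chunks: list[str], embedder: ToyEmbedder) -> list[tuple[list[float], str]]:
    # Preprocessing step: in a real app these vectors are computed off-device and bundled.
    return [(embedder.embed(chunk), chunk) for chunk in chunks]


def retrieve(question: str, index: list[tuple[list[float], str]], embedder: ToyEmbedder, k: int = 3) -> list[str]:
    query = embedder.embed(question)  # embed the user query
    scored = [(sum(a * b for a, b in zip(query, vec)), chunk) for vec, chunk in index]
    scored.sort(key=lambda item: item[0], reverse=True)  # run the vector search
    return [chunk for _, chunk in scored[:k]]


if __name__ == "__main__":
    embedder = ToyEmbedder(MANUAL_CHUNKS)
    index = build_index(MANUAL_CHUNKS, embedder)
    for chunk in retrieve("How do I fix hydraulic error 306?", index, embedder, k=2):
        print(chunk)
    # Next step (not shown): pass the question and these chunks to the on-device LLM.
```

Replacing `ToyEmbedder` with a real on-device encoder and the list scan with your vector store keeps the same shape while adding semantic matching.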

Example Prompt to the LLM

You are a helpful assistant for troubleshooting machines.

Context:

– [chunk 1]

– [chunk 2]

– [chunk 3]

User Question: How do I fix hydraulic error 306?

Answer:
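A prompt like the one above is usually assembled in code from the retrieved chunks. A minimal sketch that produces a similar layout (the function and constant names are hypothetical). Many small instruction-tuned models expect a specific chat template, so check your model's documentation before relying on a plain-text layout like this.

```python
from __future__ import annotations

from typing import Sequence

SYSTEM_LINE = "You are a helpful assistant for troubleshooting machines."


def build_prompt(question: str, chunks: Sequence[str], max_chunks: int = 5) -> str:
    """Assemble instruction, retrieved context, user question, and an answer cue."""
    context = "\n".join(f"- {chunk.strip()}" for chunk in list(chunks)[:max_chunks])
    return f"{SYSTEM_LINE}\n\nContext:\n{context}\n\nUser Question: {question.strip()}\n\nAnswer:"
```

Capping the number of chunks keeps the prompt short, which matters on phones where every extra token adds generation time.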

Deployment Notes

- Use TensorFlow Lite or ONNX Runtime to run models on-device.

- Compress your vector DB and model weights to reduce app size.

- Optimize inference paths to prevent overheating and lag.

- Cache recent queries in memory.
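On the vector side, one common way to compress is to store embeddings as 8-bit integers instead of 32-bit floats, roughly a 4x size reduction at some cost in precision. A sketch of simple symmetric per-vector quantization, offered as an assumption rather than a recommendation; engines such as Faiss offer their own, better-tuned options (for example, product quantization). Re-check retrieval quality after compressing.

```python
from __future__ import annotations

from array import array


def quantize_int8(vec: list[float]) -> tuple[array, float]:
    """Symmetric per-vector int8 quantization: int8 codes plus one float scale."""
    max_abs = max((abs(x) for x in vec), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = array("b", [round(x / scale) for x in vec])  # each code fits in -127..127
    return codes, scale


def dequantize_int8(codes: array, scale: float) -> list[float]:
    return [c * scale for c in codes]


if __name__ == "__main__":
    vec = [0.12, -0.5, 0.33, 0.0]
    codes, scale = quantize_int8(vec)
    restored = dequantize_int8(codes, scale)
    print("float32 bytes:", len(array("f", vec).tobytes()))  # 4 bytes per value
    print("int8 bytes:", len(codes.tobytes()))               # 1 byte per value (plus the scale)
    print("max error:", max(abs(a - b) for a, b in zip(vec, restored)))
```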

This small prototype shows the full flow of a mobile RAG implementation: retrieval, generation, offline capability, and usability.

The same structure can be scaled up for education apps, enterprise support tools, or private personal assistants.

Challenges and Limitations of On-Device RAG

| Challenge | Details |
|---|---|
| Model Size Limits | Mobile devices can’t handle large models easily. You’ll need quantization and tight memory management. |
| Battery & Heat Issues | On-device generation can drain battery and cause overheating, especially during longer interactions. |
| Storage Constraints | Models, embeddings, and local data can quickly inflate your app’s size, sometimes to over 1 GB. |
| No Real-Time Updates | Local data doesn’t update automatically. Without sync logic, your RAG system can go stale. |
| Hardware Fragmentation | Performance varies across devices. What works on a flagship may lag on mid-range or older phones. |
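Sync logic does not have to re-embed everything. A sketch, assuming each chunk has a stable ID and the app stores a hash of the text it last embedded (the function names are hypothetical): when new content arrives, for example while the device is online, only new or changed chunks are re-embedded and removed chunks are deleted.

```python
from __future__ import annotations

import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(stored_hashes: dict[str, str], latest_docs: dict[str, str]) -> tuple[list[str], list[str]]:
    """Compare stored chunk hashes with the latest content.

    Returns (ids_to_embed, ids_to_delete): new or changed chunks must be
    re-embedded; chunks that no longer exist should be removed from the store.
    """
    to_embed = [cid for cid, text in latest_docs.items() if stored_hashes.get(cid) != content_hash(text)]
    to_delete = [cid for cid in stored_hashes if cid not in latest_docs]
    return sorted(to_embed), sorted(to_delete)
```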

How Boolean Inc. Can Help You with On Device RAG

Getting On device RAG to actually work well on mobile isn’t just about plugging in a few tools.

It takes smart decisions around models, memory, battery use, and user experience. This is where Boolean Inc. can step in and make things easier.

We help teams build mobile-first AI features that run fast, stay private, and work offline, whether you are starting from scratch or improving what you already have.

Need help choosing the right model? Struggling with vector search? Not sure how to keep your app size down? We’ve worked through all of that before. And we’re happy to guide you through it, too.

Want to build a fast, secure, and usable RAG mobile app?

Let’s talk.

Conclusion

Building on-device RAG might seem a bit technical at first, but it’s absolutely doable and worth it. You get faster responses, better privacy, and apps that don’t depend on a stable internet connection.

It’s not about chasing buzzwords. It’s about building useful, reliable tools that people can trust, even when they are offline.

Start simple. Pay attention to what matters. And if you ever feel stuck or uncertain, remember that you don’t have to figure this out alone.

FAQs

- Can RAG really run fully on a smartphone?

Yes. With smaller models and smart optimization, you can run the full RAG loop (embedding, retrieval, and generation) right on modern devices.

- Do I need internet for on device RAG to work?

Nope. That’s the point. Everything can run locally, so your app stays usable even without a connection.

- What kind of LLM should I use for mobile?

Look for quantized models of roughly 1–4B parameters, like Phi-2, Gemma 2B, or TinyLlama, running through TFLite, ONNX Runtime, or GGUF-based runtimes. 4-bit Mistral 7B variants can also work on newer, high-end phones.

- Is vector search fast enough on phones?

Yes, especially with tools like Faiss or Qdrant Lite. Keep your dataset small and the retrieval top-k low for quick results.

- Can On-device RAG handle voice input too?

Absolutely. Combine with on-device speech-to-text, and you can build chatbots or helpers that respond to voice, offline.